Biomedical research now produces data at every scale, from basic disease mechanisms to clinical trials, and this surge has created both a wealth of information and the challenge of analyzing it effectively. This is where Artificial Intelligence (AI) has proven transformative. Using AI algorithms, researchers can decode complex biomedical networks and reveal hidden patterns and relationships that might elude human analysis. This, in turn, can deepen our understanding of diseases and inform better treatment strategies.

Unveiling Targets via Deep Learning Models

The spotlight in recent years has been on deep learning algorithms, particularly deep neural networks. Built from multiple hidden layers that process data and extract features in succession, these networks have attracted significant attention and achieved notable success in the pharmaceutical arena. From generative adversarial networks (GANs) to transfer learning techniques, deep learning is proving invaluable in healthcare domains, including small-molecule design, aging research, and drug prediction. Applying this technology to urgent and underserved clinical needs, researchers have identified therapeutic targets for amyotrophic lateral sclerosis (ALS) and age-related diseases, as well as targets for controlling the embryonic–fetal transition. These findings not only enrich our understanding of biology but also hold the promise of novel therapeutic interventions.

AI’s Role in Target Prioritization

To optimize target discovery, AI draws on multimodal approaches that combine omics data (genomics, transcriptomics, proteomics, etc.) with text-based data (publications, clinical trials, patents). By incorporating diverse information sources, AI refines target lists to align with specific research goals. Moreover, large language models such as BioGPT and ChatPandaGPT excel at biomedical text mining. Pre-trained on extensive text data, these models rapidly connect diseases, genes, and biological processes, aiding the identification of disease mechanisms, drug targets, and biomarkers. However, while these models accelerate hypothesis generation, they learn from the existing literature, so they may inadvertently perpetuate human biases and may be unable to identify entirely novel targets.
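
As a minimal sketch of how evidence from several sources might be combined into one ranked target list, the example below uses a simple weighted sum. The gene labels, scores, weights, and the `prioritize` helper are all hypothetical; real pipelines use far richer models, and nothing here reflects how any specific tool works.

```python
from dataclasses import dataclass


@dataclass
class TargetEvidence:
    gene: str
    omics_score: float  # assumed: omics-derived evidence scaled to [0, 1]
    text_score: float   # assumed: literature/trial/patent support scaled to [0, 1]


def prioritize(targets, omics_weight=0.6, text_weight=0.4):
    """Rank targets by a weighted sum of omics- and text-derived evidence."""
    def combined(target):
        return omics_weight * target.omics_score + text_weight * target.text_score

    return sorted(targets, key=combined, reverse=True)


# Hypothetical candidates; labels and scores are invented for illustration.
candidates = [
    TargetEvidence("GENE_A", omics_score=0.9, text_score=0.4),
    TargetEvidence("GENE_B", omics_score=0.5, text_score=0.8),
    TargetEvidence("GENE_C", omics_score=0.3, text_score=0.3),
]

# Default weights favor omics evidence; shifting weight to text changes the order.
print([t.gene for t in prioritize(candidates)])
print([t.gene for t in prioritize(candidates, omics_weight=0.2, text_weight=0.8)])
```

Changing the weights is the simplest way to align the list with a specific research goal, for example favoring literature support when a well-understood target is preferred.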

Unlocking Insights with AI-generated Synthetic Data

In data-driven research, AI-generated synthetic data has emerged as a promising way to address challenges related to rare diseases and underrepresented populations. By mimicking real-world patterns and characteristics, synthetic data can improve data completeness, reduce bias, and expand our understanding of complex biological scenarios.

A Remedy for Data Scarcity

Rare diseases and underrepresented populations often pose a formidable challenge when it comes to acquiring sufficient and representative data. The scarcity of data can hamper our ability to uncover critical insights and draw accurate conclusions. This is where AI-generated synthetic data steps in as a potent remedy. By leveraging the power of artificial intelligence algorithms, synthetic data can be fashioned to simulate diverse scenarios, effectively bridging the data gap and enabling researchers to explore a broader spectrum of possibilities.

Completing the Data Puzzle

One of the remarkable attributes of AI-generated synthetic data lies in its capacity to enhance data completeness. These synthetic datasets can fill in the gaps left by limited or unavailable real-world data, providing a more comprehensive view of the biological landscape. This newfound completeness can open avenues for analysis and hypothesis testing that were previously impeded by data insufficiency.

Tackling Bias and Imbalance

Bias and imbalances in datasets can distort research outcomes and hinder the development of accurate models. AI-generated synthetic data emerges as an innovative solution to address these issues. By creating data points that represent underrepresented populations or neglected disease categories, synthetic data can rectify the skewed perspectives present in traditional datasets. This has the potential to mitigate bias and offer a more accurate portrayal of complex biological systems.
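
To make the idea of adding data points for an underrepresented group concrete, here is a deliberately simple sketch. It does not use a generative AI model: it swaps in plain linear interpolation between random pairs of existing samples (a simplified, SMOTE-style idea) as a stand-in. The function name and the measurements are hypothetical.

```python
import random


def interpolate_minority(samples, n_new, seed=0):
    """Create n_new synthetic points by interpolating between random pairs
    of existing minority-group samples."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to interpolate")
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(samples, 2)
        t = rng.random()  # position along the segment from a to b
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic


# Hypothetical two-feature measurements for an underrepresented group.
minority = [[1.0, 10.0], [2.0, 12.0], [1.5, 11.0]]
new_points = interpolate_minority(minority, n_new=7)
augmented = minority + new_points
print(len(augmented))  # 3 original + 7 synthetic samples
```

Because every synthetic point lies between two observed points, this approach cannot produce values outside the observed range, which is one reason generated data still needs validation against real-world data.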

The Crucial Role of Rigorous Validation

However, the utilization of AI-generated synthetic data necessitates rigorous validation to ensure its relevance and reliability. A multi-faceted approach to validation is essential, encompassing various dimensions of data quality and fidelity. Comparative analyses, wherein synthetic data is systematically compared against real-world data, can provide insights into the alignment of synthetic data with existing knowledge.
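
One possible form of comparative analysis is to check whether a synthetic feature follows the same distribution as its real counterpart. The sketch below computes the two-sample Kolmogorov-Smirnov statistic by hand using only the standard library. The sample values are invented, and the choice of this particular statistic is an assumption rather than something the validation approach above prescribes.

```python
from bisect import bisect_right


def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical cumulative distribution functions of the two samples."""
    real_sorted = sorted(real)
    synth_sorted = sorted(synthetic)
    gap = 0.0
    # The largest gap always occurs at one of the observed values.
    for value in real_sorted + synth_sorted:
        cdf_real = bisect_right(real_sorted, value) / len(real_sorted)
        cdf_synth = bisect_right(synth_sorted, value) / len(synth_sorted)
        gap = max(gap, abs(cdf_real - cdf_synth))
    return gap


# Hypothetical measurements of one feature.
real = [4.8, 5.1, 5.3, 5.9, 6.2]
close_synthetic = [4.9, 5.0, 5.4, 6.0, 6.1]    # resembles the real data
shifted_synthetic = [7.8, 8.1, 8.3, 8.9, 9.2]  # systematically too high

print(ks_statistic(real, close_synthetic))    # small gap: distributions agree
print(ks_statistic(real, shifted_synthetic))  # gap of 1.0: no overlap at all
```

A statistic near 0 suggests the synthetic feature tracks the real one, while a value near 1 flags a mismatch worth investigating. This checks one feature at a time and says nothing about relationships between features.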

Functional Assessments and Expert Insight

Functional assessments serve as a critical pillar of validation. These assessments delve into whether the synthetic data accurately captures the intricate functional relationships and biological mechanisms that underlie real-world systems. Such assessments help gauge the extent to which synthetic data reflects the underlying biology, thereby bolstering its credibility.

Guided by Domain Experts

In synthetic data validation, the insights of domain experts are invaluable. Expert reviews can shed light on the biological plausibility of the synthetic data, offering a perspective that complements quantitative analyses. Domain experts can identify subtle discrepancies that might evade purely algorithmic scrutiny.

Final Assurance of Quality

Validation is the linchpin that ensures the quality and reliability of AI-generated synthetic data. Only through stringent validation that combines comparative analyses, functional assessments, and expert insight can synthetic data evolve from a promising concept into a trusted tool that enriches scientific discovery and advances our understanding of rare diseases and underrepresented populations.

Balancing High-Confidence and Novel Targets

The process of selecting therapeutic targets in drug discovery is a complex endeavor that requires a delicate equilibrium between two crucial factors: novelty and confidence. On one hand, there exists a wealth of well-established and high-confidence targets with a solid foundation of research and evidence. On the other hand, the pursuit of novel targets is of paramount importance to drive innovation and uncover uncharted therapeutic avenues. The emergence of AI-assisted techniques, particularly in the realm of natural language processing, is playing a pivotal role in this intricate balancing act.

The Dichotomy of Novelty and Confidence

Established targets, often referred to as “privileged” targets, have a substantial body of supporting evidence and are associated with a higher level of confidence in their therapeutic potential. These targets have been extensively studied, with their roles in disease pathways well-characterized. They offer a predictable avenue for drug development and have been responsible for a significant portion of approved drugs. However, relying solely on established targets could limit the scope of innovation and overlook unexplored therapeutic possibilities.

The Role of Novel Targets in Innovation

Novel targets represent uncharted territory, holding the promise of breakthroughs and paradigm shifts in disease treatment. These targets might offer unique insights into disease mechanisms and pathways, potentially unlocking transformative therapies that were previously unimagined. The pursuit of novel targets is essential for pushing the boundaries of medical science and addressing unmet medical needs, especially in areas where current treatments fall short.

AI-Powered Quantification of Novelty and Confidence

AI-powered natural language processing (NLP) techniques, exemplified by tools like TIN-X, bring a data-driven approach to the challenge of balancing novelty and confidence in target selection. These techniques mine vast amounts of scientific literature and clinical reports to quantify the novelty and confidence of potential targets. By analyzing the scarcity of target-associated publications (a proxy for novelty) and the strength of the association between a target and a disorder (a proxy for confidence), these tools provide quantitative measures that guide researchers in making informed decisions.
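
One way to turn publication scarcity and target-disorder association into numbers is fractional counting, where each paper's contribution is split among the entities it mentions. The sketch below is an illustration of that idea only. It is not TIN-X's code or API, and the paper records, gene labels, and disease labels are hypothetical.

```python
def novelty(target, papers):
    """Inverse of the target's fractional publication count.
    Each paper contributes 1 / (number of targets it mentions)."""
    fractional = sum(
        1 / len(p["targets"]) for p in papers if target in p["targets"]
    )
    return 1 / fractional if fractional else float("inf")


def association(target, disease, papers):
    """Fractional co-mention count. Each paper mentioning both contributes
    1 / (number of targets mentioned * number of diseases mentioned)."""
    return sum(
        1 / (len(p["targets"]) * len(p["diseases"]))
        for p in papers
        if target in p["targets"] and disease in p["diseases"]
    )


# Hypothetical corpus: each record lists the targets and diseases in one paper.
papers = [
    {"targets": {"GENE_A"}, "diseases": {"DISEASE_X"}},
    {"targets": {"GENE_A", "GENE_B"}, "diseases": {"DISEASE_X"}},
    {"targets": {"GENE_A"}, "diseases": {"DISEASE_X", "DISEASE_Y"}},
    {"targets": {"GENE_B"}, "diseases": {"DISEASE_Y"}},
]

print(novelty("GENE_A", papers), association("GENE_A", "DISEASE_X", papers))
print(novelty("GENE_B", papers), association("GENE_B", "DISEASE_X", papers))
```

In this toy corpus GENE_B is mentioned less often than GENE_A, so it scores as more novel but has weaker support for DISEASE_X, which is exactly the trade-off the two measures are meant to expose.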

Guiding Target Selection and Drug Repurposing

The quantification of target novelty and confidence by AI-assisted NLP tools like TIN-X offers tangible benefits in target selection and drug repurposing efforts. Researchers can use these measures to navigate the complex landscape of potential targets, identifying those that strike a balance between being novel and having a reasonable degree of confidence. This aids in the selection of targets that have the potential to drive innovation while still being grounded in scientific evidence.
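
Given a novelty score and a confidence score per target, one reasonable way to find targets that "strike a balance" is to keep only those that no other target beats on both measures (a Pareto front), then apply a minimum confidence threshold. The scores, labels, and threshold below are hypothetical, and this selection rule is an assumption, not a documented feature of any tool.

```python
def pareto_front(targets):
    """Keep targets that no other target matches or beats on both
    novelty and confidence while strictly beating it on at least one."""
    front = []
    for name, nov, conf in targets:
        dominated = any(
            n2 >= nov and c2 >= conf and (n2 > nov or c2 > conf)
            for _, n2, c2 in targets
        )
        if not dominated:
            front.append(name)
    return front


def shortlist(targets, min_confidence):
    """Pareto-optimal targets that also meet a minimum confidence level."""
    confident = [t for t in targets if t[2] >= min_confidence]
    return pareto_front(confident)


# Hypothetical (name, novelty, confidence) triples, both scaled to [0, 1].
scored = [
    ("GENE_A", 0.9, 0.2),  # very novel, weak evidence
    ("GENE_B", 0.6, 0.6),  # balanced
    ("GENE_C", 0.2, 0.9),  # well established
    ("GENE_D", 0.5, 0.5),  # beaten by GENE_B on both measures
    ("GENE_E", 0.1, 0.3),  # beaten by several targets
]

print(pareto_front(scored))
print(shortlist(scored, min_confidence=0.5))
```

The Pareto front keeps the full range of defensible trade-offs, and the threshold then lets a team decide how much evidence it requires before committing resources.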

Accelerating Drug Discovery

Moreover, the quantification of target novelty and confidence accelerates drug discovery by streamlining the identification of promising candidates. AI-assisted NLP techniques enable researchers to rapidly identify targets that exhibit novelty in specific disease contexts, facilitating the design of targeted therapies and repurposing existing drugs for new indications. This data-driven approach enhances the efficiency of the drug discovery process while maintaining a focus on both innovation and scientific rigor.

AI-Validated Targets: From Algorithms to Experiments

Moving AI-identified targets from computational prediction to experimental validation is a critical step in the drug discovery process. AI has been instrumental in proposing potential therapeutic targets for various diseases, and these predictions are now being rigorously tested through a range of experimental approaches.

Confirming AI-Proposed Targets Across Model Systems

Targets identified through AI algorithms span a spectrum of disease contexts, from neurodegenerative disorders like ALS to various age-related diseases. These candidates have emerged from the analysis of complex datasets, which reveals connections and patterns that might otherwise go unnoticed. To verify these AI-identified targets, researchers are turning to diverse model systems.

In the case of ALS, AI-driven predictions of therapeutic targets have been put to the test in models ranging from the fruit fly Drosophila to human neuronal cultures. These models allow researchers to assess whether the manipulation of the proposed targets indeed leads to the desired therapeutic effects, validating the AI-generated hypotheses.

Diverse Validation Approaches for Novel Genes and Strategies

The validation process goes beyond confirming therapeutic targets; it extends to the discovery of novel genes associated with diseases and the testing of promising therapeutic strategies. AI algorithms have uncovered previously unknown genes linked to diseases, expanding our understanding of the genetic underpinnings of complex disorders. These novel genes are being validated through techniques such as gene knockout using CRISPR-Cas9 technology in relevant cell types or organisms.

Additionally, promising therapeutic strategies identified through AI, such as the inhibition of specific proteins or pathways, are subjected to rigorous validation experiments. These experiments might involve genetic manipulations, functional assays, and the assessment of therapeutic effects in relevant disease models.

Revolutionizing Laboratories through Automation

In parallel with the advancement of AI in target identification, another transformative trend is the integration of AI with laboratory automation and robotics. The synergy between AI algorithms and robotics is streamlining the traditional laboratory environment, significantly enhancing research efficiency and reproducibility.

Automation is changing how experiments are run by increasing the rate of data generation, reducing human-induced variation, and improving data quality. This is particularly relevant when validating AI-identified targets, as automation enables researchers to perform large-scale validation experiments with precision and consistency. Automated systems can handle many aspects of an experiment, from sample preparation and handling to data collection and analysis.

A Glimpse into the Future of Research

The convergence of AI-driven target identification with advanced laboratory automation has the potential to reshape the landscape of biomedical research. AI-identified targets are no longer confined to algorithms and simulations; they are undergoing rigorous experimental validation to confirm their therapeutic potential. The integration of AI and robotics is expediting the validation process, enabling researchers to conduct experiments on a scale and with a level of precision that was previously unimaginable.

As this trend continues, we can anticipate an acceleration of the drug discovery pipeline, with AI-identified targets transitioning more seamlessly from predictions to clinical applications. The research environment is undergoing a paradigm shift, where the marriage of AI and automation is unlocking new possibilities and speeding up the translation of scientific insights into tangible therapeutic interventions.

Future Outlook and Challenges

AI’s impact is poised to expand further, particularly in addressing complex diseases, infectious outbreaks, and the combination of therapeutic targets. Ethical considerations, data privacy, and AI interpretability remain vital challenges. Moreover, while AI expedites the early stages of drug discovery, it cannot significantly shorten the time needed for clinical trials, which is governed by ethical, regulatory, and practical considerations.

Subscribe

to get our

LATEST NEWS

Related Posts

Drug Discovery Biology

Unveiling the Elusive: The Prevalence Problem in Drug Discovery

When it comes to developing new drugs, researchers encounter a formidable challenge: the prevalence problem.

Drug Discovery Biology

Navigating Drug Development: Innovations in Preclinical Testing

As we navigate the complexities of drug development, a harmonious blend of traditional wisdom and innovative technologies will be paramount.

Read More Articles

Synthetic Chemistry’s Potential in Deciphering Antimicrobial Peptides

The saga of antimicrobial peptides unfolds as a testament to scientific ingenuity and therapeutic resilience.

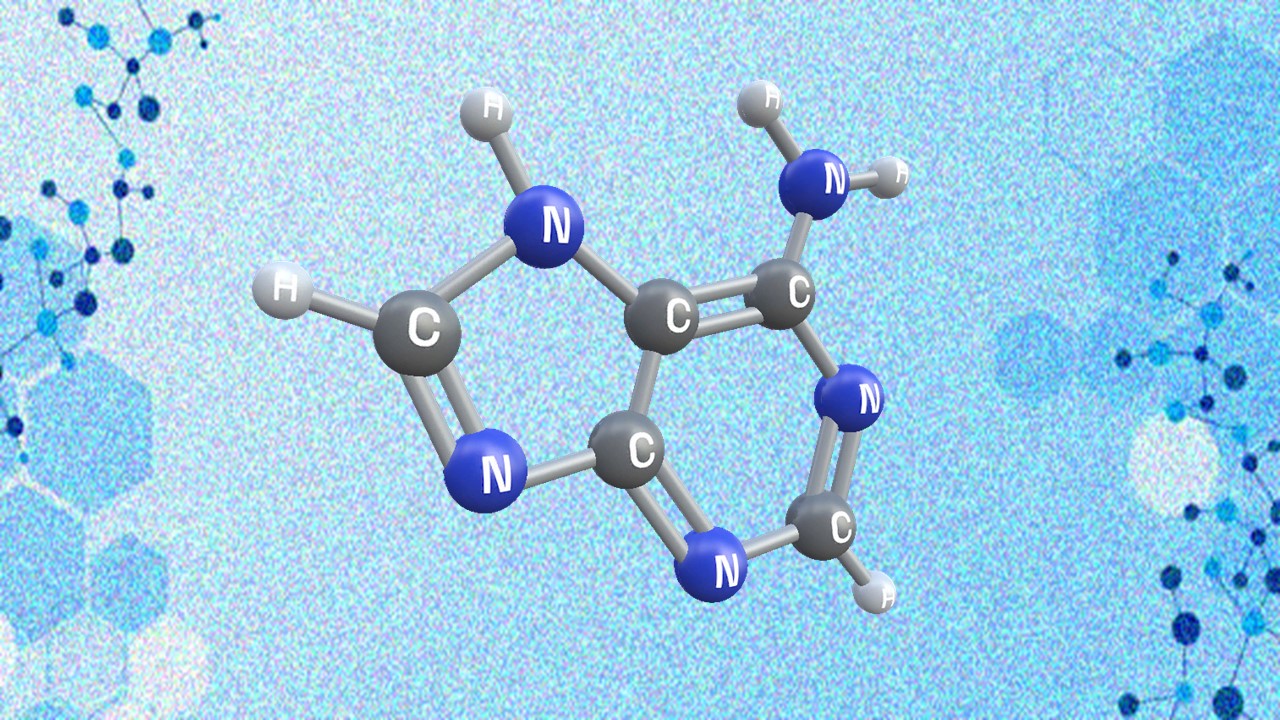

Appreciating the Therapeutic Versatility of the Adenine Scaffold: From Biological Signaling to Disease Treatment

Researchers are utilizing adenine analogs to create potent inhibitors and agonists, targeting vital cellular pathways from cancer to infectious diseases.

Bioavailability and Bioequivalence: The Makings of Similar and “Close Enough” Drug Formulations

Scientists are striving to understand bioavailability complexities to ensure the equivalence of drug formulations from different manufacturers, crucial for clinical effectiveness.