Clinical research continues to face calls to increase the diversity of patient pools. Regulators and patient advocacy groups both demand greater inclusivity in clinical development, but so does common sense: we should be developing drugs with the entire market, in its full diversity, in mind, not a restricted subset. However, reaching traditionally excluded and underserved groups is easier said than done. Artificial Intelligence (AI) has made significant inroads into the life sciences in the last few years, and many are already examining how it can be employed to improve diversity in clinical investigations, as well as the conclusions that are drawn from them.

We examined the inherent need for diversity in an earlier article, highlighting obvious flaws in medical practice that were born of a lack of inclusivity in research. These include the long-held assumption that UV exposure is a contributing factor for skin cancer incidence, something that later turned out to be untrue for many non-white populations. As we progress towards more personalized, precise medicine, it is more important than ever to be cognizant of how products will affect different populations.

Matching Patients to Trials

Traditionally, clinical trials have been heavily concentrated around large urban areas and hospitals that engage with cutting-edge science. Decentralized Clinical Trials already promise to expand the reach of clinical recruitment beyond this limited geography, while removing cost-related barriers such as having to travel to on-site facilities that are remote for many populations, especially rural and older individuals. AI has a crucial role to play in expanding this reach. Integrated technologies can alert physicians, even those who do not routinely keep up to date with clinical trials, to the existence of suitable investigations for their patients.

IQVIA’s Vice President for analytics, Lucas Glass, expounded on the subject of patient-matching in an interview with Medical Technology. The key takeaway is that algorithms are already well-suited to matching investigations with patients and ensuring they meet recruitment diversity goals. The problem remains finding a way to insert such matching into clinical workflows. Rolling out suitable technologies throughout the healthcare infrastructure, and familiarizing healthcare practitioners with a clinical trial landscape that can offer real benefits to their patients, will doubtless prove pivotal for success.
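To make the idea of patient-matching concrete, here is a minimal sketch in Python. It is not the approach described in the interview: the field names (`min_age`, `max_age`, `condition`, `underenrolled_groups`, `group`) and the toy data are hypothetical, and real eligibility criteria are far richer. It filters trials on two basic criteria, then lists first those trials that are still short of their recruitment goal for the patient's group.

```python
def match_trials(patient, trials):
    """Return IDs of trials the patient is eligible for, diversity-priority first."""
    matches = []
    for trial in trials:
        in_age_range = trial["min_age"] <= patient["age"] <= trial["max_age"]
        has_condition = trial["condition"] in patient["conditions"]
        if in_age_range and has_condition:
            matches.append(trial)
    # Stable sort: trials still under-enrolled for this patient's group come first.
    matches.sort(key=lambda t: patient["group"] not in t["underenrolled_groups"])
    return [t["id"] for t in matches]


# Hypothetical toy data, for illustration only.
trials = [
    {"id": "T1", "condition": "type 2 diabetes", "min_age": 18, "max_age": 65,
     "underenrolled_groups": {"rural"}},
    {"id": "T2", "condition": "type 2 diabetes", "min_age": 40, "max_age": 80,
     "underenrolled_groups": set()},
    {"id": "T3", "condition": "type 2 diabetes", "min_age": 50, "max_age": 85,
     "underenrolled_groups": {"rural"}},
]
patient = {"age": 70, "conditions": {"type 2 diabetes"}, "group": "rural"}

print(match_trials(patient, trials))
```

In this toy example the patient is too old for T1, and T3 is listed ahead of T2 because it still needs rural participants. A list like this is the kind of output that could be surfaced to a physician inside an existing clinical workflow.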

The Need for Ethical AI

One particularly crucial area of concern for AI in clinical diversity is how AI algorithms are built. While AI has shown potential in removing barriers to research and matching patients to trials that they otherwise would not have had access to, it can also perpetuate existing biases. AI itself is amoral, but it can inherit the prejudices of its creators, depending on how the model is constructed. For example, using nonrepresentative datasets to train machine learning algorithms can lead to skewed conclusions and a failure to account for factors such as sex, socioeconomic status, race, and others.
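One simple safeguard against nonrepresentative training data is to compare the make-up of a dataset with a reference population before any model is trained. The Python sketch below illustrates the idea; the function name, the 5-percentage-point tolerance and the toy records are assumptions made for illustration, not an established standard.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return {group: observed share - reference share} for groups whose
    absolute gap exceeds `tolerance`."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        gap = observed - expected
        if abs(gap) > tolerance:
            gaps[group] = round(gap, 3)
    return gaps


# Hypothetical toy dataset: 8 male records and 2 female records.
records = [{"sex": "male"}] * 8 + [{"sex": "female"}] * 2
gaps = representation_gaps(records, "sex", {"male": 0.5, "female": 0.5})
print(gaps)
```

Here men are over-represented by 30 percentage points and women under-represented by the same margin, so both groups are flagged. The same check can be run for any recorded attribute, such as race, age band or socioeconomic status, provided a trustworthy reference distribution is available.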

Pulse oximeters are a good example of such biases. These devices measure blood oxygenation levels by shining light through the skin; however, highly pigmented skin can interfere with the light signal and lead to inaccurate readings in people with darker skin. Reporting practices compound the problem: a review of 130 medical AI device post-approval evaluations showed that fewer than 13% of these reported critical diversity markers such as sex, race or ethnicity. Considering that such medical devices form the backbone of AI model construction for clinical investigations, especially when they are used at home in the context of a decentralized clinical trial, there is a clear need to do better.

Improving Outcomes for Underserved Populations

A study examined the benefit of training Natural Language Processing (NLP) models on gender-sensitive word embeddings to compensate for the overwhelming representation of men in existing clinical literature. The investigation concluded that NLP algorithms built this way demonstrated significantly improved performance, highlighting how AI can promote diversity as well as improve outcomes for underrepresented demographics.

Other approaches have included employing models to predict differences in adverse events across populations. One example is AwareDX, a model employed to estimate adverse event variance between men and women. It has long been known that women take longer to metabolize most drugs, leading to higher bioactive pharmaceutical concentrations for a longer period of time. The investigators noted that sex could be replaced with other variables of interest to apply the model to different aspects of drug discovery, such as age, to differentiate adverse event report analysis for pediatric patients. Similar efforts will prove crucial in accommodating increased diversity in clinical development, as well as in successfully rolling out new products for diverse populations.
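The point about swapping sex for another variable can be illustrated with a much simpler calculation than AwareDX itself. The Python sketch below is not the AwareDX algorithm: it only computes, for a chosen grouping variable, the fraction of adverse event reports that mention a given event. The report structure, field names and data are hypothetical.

```python
from collections import defaultdict


def event_rate_by_group(reports, group_key, event):
    """Fraction of reports in each group that mention `event`."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for report in reports:
        group = report[group_key]
        totals[group] += 1
        if event in report["events"]:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical adverse event reports, for illustration only.
reports = [
    {"sex": "female", "age_group": "adult", "events": {"nausea"}},
    {"sex": "female", "age_group": "pediatric", "events": {"nausea", "rash"}},
    {"sex": "female", "age_group": "adult", "events": {"headache"}},
    {"sex": "female", "age_group": "adult", "events": set()},
    {"sex": "male", "age_group": "adult", "events": {"nausea"}},
    {"sex": "male", "age_group": "pediatric", "events": {"rash"}},
    {"sex": "male", "age_group": "adult", "events": set()},
    {"sex": "male", "age_group": "adult", "events": {"headache"}},
]

by_sex = event_rate_by_group(reports, "sex", "nausea")
by_age = event_rate_by_group(reports, "age_group", "nausea")
print(by_sex)
print(by_age)
```

Because the grouping variable is a parameter, the same function stratifies by sex or by age group without modification. A real analysis would also need to control for confounders and report volume, which this sketch does not attempt.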

Addressing the AI – Diversity Interface

As the adoption of AI in clinical practice grows, and with good reason, we must strive to increase awareness of its vulnerability to bias. To do so, it is important that regulators, funding agencies and publishers apply pressure to ensure that diversity requirements are met. However, a broader educational approach is also needed: we must recognize the biases that we can introduce into the models we construct.

Artificial Intelligence presents the opportunity to improve patient recruitment by giving clinical trial operators a greater geographical and social reach to recruit from, but this can only happen if the entire healthcare industry is engaged with the technology. The long-standing diversity gaps in clinical literature and datasets also present challenges for interpreting historical results and for training AI models on them. Models have already shown they are able to compensate for the biases present in such datasets, if their constructors are aware of those biases and design the models accordingly. While AI offers unique potential in this area, it is not a panacea; as is often the case with diversity, change must first begin with us.

Join Proventa International’s Clinical Operations Strategy Meeting in Boston to hear more on the importance of diversity in Clinical Trials and the role AI/ML models can play in advancing clinical research. Connect with leading experts and discuss cutting-edge topics with industry stakeholders in closed roundtable meetings!

Subscribe

to get our

LATEST NEWS

Related Posts

AI, Data & Technology

The Power of Unsupervised Learning in Healthcare

In healthcare’s dynamic landscape, the pursuit of deeper insights and precision interventions is paramount, where unsupervised learning emerges as a potent tool for revealing hidden data structures.

Clinical Operations

Supervised Learning: Harnessing Data to Revolutionize Patient Care

As healthcare enters a new era, the integration of ML promises to revolutionize oncology and medicine.

Read More Articles

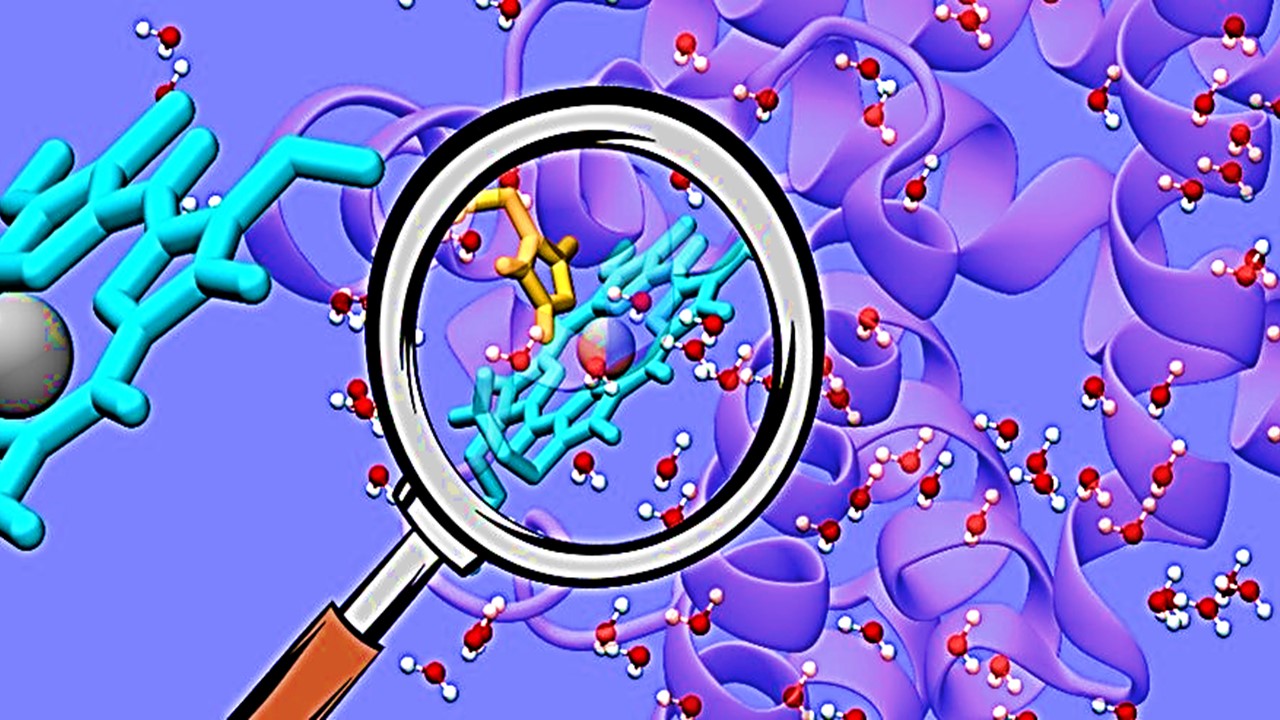

Synthetic Chemistry’s Potential in Deciphering Antimicrobial Peptides

The saga of antimicrobial peptides unfolds as a testament to scientific ingenuity and therapeutic resilience.

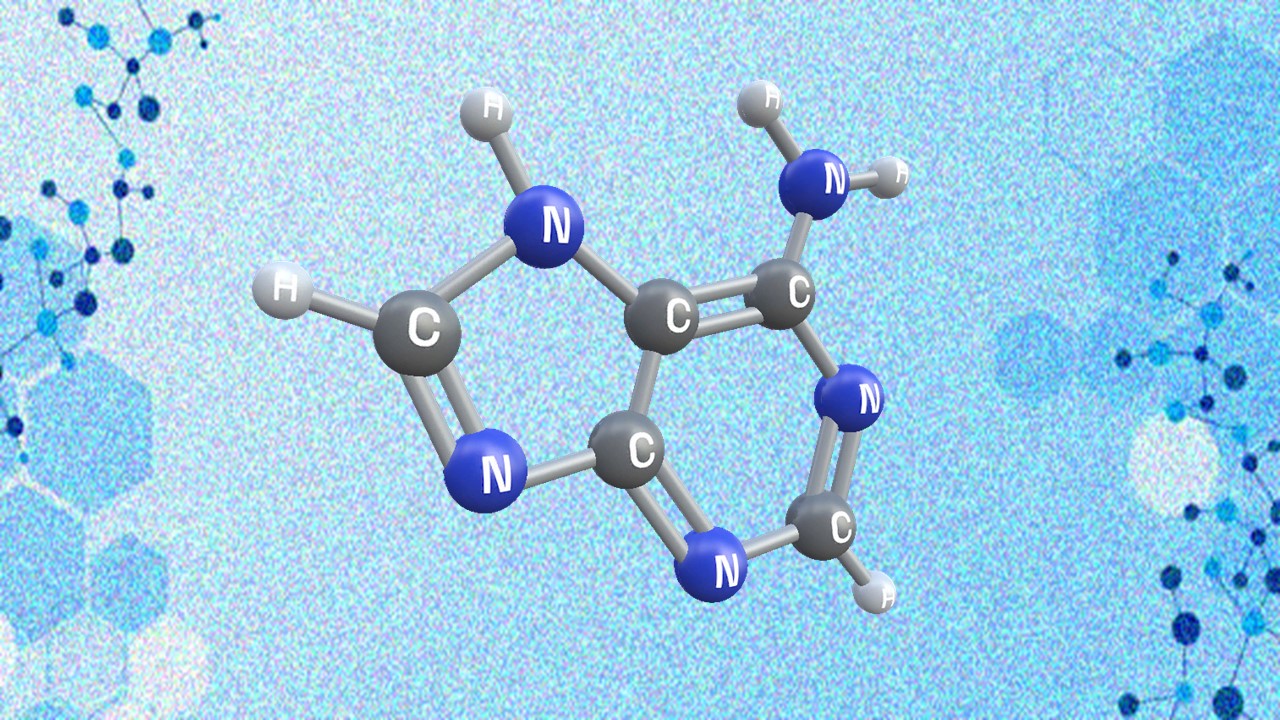

Appreciating the Therapeutic Versatility of the Adenine Scaffold: From Biological Signaling to Disease Treatment

Researchers are utilizing adenine analogs to create potent inhibitors and agonists, targeting vital cellular pathways from cancer to infectious diseases.