Reinforcement learning (RL), a branch of machine learning (ML), has emerged as a transformative force in the realm of drug discovery. Unlike supervised and unsupervised learning, which learn from a fixed dataset, reinforcement learning operates in dynamic environments and collects its own data through trial and error. By rewarding successful actions and penalizing missteps, reinforcement learning enables machines to fine-tune their decision-making processes. In this article, we delve into the fascinating world of reinforcement learning, with a specific focus on two pivotal algorithms: Q-learning and Monte Carlo tree search (MCTS). These algorithms have opened new avenues for creativity and innovation in the discovery and design of drug molecules, offering promising solutions to complex challenges in pharmacology and medicinal chemistry.

General Overview of RL

As mentioned, reinforcement learning is a subfield of machine learning, and hence of artificial intelligence (AI), that focuses on how agents can learn to make sequences of decisions in an environment in order to maximize a cumulative reward. It is inspired by behavioral psychology and aims to model how intelligent agents can learn to interact with their surroundings to achieve specific goals. RL is commonly used in tasks where the agent has to make a sequence of decisions over time, such as robotics, game playing, autonomous driving, and recommendation systems.

Important aspects of reinforcement learning are enumerated as follows:

Agent: In RL, an agent is the entity that interacts with the environment. The agent makes decisions and takes actions to achieve a certain goal.

Environment: The environment represents the external context in which the agent operates. It can be anything from a physical world (like a robot navigating a room) to a virtual environment (like a video game or a simulated world). The environment provides feedback to the agent in the form of rewards and state transitions.

State: A state is a representation of the current situation or configuration of the environment. It contains all the relevant information needed for the agent to make decisions. States can be discrete or continuous, depending on the problem.

Action: An action is a choice made by the agent that affects the state of the environment. Actions can be discrete (e.g., moving left or right) or continuous (e.g., controlling the speed of a motor).

Policy: A policy is a strategy or a set of rules that the agent uses to determine which action to take in a given state. The goal of RL is to learn an optimal policy that maximizes the expected cumulative reward over time.

Reward: A reward is a numerical signal that the environment provides to the agent after each action is taken. It quantifies how good or bad the agent’s action was in a particular state. The agent’s objective is to learn a policy that maximizes the cumulative reward over time.

Trajectory or Episode: In RL, an agent interacts with the environment over a series of steps, known as a trajectory or episode. Each episode starts from an initial state, and the agent takes actions to transition between states until a terminal state is reached, at which point the episode ends.

Value Function: The value function is a function that estimates the expected cumulative reward that an agent can achieve from a given state (or state-action pair) when following a particular policy. It helps the agent assess the desirability of states and actions.

Exploration vs. Exploitation: RL agents often face a trade-off between exploring unknown actions to discover better policies (exploration) and exploiting the current knowledge to maximize immediate rewards (exploitation). Balancing this trade-off is a fundamental challenge in RL.

Learning and Adaptation: In reinforcement learning, the agent’s goal is to learn from its interactions with the environment and adapt its policy over time to improve its decision-making.
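The concepts listed above fit together in a simple interaction loop. The following is a minimal sketch, not a drug-discovery model: `CorridorEnv`, `random_policy`, and `run_episode` are hypothetical names invented for illustration, and the environment is a toy corridor in which the agent walks left or right until it reaches a goal cell.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        # Every episode starts from the same initial state.
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1   # terminal state reached
        reward = 1.0 if done else -0.1         # small cost per step, bonus at the goal
        return self.state, reward, done


def random_policy(state):
    # A policy maps a state to an action; this one ignores the state entirely.
    return random.choice([0, 1])


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the trajectory and the cumulative reward."""
    state = env.reset()
    trajectory = []
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward))
        total_reward += reward
        state = next_state
        if done:
            break
    return trajectory, total_reward


trajectory, total_reward = run_episode(CorridorEnv(), random_policy)
print(len(trajectory), "steps, cumulative reward", round(total_reward, 2))
```

In a molecular setting, the state would more plausibly be a partially built molecule, the actions structural modifications, and the reward a predicted property score, but the loop itself keeps the same shape.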

Reinforcement learning algorithms, such as Q-learning, Policy Gradient methods, and Deep Reinforcement Learning (which combines RL with deep neural networks), are used to train agents to learn optimal policies in various complex and dynamic environments. RL has applications in robotics, game playing, natural language processing, recommendation systems, and many other fields where decision-making in an uncertain environment is required.

Q-Learning: Striking the Balance for Optimal Decision-Making

Q-learning, a prominent reinforcement learning algorithm, stands as a testament to the potential of this cutting-edge approach. It learns an estimate of how valuable each action is in each state (the Q-value) and refines that estimate from experience. At its core, Q-learning depends on a delicate equilibrium between ‘exploration’ and ‘exploitation.’ This balance allows the model to continue learning while maximizing rewards, a crucial trait in the intricate world of drug discovery.
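The balance between exploration and exploitation can be made concrete with tabular Q-learning and an epsilon-greedy policy. The sketch below is illustrative only: the corridor environment, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen for a toy problem, not settings taken from any drug-discovery study. It applies the standard update Q(s, a) ← Q(s, a) + α [r + γ max Q(s′, a′) − Q(s, a)].

```python
import random

N_STATES, GOAL = 5, 4     # hypothetical corridor: states 0..4, goal at state 4
ACTIONS = [0, 1]          # 0 = move left, 1 = move right


def step(state, action):
    """Toy transition function: returns (next_state, reward, done)."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def epsilon_greedy(q, state, epsilon, rng):
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)                    # explore: random action
    best = max(q[state])
    # Exploit: pick a highest-valued action, breaking ties at random.
    return rng.choice([a for a in ACTIONS if q[state][a] == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]         # Q-table: q[state][action]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 200:               # cap the episode length
            action = epsilon_greedy(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Terminal transitions have no future value to bootstrap from.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            steps += 1
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print("greedy action per non-terminal state:", greedy_policy)
```

Raising `epsilon` makes the agent try more untested actions at the cost of short-term reward, while lowering it makes the agent commit earlier to what it already believes is best.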

While computational intensity and the risk of settling on suboptimal results remain challenges, Q-learning has proven its mettle in the pursuit of optimized molecular structures and compound characteristics. It excels in multi-property optimization, in identifying relationships among compounds, and in exploring the vast molecular space for promising candidates. Its ability to adapt and evolve in response to ever-changing data positions Q-learning as a valuable asset in the quest for more effective drug molecules.

Monte Carlo Tree Search (MCTS): Navigating the Molecular Landscape

Complementing the prowess of Q-learning, Monte Carlo tree search (MCTS) emerges as another indispensable tool in the arsenal of drug discovery. MCTS, much like Q-learning, relies on the intricate dance between ‘exploration’ and ‘exploitation’ to make informed decisions in complex molecular environments.
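How MCTS trades off exploration and exploitation is easiest to see in code. The following is a minimal sketch of MCTS with the widely used UCT (UCB1) selection rule on a toy problem. Everything domain-specific here is a hypothetical stand-in: a "molecule" is a sequence of six binary fragment choices, and `score` is a placeholder for a real property predictor.

```python
import math
import random

DEPTH = 6            # hypothetical: a candidate is a sequence of 6 fragment choices
ACTIONS = (0, 1)     # two candidate fragments at each position


def score(seq):
    # Placeholder for a property predictor: the fraction of 1-fragments.
    return sum(seq) / DEPTH


class Node:
    def __init__(self, seq, parent=None):
        self.seq = seq           # fragments chosen so far
        self.parent = parent
        self.children = {}       # action -> child Node
        self.visits = 0
        self.total = 0.0         # sum of rollout scores seen through this node

    def is_terminal(self):
        return len(self.seq) == DEPTH

    def fully_expanded(self):
        return len(self.children) == len(ACTIONS)


def uct_child(node, c):
    # UCB1: average score (exploitation) plus a bonus for rarely visited children (exploration).
    return max(
        node.children.values(),
        key=lambda ch: ch.total / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def search(iterations=2000, c=1.4, seed=0):
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes using UCT.
        while not node.is_terminal() and node.fully_expanded():
            node = uct_child(node, c)
        # 2. Expansion: add one untried child.
        if not node.is_terminal():
            action = rng.choice([a for a in ACTIONS if a not in node.children])
            node.children[action] = Node(node.seq + (action,), parent=node)
            node = node.children[action]
        # 3. Simulation: complete the sequence with random choices.
        seq = list(node.seq)
        while len(seq) < DEPTH:
            seq.append(rng.choice(ACTIONS))
        reward = score(seq)
        # 4. Backpropagation: update statistics along the path to the root.
        while node is not None:
            node.visits += 1
            node.total += reward
            node = node.parent
    # Read out the most-visited path as the search result.
    node, best = root, []
    while node.children:
        action, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        best.append(action)
    return tuple(best)


best = search()
print("most-visited sequence:", best, "score:", round(score(best), 2))
```

The constant `c` controls the trade-off: larger values spread visits across more branches, while smaller values concentrate the search on branches that already look promising.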

Despite its computational intensity and the challenge of striking the right balance, MCTS has proven its mettle in navigating vast and intricate molecular landscapes. Its applications extend beyond the discovery and design of drug candidates: it can also be used to tailor drugs to specific targets. This level of customization holds immense promise in the pursuit of personalized medicine.

MCTS also shines in retrosynthetic planning, offering a systematic approach to deconstructing complex organic molecules into simpler precursors. By streamlining the planning of synthetic routes, MCTS helps chemists design and execute syntheses more efficiently. Moreover, MCTS enhances data mining in drug discovery, unveiling patterns and structures in vast datasets that would otherwise remain hidden.

A Promising Frontier in Drug Discovery

Reinforcement learning, with its dynamic nature and focus on reward-based learning, has opened up exciting avenues in drug discovery. Algorithms like Q-learning and Monte Carlo tree search have proven their worth by aiding in the discovery and optimization of molecular structures and compound characteristics. They excel in multi-property optimization, relationship identification among compounds, and the exploration of molecular space for potential candidates.

In the ever-evolving field of pharmacology and medicinal chemistry, the integration of reinforcement learning promises to revolutionize drug discovery, offering creative and innovative solutions. As computational power continues to advance, we can anticipate even more sophisticated applications of these algorithms, ultimately leading to the discovery of more effective drug molecules and novel therapeutic strategies. With Q-learning and MCTS at the helm, the future of drug discovery holds the promise of tailored medications, streamlined synthesis processes, and the revelation of hidden insights in vast datasets, a true testament to the transformative power of reinforcement learning in science and medicine.

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

AI, Data & Technology

The Power of Unsupervised Learning in Healthcare

In healthcare’s dynamic landscape, the pursuit of deeper insights and precision interventions is paramount, where unsupervised learning emerges as a potent tool for revealing hidden data structures.

AI, Data & Technology

Unlocking Intelligence: A Journey through Machine Learning

ML stands as a cornerstone of technological advancement, permeating various facets of our daily lives.

Read More Articles

Synthetic Chemistry’s Potential in Deciphering Antimicrobial Peptides

The saga of antimicrobial peptides unfolds as a testament to scientific ingenuity and therapeutic resilience.

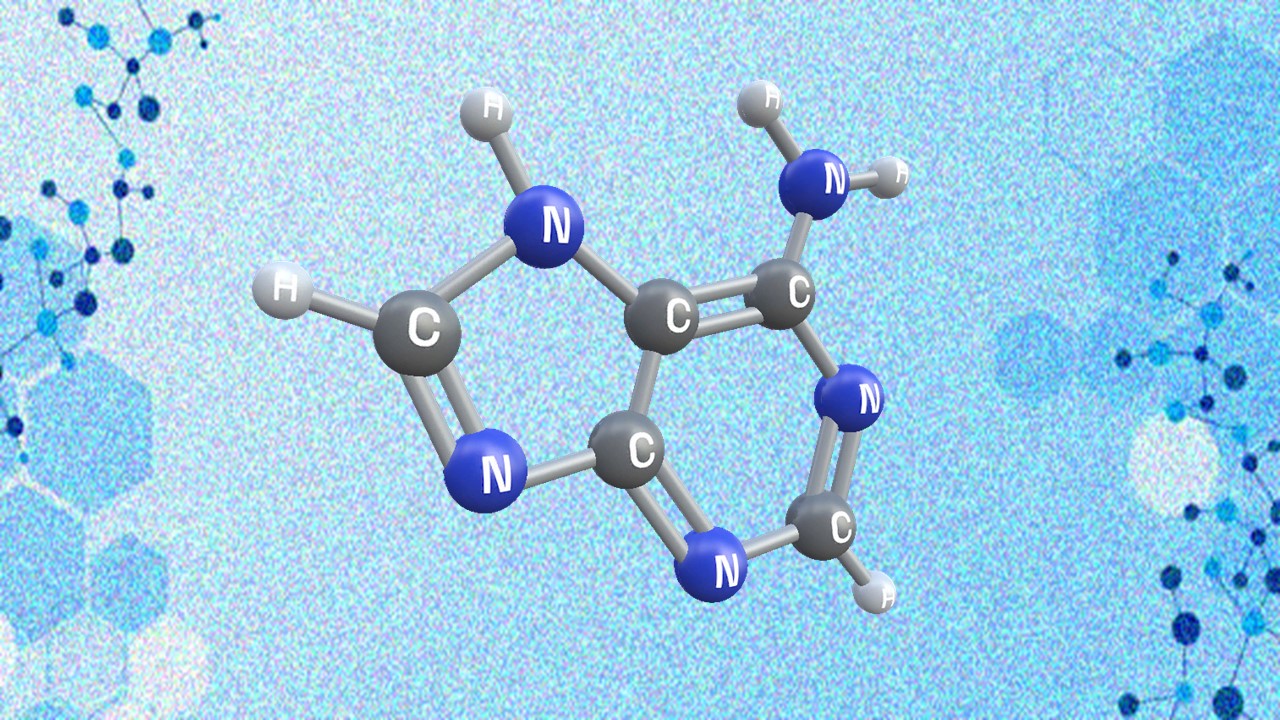

Appreciating the Therapeutic Versatility of the Adenine Scaffold: From Biological Signaling to Disease Treatment

Researchers are utilizing adenine analogs to create potent inhibitors and agonists, targeting vital cellular pathways from cancer to infectious diseases.