In target discovery, artificial intelligence (AI) is a transformative force that uses multi-model strategies to extend its capabilities. Central to this approach is the integration of diverse data types: omics data such as genomics, transcriptomics, and proteomics, along with text-based information from publications, clinical trials, and patents. By drawing on these complementary data sources, AI refines target lists so that they align with specific research objectives.

Harnessing the Power of Multi-Model Approaches

The evolution of AI in target discovery underscores the significance of harnessing diverse dimensions of information to unlock the complexities of disease biology and identify potential therapeutic targets. By integrating a multitude of data types, AI algorithms are able to unravel intricate molecular interactions and unveil novel avenues for intervention.

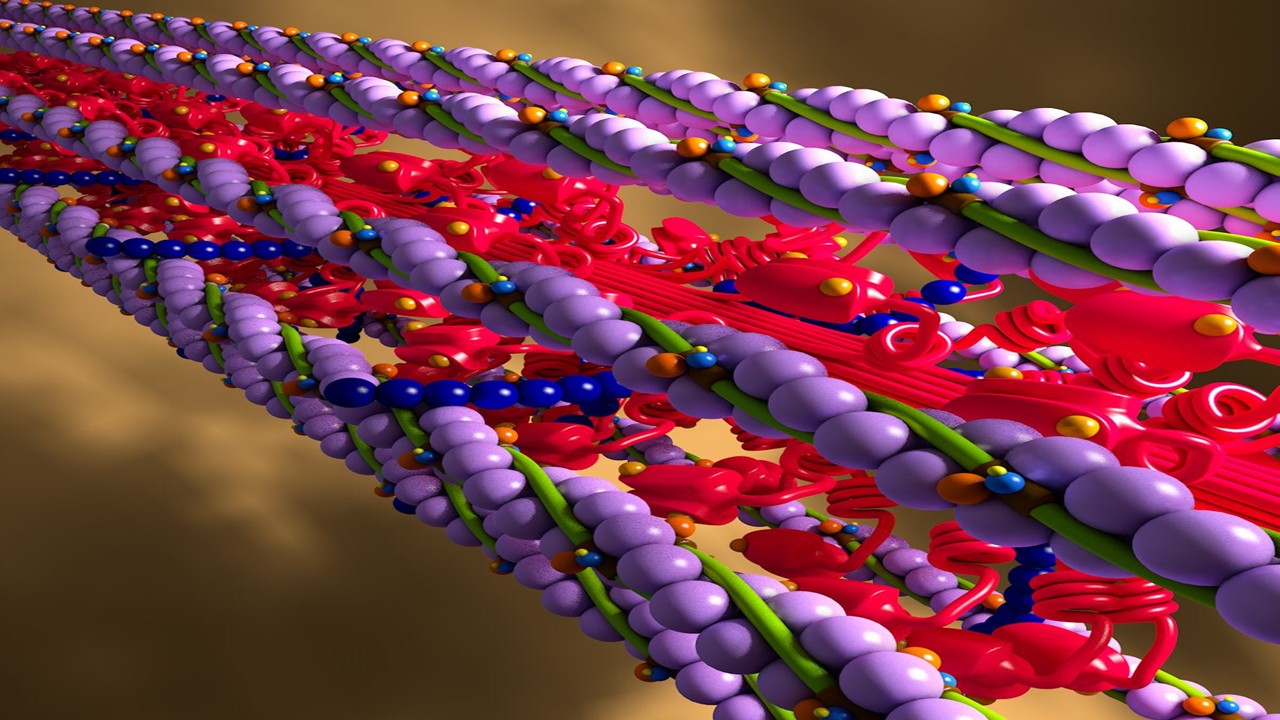

Omics Data: Illuminating Molecular Landscapes

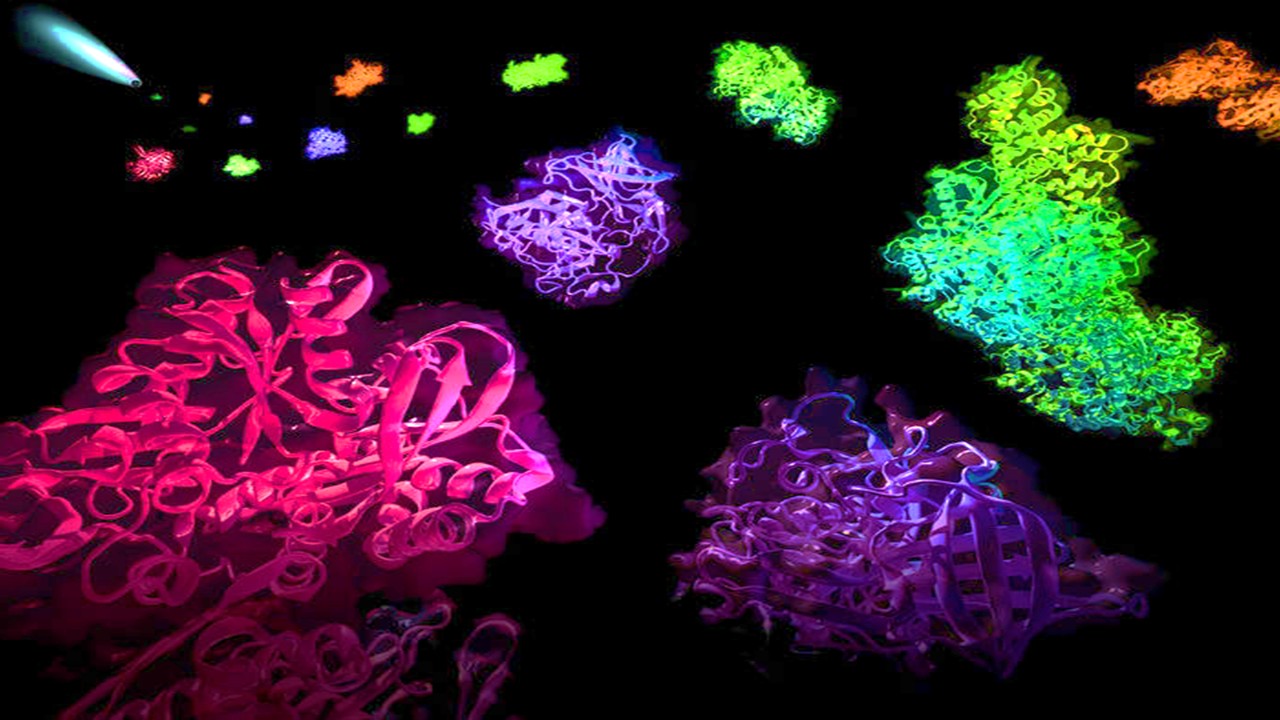

At the heart of this approach lies the integration of omics data, encompassing genomics, transcriptomics, proteomics, and more. Each of these layers of biological information offers a unique perspective on the molecular landscape of diseases. Genomics, for instance, reveals the genetic alterations that underlie disease susceptibility and progression. Transcriptomics delves into the expression patterns of genes, shedding light on which genes are active in diseased states. Proteomics provides insights into the proteins that are produced and their modifications, offering a deeper understanding of functional alterations. Other omics disciplines, such as epigenomics and metabolomics, contribute additional layers of information, further enriching the comprehension of disease mechanisms.

By integrating these omics dimensions, AI algorithms can decipher intricate patterns of molecular dysregulation. These patterns hold the potential to uncover key driver genes, signaling pathways, and critical protein-protein interactions that play pivotal roles in disease development. The holistic analysis of omics data offers a comprehensive view of the molecular intricacies, enabling the identification of nodes within the complex networks that can be targeted for therapeutic intervention.

Text-Based Data: Unveiling Insights from Literature

Complementing the omics data, text-based information extracted from a plethora of sources adds another layer of depth to the target discovery process. Scientific publications, clinical trial records, patents, and other textual resources capture the collective knowledge of the scientific community. AI algorithms excel in processing and mining these texts, connecting the dots between diseases, genes, molecular pathways, and therapeutic strategies.

Text-based data provide a panoramic view of disease mechanisms, treatment modalities, and emerging research trends. They enable researchers to stay abreast of the latest developments, insights, and findings in a specific disease area. By analyzing the relationships and associations within the textual data, AI algorithms can identify hidden connections, propose novel hypotheses, and uncover potential therapeutic targets that might have been overlooked.

Synergy for Comprehensive Target Discovery

The convergence of omics data and text-based information brings forth a synergistic approach to target discovery. While omics data lay the foundation for understanding molecular mechanisms, text-based data contextualize the findings within the broader scientific landscape. AI bridges these dimensions, allowing researchers to explore the interplay between genetic alterations, protein expression changes, and the wealth of knowledge embedded in scientific literature.

This multidimensional approach not only enriches the understanding of disease biology but also enhances the efficiency of target discovery. By leveraging both omics and text-based data, AI algorithms optimize the selection of potential targets that are not only biologically relevant but also supported by substantial evidence from various sources. Ultimately, this synergy holds the promise of accelerating the translation of scientific insights into actionable therapeutic interventions, contributing to the advancement of precision medicine and improved patient care.
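One simple way to picture this selection step is a weighted combination of two evidence scores per candidate. The sketch below is illustrative only: the `Candidate` class, the `rank_targets` function, the placeholder gene names, and the score values are all assumptions made for the example, and a plain weighted average stands in for whatever scoring a real platform uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    gene: str
    omics_score: float  # hypothetical omics-derived evidence, scaled to [0, 1]
    text_score: float   # hypothetical literature-derived evidence, scaled to [0, 1]


def rank_targets(candidates, omics_weight=0.5):
    """Sort candidates by a weighted average of omics and text evidence."""
    if not 0.0 <= omics_weight <= 1.0:
        raise ValueError("omics_weight must be between 0 and 1")
    text_weight = 1.0 - omics_weight

    def combined(candidate):
        return (omics_weight * candidate.omics_score
                + text_weight * candidate.text_score)

    return sorted(candidates, key=combined, reverse=True)


# Placeholder names and made-up scores, for illustration only.
candidates = [
    Candidate("GENE_A", omics_score=0.9, text_score=0.2),
    Candidate("GENE_B", omics_score=0.6, text_score=0.7),
    Candidate("GENE_C", omics_score=0.3, text_score=0.4),
]

ranked = [c.gene for c in rank_targets(candidates)]
print(ranked)
```

Shifting `omics_weight` toward 1.0 favors candidates with strong molecular evidence but little literature support, while shifting it toward 0.0 favors well-documented candidates. That choice mirrors the trade-off between biological relevance and published evidence described above.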

Empowering Biomedical Text Mining with Large Language Models

Within biomedical research, the emergence of large language models has raised the profile of biomedical text mining. These models, with BioGPT and ChatPandaGPT as examples, draw on a large body of text from publications, research papers, medical reports, and other scientific literature. This breadth of knowledge across many domains of biomedicine allows them to parse and interpret natural language with a high degree of precision.

Textual Enrichment: A Vast Pool of Knowledge

The foundation of these large language models is the colossal corpus of text data they have been trained on. This corpus encompasses a diverse array of sources, including peer-reviewed research articles, clinical studies, case reports, and patent documents. As a result, these models encapsulate a comprehensive cross-section of scientific discourse and findings, collectively representing the state of the art across various fields of biology, medicine, and healthcare.

Natural Language Processing: Unveiling Complex Connections

The pivotal capability that distinguishes these language models is their adeptness at natural language processing (NLP). Through advanced NLP techniques, they can interpret, decipher, and glean meaningful insights from human language, including the intricate terminologies and jargon specific to the biomedical domain. Armed with this linguistic prowess, these models are primed to unravel the interwoven connections between diverse biomedical entities.

Accelerating Link Discovery: Disease, Genes, and Beyond

In the context of target discovery and disease understanding, these large language models function as accelerators, rapidly sifting through vast textual repositories to discern the relationships between diseases, genes, biological processes, and various molecular components. They excel at recognizing the subtle nuances that characterize these connections, identifying not only direct links but also indirect associations and potential intersections.
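A far simpler technique than a large language model, sentence-level co-occurrence counting, gives a feel for what "discerning relationships" means at its most basic. The sketch below is a toy under stated assumptions: the function name `cooccurrence_counts`, the placeholder entity names, and the example sentences are invented for illustration, and exact token matching stands in for real biomedical entity recognition.

```python
import re
from collections import Counter
from itertools import product


def cooccurrence_counts(sentences, diseases, genes):
    """Count how often each (disease, gene) pair appears in the same sentence."""
    counts = Counter()
    for sentence in sentences:
        # Lowercased word tokens; underscores are kept so placeholder names survive.
        tokens = set(re.findall(r"[a-z0-9_]+", sentence.lower()))
        found_diseases = [d for d in diseases if d.lower() in tokens]
        found_genes = [g for g in genes if g.lower() in tokens]
        for pair in product(found_diseases, found_genes):
            counts[pair] += 1
    return counts


# Invented sentences with placeholder entities, for illustration only.
sentences = [
    "GENE_A is overexpressed in disease_x samples.",
    "Knockdown of GENE_A slowed disease_x progression; GENE_B was unaffected.",
    "GENE_B variants were reported in disease_y.",
]

counts = cooccurrence_counts(
    sentences,
    diseases=["disease_x", "disease_y"],
    genes=["GENE_A", "GENE_B"],
)
for (disease, gene), n in counts.most_common():
    print(disease, gene, n)
```

The second sentence shows the weakness of raw co-occurrence: GENE_B is counted alongside disease_x even though the sentence says it was unaffected. Telling such cases apart requires understanding the sentence itself, which is the kind of nuance the language models described above are meant to capture.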

Aiding Hypothesis Generation: From Data to Insights

Researchers often grapple with the formidable task of navigating the ever-expanding body of scientific literature to identify relevant insights and formulate hypotheses. Large language models serve as invaluable allies in this pursuit. They efficiently synthesize information from diverse sources, facilitating the extraction of knowledge that may have remained concealed amidst the abundance of text.

Catalyzing Discovery: A Transformative Influence

The impact of large language models on biomedical text mining transcends mere efficiency gains. These models amplify the scope of scientific inquiry by enabling researchers to explore intricate relationships and connections on an unprecedented scale. By rapidly uncovering hidden links between diseases and genes or elucidating the molecular underpinnings of complex biological processes, these models expedite the journey from raw data to actionable insights.

Empowering Precision Medicine: Unveiling Therapeutic Avenues

In the pursuit of personalized medicine and targeted therapies, identifying disease mechanisms and potential therapeutic interventions is of paramount importance. Large language models can steer research efforts in these directions by rapidly pinpointing relevant studies, surfacing candidate genes, and placing them in the context of the broader scientific literature.

In essence, the ability of large language models to swiftly retrieve information from the vast expanse of scientific literature is a pivotal force in modern biomedical research. It expedites the journey from data to insights, enables hypothesis generation, and unveils hidden connections that hold the potential to transform the fields of disease understanding, drug discovery, and personalized medicine.

Navigating Ethical Frontiers and Limitations

While large language models offer a powerful tool for accelerating target discovery, their application is not without its challenges. These challenges stem from the inherent nature of these models, their reliance on human-generated text data, and their potential limitations in identifying truly novel therapeutic targets. As we delve deeper into the complexities of AI-driven target discovery, it becomes evident that a balanced perspective is necessary to fully harness their potential.

Biases in the Stream: Propagation of Preconceived Notions

One of the most critical challenges lies in the possibility of unintentionally propagating biases present in the original texts. Large language models are trained on vast amounts of human-generated data, which includes scientific literature and medical reports. These texts may inadvertently contain biases, inaccuracies, and preconceived notions that have been historically embedded in medical discourse. When AI algorithms process such data, they run the risk of perpetuating these biases in their generated insights.

The Risk of Reinforcing Inequality

The perpetuation of biases through AI-generated insights can have significant real-world implications. Biases already present in medical literature, such as gender, racial, or socioeconomic biases, can be inadvertently reinforced, leading to disparities in research and healthcare. For example, if historical data disproportionately represents certain population groups, AI-generated insights might prioritize certain diseases or conditions over others, potentially neglecting the health needs of marginalized populations.

The Hunt for Novelty: Limitations in Identifying Truly Novel Targets

While large language models excel at processing and synthesizing existing knowledge, they may struggle to identify genuinely novel therapeutic targets that lie beyond current scientific understanding. AI models are trained on the text available up to their training cutoff, which means they may not recognize emerging trends, discoveries, or connections that have yet to be published. This limitation raises questions about how far AI-generated insights can truly push the envelope of innovation.

Striking a Balance: Embracing the Power and Limitations

In their pursuit of rapid hypothesis generation and insight extraction, large language models undoubtedly contribute to the acceleration of research. They serve as valuable tools for synthesizing existing knowledge, identifying established relationships, and proposing hypotheses based on available information. However, it is essential to recognize the boundaries of their capabilities and acknowledge that true breakthroughs might still arise from human ingenuity and exploration beyond the confines of existing literature.

A Collaborative Approach: Navigating Biases and Expanding Horizons

Mitigating the challenges associated with biases and limitations requires a collaborative effort. Researchers, AI developers, and domain experts must work hand in hand to ensure that AI-generated insights are critically evaluated, cross-validated with diverse sources, and subjected to rigorous peer review. Transparent and interpretable AI models can help uncover potential biases and shed light on how decisions are being made.

Fostering Ethical AI in Biomedicine

Addressing these challenges also necessitates a commitment to ethical AI development. It involves scrutinizing the training data for biases, continuously refining AI models, and striving for transparency in how AI-generated insights are produced. Moreover, by actively involving underrepresented populations and diverse stakeholders in the AI development process, the risk of perpetuating biases can be reduced, and AI-driven research can become more inclusive and equitable.

Conclusion

AI’s optimization of target discovery through multi-model approaches depends on balancing diverse data dimensions. By synthesizing omics and text-based information, AI achieves a synergy that refines the selection of potential therapeutic targets. Large language models make a substantial contribution through biomedical text mining, accelerating the pace at which disease mechanisms are uncovered. However, the risks of propagating biases and of missing entirely novel targets underscore the need for a balanced approach, in which AI-driven insights are combined with domain expertise and other cutting-edge techniques.

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE
