Decoding Drug Discovery: AI as the Architect of Molecular Innovation

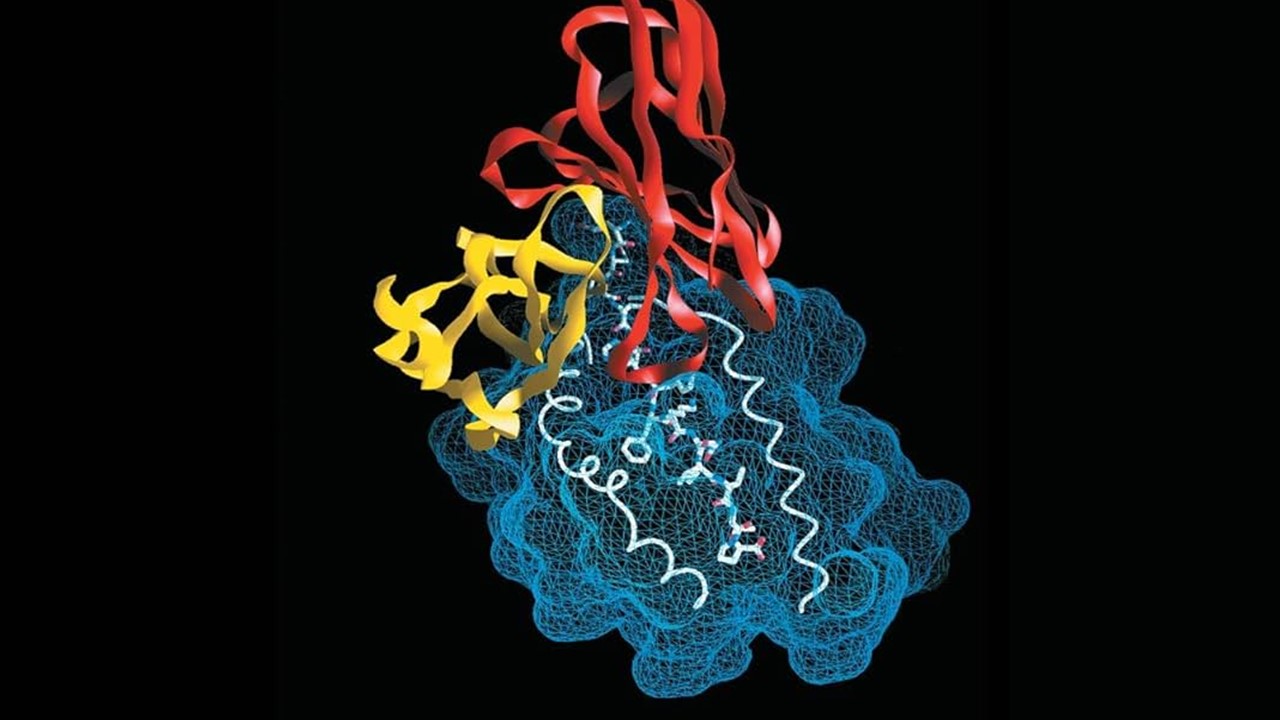

The integration of artificial intelligence into drug discovery has redefined the identification and optimization of therapeutic candidates. Machine learning algorithms analyze vast datasets of chemical structures, genomic sequences, and protein interactions to predict novel drug targets with high specificity. Tools like AlphaFold use deep neural networks to predict three-dimensional protein structures from amino acid sequences, supporting the structure-based design of inhibitors for targets long considered undruggable, such as mutant KRAS in cancer. Generative adversarial networks (GANs) iteratively propose candidate molecules and refine them toward favorable pharmacokinetic properties, prioritizing candidates that balance efficacy and safety. These systems reduce reliance on serendipity, transforming drug discovery from a high-risk gamble into a data-driven engineering discipline.

AI also accelerates preclinical toxicity screening by simulating metabolic pathways and off-target interactions. Platforms like DeepTox evaluate compound libraries for hepatotoxicity or cardiotoxicity, flagging high-risk molecules before costly in vivo testing. However, challenges persist: models trained on historical data may overlook novel biological mechanisms, necessitating hybrid approaches that merge AI predictions with experimental validation. Ethical considerations arise around intellectual property and transparency, as opaque “black-box” algorithms complicate accountability in target selection. Collaborative frameworks between computational biologists and pharmacologists are critical to align AI-driven innovations with clinical relevance.

The repurposing of existing drugs for new indications exemplifies AI’s transformative potential. Knowledge graphs mapping relationships between genes, diseases, and compounds identified baricitinib as a candidate for COVID-19 treatment by analyzing viral entry mechanisms. Such approaches streamline therapeutic development during public health crises, though validation in randomized trials remains essential. As AI democratizes access to target discovery, smaller labs and startups can compete with pharmaceutical giants, fostering a more equitable innovation landscape.
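The knowledge-graph approach described above can be sketched as a path search from drug nodes to a disease node. This is a minimal illustration, assuming a toy graph stored as a Python dictionary. Every node name (`drug:D1`, `protein:P1`, and so on) and both helper functions are hypothetical placeholders, not curated biomedical data and not the method used to identify baricitinib.

```python
from collections import deque

# Toy knowledge graph: all nodes and edges are hypothetical placeholders.
EDGES = {
    "drug:D1": ["protein:P1"],
    "drug:D2": ["protein:P2"],
    "protein:P1": ["process:viral_entry"],
    "protein:P2": ["process:inflammation"],
    "process:viral_entry": ["disease:X"],
    "process:inflammation": ["disease:Y"],
}


def find_path(graph, start, goal):
    """Breadth-first search: shortest directed path from start to goal, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def repurposing_candidates(graph, disease):
    """Map each drug that reaches the disease to the path that links them."""
    hits = {}
    for node in graph:
        if node.startswith("drug:"):
            path = find_path(graph, node, disease)
            if path is not None:
                hits[node] = path
    return hits


if __name__ == "__main__":
    for drug, path in repurposing_candidates(EDGES, "disease:X").items():
        print(drug, "->", " -> ".join(path[1:]))
```

Returning the linking path, not just the drug name, keeps each suggestion inspectable: a pharmacologist can check whether every hop is biologically plausible before any candidate moves toward a trial.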

Despite progress, AI cannot yet replicate the nuanced intuition of medicinal chemists. Human oversight ensures that computational predictions align with biological plausibility, avoiding overreliance on algorithmic outputs. The future lies in synergistic partnerships, with AI as a hypothesis generator and humans as validators, bridging computational speed with scientific rigor.

Clinical Trials Reimagined: AI-Driven Recruitment, Monitoring, and Analysis

AI is revolutionizing clinical trial design by optimizing protocols to enhance efficiency and inclusivity. Machine learning models analyze historical trial data to refine eligibility criteria, avoiding overly restrictive parameters that delay recruitment or exclude underrepresented demographics. For example, reinforcement learning dynamically adjusts chemotherapy regimens in oncology trials, balancing efficacy and toxicity based on real-time patient responses. Such adaptive designs reduce trial durations and improve outcomes by personalizing interventions within study frameworks.

Patient recruitment is accelerated through natural language processing (NLP), which scans electronic health records (EHRs) to identify candidates matching trial requirements. IBM Watson demonstrated this capability in breast cancer trials, achieving screening accuracy comparable to manual methods. AI also addresses rare diseases by mining global databases to locate eligible participants, mitigating recruitment bottlenecks. Post-enrollment, wearable devices and mobile apps monitor adherence, using computer vision to confirm medication ingestion and track physiological responses in real time.

Real-time safety monitoring via AI minimizes adverse event risks. Algorithms detect subtle biomarker shifts or drug interactions, alerting investigators to intervene proactively. Post-trial, AI meta-analyzers synthesize data across studies, uncovering heterogeneous treatment effects obscured by conventional statistical methods. However, AI-enriched trials risk bias if models prioritize high-event-rate populations, skewing generalizability. Regulatory bodies now mandate transparency in AI-driven trial design to ensure findings reflect real-world applicability.

Ethical challenges include data privacy and informed consent, particularly when AI processes sensitive genomic or biometric data. Robust encryption and federated learning techniques, which train models on decentralized datasets, mitigate these risks. The future envisions AI not only streamlining trials but also democratizing participation, ensuring diverse representation in clinical research.
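The federated learning idea mentioned above can be sketched with federated averaging: each site takes a training step on its own data, and only the resulting model weights are shared and averaged. This is a minimal sketch, assuming a one-variable linear model and invented toy data. The function names and the choice of one local step per round are illustrative assumptions, not a description of any particular trial platform.

```python
def local_update(weights, data, lr):
    """One gradient-descent step for y ~ w*x + b on a single site's private data."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(site_weights, site_sizes):
    """Average site models, weighting each site by its number of records."""
    total = sum(site_sizes)
    w = sum(sw[0] * n for sw, n in zip(site_weights, site_sizes)) / total
    b = sum(sw[1] * n for sw, n in zip(site_weights, site_sizes)) / total
    return (w, b)


def train(sites, rounds=2000, lr=0.05):
    """Coordinator loop: raw records never leave a site, only weights do."""
    weights = (0.0, 0.0)
    sizes = [len(data) for data in sites]
    for _ in range(rounds):
        updates = [local_update(weights, data, lr) for data in sites]
        weights = federated_average(updates, sizes)
    return weights


if __name__ == "__main__":
    # Invented data from y = 2x + 1, split across two hypothetical sites.
    site_a = [(0.0, 1.0), (1.0, 3.0)]
    site_b = [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]
    print(train([site_a, site_b]))
```

Sharing weights instead of records reduces exposure but does not by itself guarantee privacy, since model updates can still leak information. That is why the approach is usually combined with encryption or added noise.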

Precision Medicine: Tailoring Therapies with Pharmacogenomic AI

AI’s capacity to decode genetic variability is ushering in an era of hyper-personalized therapeutics. Machine learning models predict individual responses to drugs by analyzing polymorphisms in metabolizing enzymes like CYP2D6, which is involved in the metabolism of roughly 20% of marketed medications. Convolutional neural networks assess haplotype function, guiding dose adjustments for tamoxifen in breast cancer patients to minimize recurrence risks. These tools move beyond one-size-fits-all dosing, optimizing regimens based on genetic, demographic, and clinical variables.

Reinforcement learning algorithms personalize dosing for narrow-therapeutic-index drugs such as vancomycin or warfarin. Trained on EHR data, these models adjust doses in real time using renal function, serum levels, and comorbidities, reducing toxicity while maintaining efficacy. In transplantation, AI predicts immunosuppressant AUC metrics more accurately than Bayesian methods, tailoring tacrolimus regimens to individual metabolic profiles. Such precision mitigates graft rejection and adverse effects, improving long-term outcomes.
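For context on the AUC metric mentioned above, the reference value that such models try to estimate is usually computed from sampled drug concentrations with the linear trapezoidal rule. This is a minimal sketch of that arithmetic. The function name, units, and sample values are illustrative assumptions, not data from any study.

```python
def auc_trapezoid(times_h, concs):
    """Area under the concentration-time curve by the linear trapezoidal rule.

    times_h: sampling times in hours, strictly increasing.
    concs:   concentrations at those times (for example ng/mL).
    Returns the AUC in concentration x hours (for example ng*h/mL).
    """
    if len(times_h) != len(concs) or len(times_h) < 2:
        raise ValueError("need at least two paired time/concentration points")
    points = list(zip(times_h, concs))
    total = 0.0
    for (t1, c1), (t2, c2) in zip(points, points[1:]):
        if t2 <= t1:
            raise ValueError("times must be strictly increasing")
        # Each interval contributes the area of one trapezoid.
        total += (t2 - t1) * (c1 + c2) / 2
    return total


if __name__ == "__main__":
    # Invented example profile, not patient data.
    print(auc_trapezoid([0, 1, 2, 4], [5, 15, 10, 6]))
```

A full profile like this needs many blood draws, which is why predictive models that estimate the same quantity from a few samples are attractive in practice.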

AI also navigates polypharmacy in multimorbid patients, a population often excluded from trials. Random forest models stratify heart failure cohorts into clusters with distinct responses to beta-blockers or ACE inhibitors, enabling targeted therapies. These advancements address the “evidence gap” between homogeneous trial populations and heterogeneous real-world patients. Ethical dilemmas arise in data ownership and consent, particularly when AI requires genomic data. Ensuring equitable access to precision medicine is critical to prevent exacerbating healthcare disparities.

Future systems may integrate real-time biosensor data with AI predictions, enabling dynamic dose adjustments for chronic conditions like diabetes or hypertension. However, clinician trust remains a barrier; interpretable models and rigorous validation are essential to foster adoption.

Pharmacovigilance and Toxicology: AI as a Sentinel for Drug Safety

AI has transformed pharmacovigilance by detecting adverse drug reactions (ADRs) from disparate, unstructured data sources. Natural language processing (NLP) algorithms mine EHR narratives, social media, and regulatory databases like the UK Yellow Card Scheme to identify emerging safety signals. For instance, AI flagged cardiovascular risks associated with COX-2 inhibitors years before traditional surveillance methods, demonstrating its predictive power. These systems analyze temporal and contextual patterns to distinguish causal ADRs from coincidental correlations, though false positives remain a challenge.
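Once reports have been extracted and coded, one conventional way to screen for a safety signal is a disproportionality measure such as the proportional reporting ratio (PRR). The sketch below is a classical statistical baseline, not the NLP systems described above, and is included only to show what "flagging a signal" can mean numerically. The function names, the decision rule (at least three cases and a lower confidence bound above 1), and the counts are assumptions for illustration.

```python
import math


def prr_with_ci(a, b, c, d, z=1.96):
    """Proportional reporting ratio with an approximate 95% confidence interval.

    a: reports with the drug and the event
    b: reports with the drug, without the event
    c: reports with other drugs and the event
    d: reports with other drugs, without the event
    """
    if a <= 0 or c <= 0 or b < 0 or d < 0:
        raise ValueError("a and c must be positive; b and d non-negative")
    prr = (a / (a + b)) / (c / (c + d))
    # Standard error of ln(PRR), as for a ratio of two proportions.
    se = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))
    lower = math.exp(math.log(prr) - z * se)
    upper = math.exp(math.log(prr) + z * se)
    return prr, lower, upper


def is_signal(a, b, c, d, min_cases=3):
    """Hypothetical screening rule: enough cases and lower CI bound above 1."""
    _, lower, _ = prr_with_ci(a, b, c, d)
    return a >= min_cases and lower > 1.0


if __name__ == "__main__":
    # Invented counts for illustration only.
    print(prr_with_ci(20, 80, 100, 9800))
    print(is_signal(20, 80, 100, 9800))
```

A flagged ratio indicates disproportionate reporting, not causation, so it is a prompt for clinical review and not a conclusion.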

In clinical toxicology, AI classifies toxidromes (such as anticholinergic or opioid overdoses) from clinical notes, aiding emergency decision-making. Rule-based models differentiate toxidromes with accuracy nearing that of human clinicians, though they currently serve as adjuncts rather than replacements. Future systems may integrate biosensor data to predict toxicity trajectories, enabling preemptive interventions. AI also enhances poison control by predicting drug interactions in polypharmacy patients, reducing hospitalization rates.

Challenges include variability in EHR documentation and noise in social media data. Robust validation frameworks are essential to ensure AI models generalize across healthcare systems. Ethical considerations demand transparency in signal detection methodologies to avoid unjustified drug withdrawals or stigmatization.

Regulatory agencies now employ AI to analyze post-marketing surveillance data, identifying rare or long-term ADRs missed in trials. This proactive approach balances drug innovation with patient safety, though human oversight remains critical to interpret AI findings within clinical contexts.

Ethical Imperatives: Navigating Bias, Privacy, and Accountability

The deployment of AI in clinical pharmacology necessitates rigorous ethical frameworks to mitigate bias, ensure privacy, and clarify accountability. Algorithmic bias arises when models are trained on non-representative data, for example genomic datasets skewed toward European populations. Such models underperform in more diverse cohorts and perpetuate healthcare disparities, as seen in AI tools that underestimate cardiovascular risks in Black patients. Mitigation strategies include federated learning, which trains models on decentralized data without compromising privacy, and audit frameworks to monitor performance across demographics.
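A demographic audit of the kind described above can be as simple as computing the same metric separately for each group and flagging large gaps. This is a minimal sketch, assuming binary labels and sensitivity (true-positive rate) as the audited metric. The record layout, function names, and the 0.1 gap threshold are hypothetical choices, not an established standard.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Per-group sensitivity from (group, y_true, y_pred) records with 0/1 labels."""
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def flag_gaps(sensitivities, max_gap=0.1):
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    best = max(sensitivities.values())
    return sorted(g for g, s in sensitivities.items() if best - s > max_gap)


if __name__ == "__main__":
    # Invented predictions for two hypothetical groups.
    records = (
        [("A", 1, 1)] * 4
        + [("B", 1, 1)] * 2
        + [("B", 1, 0)] * 2
        + [("A", 0, 0), ("B", 0, 1)]
    )
    scores = sensitivity_by_group(records)
    print(scores, flag_gaps(scores))
```

Sensitivity is only one lens. A fuller audit would also compare calibration and false-positive rates, since a model can look balanced on one metric and skewed on another.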

Data privacy is paramount when handling sensitive genetic or biometric information. Differential privacy techniques limit what can be inferred about any individual by adding calibrated statistical noise to query results or model updates, while homomorphic encryption allows computation on encrypted data. These methods protect patient confidentiality but cost some accuracy or require computational resources that may limit scalability. Regulatory compliance with GDPR and HIPAA is non-negotiable, though global harmonization of standards remains elusive.
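The noise-adding idea behind differential privacy can be illustrated with the Laplace mechanism applied to a counting query. This is a minimal sketch using only the standard library. The function names and the example cohort are assumptions, and a production system would rely on a vetted privacy library, not hand-rolled sampling.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of values satisfying predicate.

    A count changes by at most 1 when one person is added or removed
    (sensitivity 1), so Laplace noise with scale 1/epsilon is used.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = range(100)  # invented cohort: one patient per age 0..99
    print(private_count(ages, lambda age: age < 30, epsilon=0.5))
```

The trade-off is visible in the `epsilon` parameter: each released answer is individually blurred, while averages over many patients remain close to the truth.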

Accountability in AI-driven decisions is complicated by the opacity of deep learning models. Explainable AI (XAI) techniques, such as saliency maps or feature attribution, demystify neural network decisions, fostering clinician trust. Legal liability remains contentious: when an AI dosing algorithm errs, responsibility may fall on clinicians, developers, or regulators. Clear guidelines are needed to delineate roles and ensure patient recourse.

Ethical AI deployment also demands inclusivity in development teams. Diverse perspectives prevent blind spots in model design, ensuring tools address global health needs rather than privileging high-resource settings. Public engagement initiatives educate patients about AI’s role in their care, building trust through transparency.

The Road Ahead: Human-AI Synergy and Continuous Learning

The future of clinical pharmacology hinges on symbiotic human-AI collaboration, where algorithms augment rather than replace clinician expertise. “Human-in-the-loop” systems integrate AI predictions with practitioner judgment, particularly in complex cases like polypharmacy or rare diseases. For example, AI could propose anticoagulation regimens that clinicians then adjust based on patient preferences or comorbidities, balancing algorithmic precision with human empathy.

Continuous learning systems, which update models in real time using incoming data, address “concept drift” caused by evolving clinical practices. However, these systems risk embedding new biases if not rigorously monitored. AI “vigilance” frameworks, akin to pharmacovigilance, will track model performance post-deployment, flagging degradations for recalibration.
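The post-deployment monitoring described above can be sketched as a rolling comparison between recent prediction error and the error measured at validation time. This is a minimal sketch. The class name, window size, and tolerance factor are hypothetical, and real monitoring would add statistical tests and per-subgroup tracking.

```python
from collections import deque


class DriftMonitor:
    """Flags possible drift when the rolling mean absolute error exceeds
    a multiple of the baseline error established at validation time."""

    def __init__(self, baseline_error, window=50, tolerance=1.5):
        if baseline_error <= 0:
            raise ValueError("baseline_error must be positive")
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.errors = deque(maxlen=window)  # keeps only the most recent errors

    def update(self, predicted, observed):
        """Record one prediction/outcome pair; return True if drift is flagged."""
        self.errors.append(abs(predicted - observed))
        if len(self.errors) < self.errors.maxlen:
            return False  # not enough recent data to judge yet
        rolling = sum(self.errors) / len(self.errors)
        return rolling > self.baseline_error * self.tolerance


if __name__ == "__main__":
    monitor = DriftMonitor(baseline_error=1.0, window=5)
    for predicted, observed in [(10, 11)] * 5 + [(10, 13)] * 5:
        print(monitor.update(predicted, observed))
```

A flag from such a monitor is a trigger for human review and recalibration. Retraining automatically on drifted data is one of the ways new biases can become embedded.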

Education is pivotal to this transition. Medical curricula must embed AI literacy, teaching clinicians to critically evaluate tools and advocate for equitable deployment. Professional societies are developing certification programs to standardize competency, ensuring practitioners harness AI responsibly.

Ultimately, AI’s success depends on trust. Transparent models, ethical governance, and inclusive design will ensure AI-driven pharmacology benefits all patients, heralding an era of safer, more precise, and universally accessible therapeutics.

Study DOI: https://doi.org/10.3389/jpps.2024.12671

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

Medicinal Chemistry & Pharmacology

Aerogel Pharmaceutics Reimagined: How Chitosan-Based Aerogels and Hybrid Computational Models Are Reshaping Nasal Drug Delivery Systems

Simulating with precision and formulating with insight, the future of pharmacology becomes not just predictive but programmable, one cell at a time.

Medicinal Chemistry & Pharmacology

Coprocessed for Compression: Reengineering Metformin Hydrochloride with Hydroxypropyl Cellulose via Coprecipitation for Direct Compression Enhancement

In manufacturing, minimizing granulation lines, drying tunnels, and multiple milling stages reduces equipment costs, process footprint, and energy consumption.

Medicinal Chemistry & Pharmacology

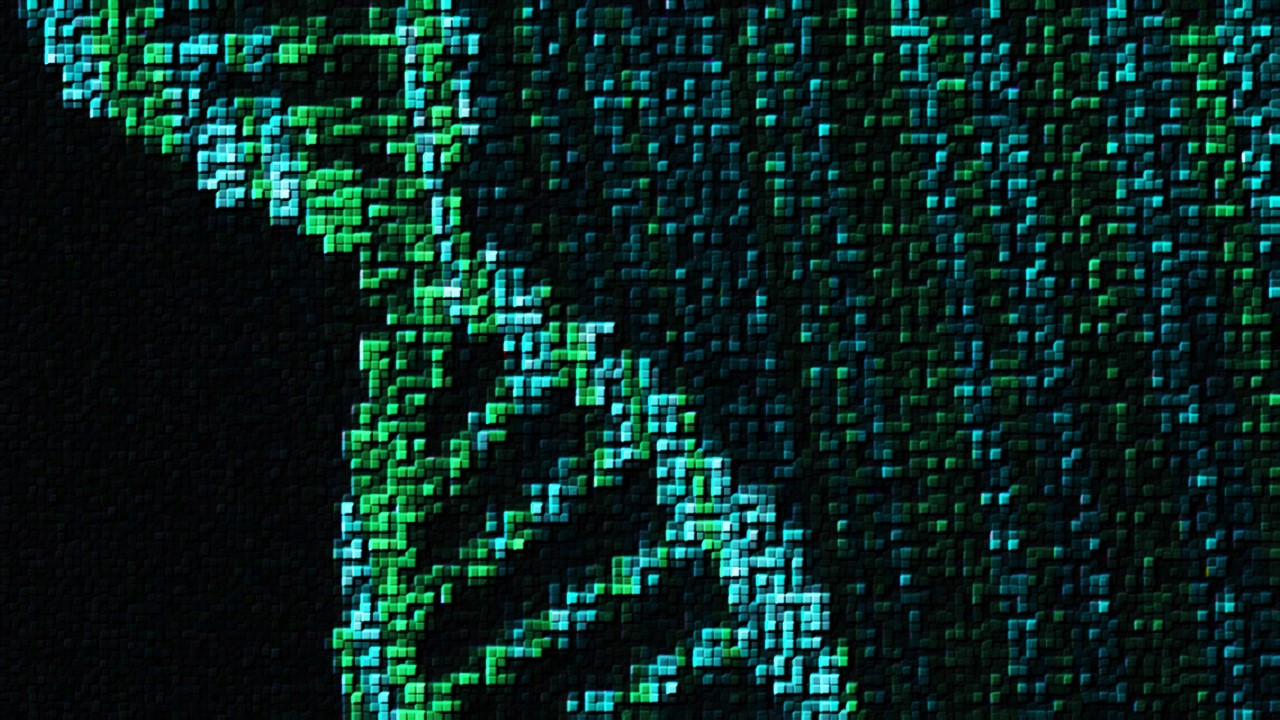

Decoding Molecular Libraries: Error-Resilient Sequencing Analysis and Multidimensional Pattern Recognition

tagFinder exemplifies the convergence of computational innovation and chemical biology, offering a robust framework to navigate the complexities of DNA-encoded science