Machine Learning’s Role in Preclinical Drug Discovery

Machine learning accelerates target identification by mining vast biological datasets for latent gene-disease relationships. Algorithms parse sparse data points and unstructured text from scientific literature, identifying therapeutic targets with potentially higher precision than manual curation. Graph neural networks simulate molecular interactions to optimize drug candidates within biological systems, reducing reliance on trial-and-error synthesis. Bayesian models reverse-engineer drug mechanisms, as seen in repurposing an anticancer compound for pheochromocytoma. However, proprietary platforms, including autonomous labs, often lack peer-reviewed validation, leaving gaps in reproducibility.

Translational research benefits from ML’s ability to interpret high-dimensional data from benchtop experiments. Autonomous systems iteratively design experiments, merging computational predictions with empirical validation to prioritize hypotheses. One AI-developed compound for obsessive-compulsive disorder reportedly bypassed 2,000 candidates in 12 months, yet its opaque development limits scientific scrutiny. Without published methodologies, such claims remain anecdotal rather than foundational. This highlights the tension between commercial innovation and academic rigor in preclinical research.

ML-driven simulations optimize clinical trial protocols by modeling treatment regimens and inclusion criteria. Reinforcement learning has been applied to Alzheimer’s and lung cancer trials, predicting optimal dosing schedules. Start-ups like Trials.AI use natural language processing to flag protocol ambiguities, potentially reducing operational delays. However, these tools operate without peer-reviewed validation, raising concerns about regulatory acceptance. The absence of transparent benchmarks stifles trust in ML-enhanced trial design.

Challenges persist in translating preclinical ML models to clinical settings. Data fragmentation and regulatory hesitancy slow adoption, particularly in trial planning. Cross-sector partnerships are critical to validate algorithms against traditional methods. Until ML’s preclinical contributions are empirically demonstrated, its impact will remain theoretical rather than transformative. The future of preclinical ML hinges on open collaboration and transparent validation.

Federated learning frameworks could pool disparate datasets while preserving privacy. Regulatory agencies must clarify pathways for ML-integrated protocols to ensure safety without stifling innovation. Autonomous labs must publish validation studies to bridge the gap between proprietary tools and academic standards. Collaborative consortia, like MELLODDY, exemplify how shared data can accelerate model training. Without these steps, ML risks becoming a fragmented tool rather than a unified advancement.

Precision Cohort Selection via Unsupervised Learning

Unsupervised ML identifies phenotypic subtypes within heterogeneous patient populations using EHR and genetic data. For type 2 diabetes, clustering algorithms revealed three distinct subgroups with divergent therapeutic needs. This stratification minimizes non-responders, enhancing trial power while reducing participant risk. Start-ups like Bullfrog AI claim to predict responders using clinical trial datasets, but conflating prediction with selection risks overlooking unforeseen beneficiaries. Overly restrictive criteria may exclude marginalized populations, skewing post-market applicability.
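As a rough illustration of the clustering step described above, the sketch below runs a plain k-means on a handful of made-up patient records. The two features, their values, the starting centroids, and the choice of three clusters are all assumptions for demonstration; the work referenced here used its own variables and methods.

```python
import math

def kmeans(points, centroids, iterations=20):
    """Plain k-means: assign each point to its nearest centroid, then recompute centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = []
        for old, members in zip(centroids, clusters):
            if members:
                # Mean of each feature across the cluster members.
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # assignments have stabilized
        centroids = new_centroids
    labels = [min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

# Hypothetical, already-scaled features per patient (e.g. two lab-derived scores).
patients = [
    (0.10, 0.20), (0.20, 0.10), (0.15, 0.15),
    (0.90, 0.80), (0.80, 0.90), (0.85, 0.85),
    (0.10, 0.90), (0.20, 0.80), (0.15, 0.85),
]
initial = [patients[0], patients[3], patients[6]]  # fixed start for reproducibility
centroids, labels = kmeans(patients, initial)
print(labels)
```

In practice the number of clusters is itself a modeling decision, and subgroup labels like these would still need clinical interpretation before informing eligibility criteria.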

Natural language processing enhances cohort selection by matching unstructured EHR narratives to trial criteria. Cross-modal algorithms encode text and tabular data into shared latent spaces, improving patient-trial alignment. Commercial tools like Mendel.AI automate this process but inherit biases from underlying EHR documentation patterns. For example, rural patients with sparse healthcare interactions may be systematically excluded. Continuous auditing is essential to mitigate algorithmic discrimination.

ML’s reliance on real-world data introduces ethical dilemmas. Biased historical datasets may perpetuate inequities in cohort selection. Techniques like counterfactual fairness adjust models to account for underrepresented groups, but implementation remains nascent. Regulatory frameworks must mandate bias audits for ML tools used in trial recruitment. Comparative studies evaluating ML against traditional selection methods are scarce.

Retrospective analyses suggest ML reduces screen failures by 15–20%, but prospective validation is lacking. Without such prospective evidence, sponsors hesitate to adopt ML-driven recruitment strategies. Future advancements require standardized benchmarks for fairness and efficacy. Open-source algorithms trained on diverse datasets could democratize access to precision cohort selection. Collaboration between regulators and developers is critical to establish trust in these tools.

A case study in oncology trials demonstrated ML’s ability to refine inclusion criteria. Unsupervised learning identified tumor microenvironment signatures predictive of drug response. This reduced trial enrollment time by 30% while maintaining statistical power. However, the model’s reliance on single-institution data limited its external validity. Multi-center validation studies are now underway to address this gap.

ML-Driven Participant Retention and Adherence

AiCure’s facial recognition software monitors medication adherence via smartphone cameras, outperforming direct observation in schizophrenia trials. The tool flags missed doses in real time, enabling timely interventions. However, privacy concerns and device access barriers may exclude socioeconomically disadvantaged participants. Biometric data collection also risks algorithmic bias, as seen in racial disparities in computer vision performance. Ethical deployment requires balancing surveillance with participant autonomy.

Passive data collection via wearables reduces participant burden by extracting biomarkers from real-world activities. Accelerometer data correlates with Parkinson’s disease progression, while photoplethysmograms predict cardiovascular events. These digital endpoints require validation against gold-standard clinical assessments. Regulatory agencies like the FDA are developing frameworks to qualify wearable-derived biomarkers for use in trials that support regulatory submissions. Standardization of sensor data preprocessing pipelines is critical for reproducibility.

Natural language processing repurposes clinical notes for automated case report form entry, minimizing manual errors. In epilepsy trials, NLP auto-populates seizure frequency data from physician narratives. However, interoperability challenges persist across EHR systems, limiting generalizability. Standardized documentation practices are critical for scalable NLP solutions. Collaborative efforts like FHIR aim to harmonize data structures across institutions.

ML identifies participants at risk of drop-out using predictive analytics. Models analyze engagement patterns, such as missed visits or survey delays, to trigger retention interventions. Yet prospectively validated models are rare, and overfitting to historical data remains a concern. A recent trial in depression research used reinforcement learning to personalize retention strategies. Early results show a 25% reduction in attrition compared to standard protocols.

Ethical deployment demands transparency in data usage and participant consent. Overreliance on commercial tools lacking peer review risks embedding undetected biases. Regulatory guidance on informed consent for ML-driven monitoring is still evolving. Participants must understand how biometric data will be stored, analyzed, and shared. Failure to address these concerns may erode public trust in clinical research.

Data Collection and Management Innovations

Wearables generate sparse, asynchronous data streams requiring specialized preprocessing. Deep neural networks denoise single-lead ECG signals, while recurrent models analyze accelerometer data for movement disorders. These pipelines transform raw sensor output into research-grade endpoints but demand computational resources inaccessible to smaller institutions. Cloud-based platforms like AWS HealthLake offer scalable solutions but raise data sovereignty concerns. Balancing accessibility with security remains a persistent challenge.

Generative adversarial networks (GANs) can help prioritize which patients undergo more invasive or costly testing. In histopathology, GANs identify slides requiring multiplexed imaging, reducing costs and participant burden. However, model drift over time necessitates continuous recalibration, complicating clinical implementation. Federated learning frameworks allow decentralized model updates without centralizing sensitive data. This approach aims to preserve privacy while maintaining model accuracy across diverse populations.
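The decentralized update mentioned above is often implemented as a weighted average of locally trained parameters, commonly called federated averaging. The following is a minimal sketch under that assumption; the site count, parameter vectors, and sample sizes are invented, and real deployments add secure aggregation and many training rounds.

```python
def federated_average(site_weights, site_sizes):
    """Average per-site model parameters, weighting each site by its sample count."""
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(weights[j] * size for weights, size in zip(site_weights, site_sizes)) / total
        for j in range(n_params)
    ]

# Hypothetical parameters from three trial sites after one round of local training.
site_weights = [[0.2, 1.0], [0.4, 2.0], [0.6, 3.0]]
site_sizes = [100, 100, 200]  # number of local records at each site

global_weights = federated_average(site_weights, site_sizes)
print(global_weights)
```

Only the parameter vectors leave each site in this scheme; the patient-level records stay local, which is the property the paragraph above relies on.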

ML addresses missing data through imputation and synthetic data generation. Techniques like multiple imputation by chained equations (MICE) infer missing values, but overreliance risks biased estimates. Synthetic data generated via GANs can augment small datasets but must mimic real-world variability. Validation against ground-truth data is essential to ensure synthetic datasets retain clinical relevance. Regulatory agencies have yet to establish clear guidelines for synthetic data use in trials.
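To make the chained-equations idea concrete, here is a deliberately simplified sketch: two variables, linear regressions, and a single deterministic fill-in. It is not full MICE, which draws imputations with random noise and produces several completed datasets to pool. The function names and toy values are assumptions for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    return my - b * mx, b

def chained_imputation(rows, sweeps=10):
    """rows: list of (x, y) pairs with None for missing values. Returns a filled copy."""
    miss_x = {i for i, (x, _) in enumerate(rows) if x is None}
    miss_y = {i for i, (_, y) in enumerate(rows) if y is None}
    obs_x = [x for x, _ in rows if x is not None]
    obs_y = [y for _, y in rows if y is not None]
    # Start by filling every gap with the observed mean of its column.
    xs = [x if x is not None else sum(obs_x) / len(obs_x) for x, _ in rows]
    ys = [y if y is not None else sum(obs_y) / len(obs_y) for _, y in rows]
    for _ in range(sweeps):
        # Regress x on y using rows where x was observed, then refresh missing x.
        keep = [i for i in range(len(rows)) if i not in miss_x]
        a, b = fit_line([ys[i] for i in keep], [xs[i] for i in keep])
        for i in miss_x:
            xs[i] = a + b * ys[i]
        # Regress y on x using rows where y was observed, then refresh missing y.
        keep = [i for i in range(len(rows)) if i not in miss_y]
        a, b = fit_line([xs[i] for i in keep], [ys[i] for i in keep])
        for i in miss_y:
            ys[i] = a + b * xs[i]
    return list(zip(xs, ys))

# Toy data lying on y = 2x + 1, with one missing value in each column.
rows = [(1, 3), (2, 5), (3, None), (None, 9), (5, 11)]
filled = chained_imputation(rows)
print(filled)
```

Because the toy data are perfectly linear, the imputed values land exactly on the line; real trial data would not behave this neatly, which is why the bias warning above matters.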

Automated quality control algorithms flag anomalous data patterns in real time. Auto-encoders detect implausible lab values or outliers, enabling rapid corrective action. These tools are particularly valuable in multicenter trials with variable data collection practices. However, overly sensitive models may flag legitimate outliers, delaying database lock. Threshold tuning based on trial-specific error rates is critical to balance sensitivity and specificity.
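The threshold trade-off described above can be explored with a small sweep. This sketch assumes each record already has an anomaly score (for an auto-encoder this would typically be a reconstruction error) and that a labeled sample of true data errors exists; the scores, labels, and candidate thresholds are invented. Youden's J is used here as one possible selection criterion, not as the method prescribed by the source.

```python
def sensitivity_specificity(scores, is_error, threshold):
    """Flag records scoring above the threshold and compare against known error labels."""
    tp = fp = tn = fn = 0
    for score, error in zip(scores, is_error):
        flagged = score > threshold
        if flagged and error:
            tp += 1
        elif flagged and not error:
            fp += 1
        elif not flagged and error:
            fn += 1
        else:
            tn += 1
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity

def best_threshold(scores, is_error, candidates):
    """Pick the candidate maximizing Youden's J = sensitivity + specificity - 1."""
    return max(candidates, key=lambda t: sum(sensitivity_specificity(scores, is_error, t)) - 1)

# Hypothetical anomaly scores and audit labels (True = confirmed data error).
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
is_error = [False, False, False, False, True, False, True, True]
candidates = [0.25, 0.35, 0.5, 0.65, 0.85]

chosen = best_threshold(scores, is_error, candidates)
sens, spec = sensitivity_specificity(scores, is_error, chosen)
print(chosen, sens, spec)
```

A trial with costly false alarms might weight specificity more heavily than this criterion does; the point is only that the threshold is tuned against trial-specific labeled errors rather than fixed in advance.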

Regulatory uncertainty surrounds ML-processed data. The FDA’s SaMD framework categorizes algorithms by risk but lacks specificity for clinical research contexts. Clear guidelines are needed to harmonize innovation with patient safety. Sponsors must demonstrate equivalence between ML-derived endpoints and traditional measures. Until standardization occurs, adoption will remain fragmented across therapeutic areas.

Advanced Analytical Techniques for Trial Data

Unsupervised learning identifies latent patient clusters in real-world data, informing hypothesis generation. In heart failure trials, cluster analysis revealed subgroups with differential responses to resynchronization therapy. These insights guide personalized trial designs but require prospective validation. ML-powered phenotyping reduces noise from heterogeneous populations, enhancing signal detection. However, overclustering may fragment cohorts into non-actionable subgroups.

Random forests capture nonlinear interactions in treatment effect heterogeneity. In the COMPANION trial, random forests outperformed logistic regression in predicting CRT response. ML-enhanced secondary analyses reduce false discovery rates by modeling complex feature relationships. Feature importance rankings derived from SHAP values highlight biomarkers driving treatment outcomes. Interpretability tools must evolve to keep pace with increasingly complex models.

Causal inference methods like targeted maximum likelihood estimation (TMLE) derive insights from observational data. TMLE adjusts for confounding variables in EHR-derived cohorts, approximating randomized trial conditions. However, unmeasured confounders limit reliability, necessitating cautious interpretation. Sensitivity analyses quantify the impact of unobserved variables on effect estimates. These techniques bridge the gap between real-world evidence and traditional trial data.
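One common way to quantify sensitivity to unmeasured confounding is the E-value of VanderWeele and Ding, shown here as an example of the kind of sensitivity analysis mentioned above rather than as the specific method the source has in mind. For a risk ratio RR at or above 1 it is RR + sqrt(RR × (RR − 1)); protective estimates are inverted first. The input value is hypothetical.

```python
import math

def e_value(risk_ratio):
    """Minimum strength of association (risk-ratio scale) an unmeasured confounder
    would need with both treatment and outcome to fully explain away the estimate."""
    if risk_ratio <= 0:
        raise ValueError("risk ratio must be positive")
    rr = risk_ratio if risk_ratio >= 1 else 1 / risk_ratio  # invert protective effects
    return rr + math.sqrt(rr * (rr - 1))

observed_rr = 2.0  # hypothetical effect estimate from an EHR-derived cohort
print(round(e_value(observed_rr), 3))
```

A larger E-value means a stronger hidden confounder would be required to overturn the finding, which gives readers a scale for the "cautious interpretation" the paragraph calls for.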

Reinforcement learning optimizes adaptive trial designs. Models simulate patient responses to dynamically adjust dosing or eligibility criteria. While promising, computational complexity and regulatory hurdles hinder widespread adoption. The I-SPY 2 trial exemplifies adaptive design success but relies on Bayesian adaptive randomization rather than ML. Hybrid approaches combining ML with Bayesian methods may offer a pragmatic path forward.

Interoperability barriers fragment data liquidity. Initiatives like FHIR and OMOP standardize EHR structures, but adoption remains uneven. Cross-institutional data sharing agreements are essential for scalable ML analytics. Blockchain technology could enable secure, auditable data exchanges between stakeholders. Without interoperability, ML’s analytical potential will remain siloed and underutilized.

Operational and Ethical Barriers to ML Integration

Data scarcity and siloing limit ML’s generalizability. Public repositories like MIMIC-III focus on critical care, lacking diversity for broader applications. Temporal drift in EHR data, such as shifting coding practices or diagnostic criteria, further complicates model training. Transfer learning adapts models to new datasets but requires careful calibration. Data augmentation techniques like the synthetic minority oversampling technique (SMOTE) mitigate class imbalance.
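The core of SMOTE is interpolation between a minority-class sample and one of its nearest minority-class neighbors. The sketch below shows that step in isolation, assuming numeric features on comparable scales; the sample values, `k`, and the seed are illustrative, and production work would normally use a maintained library implementation instead.

```python
import math
import random

def smote(minority, n_synthetic, k=2, seed=0):
    """Create synthetic minority samples by interpolating toward a nearby minority neighbor."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_synthetic):
        base = rng.choice(minority)
        # The k nearest other minority samples to the chosen base point.
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment from base to neighbor
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

# Hypothetical minority-class records (e.g. rare adverse-event cases), two features each.
minority = [(1.0, 2.0), (1.5, 2.5), (2.0, 2.0), (1.2, 3.0)]
new_points = smote(minority, n_synthetic=5)
print(len(new_points))
```

Every synthetic point lies on a line segment between two real minority samples, so the method rebalances classes without inventing values outside the observed range.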

Explainability tools like saliency maps demystify model decisions but often provide post hoc rationalizations. Clinicians distrust “black box” algorithms, particularly in high-stakes trial settings. Trustworthiness, built through validation and real-world performance tracking, may outweigh explainability. Model cards documenting training data, metrics, and limitations enhance transparency. Regulatory mandates for explainability vary globally, creating compliance challenges for multinational trials.
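A model card can be as simple as a structured record with a completeness check. The sketch below is one possible shape built from the elements named above (training data, metrics, limitations); the field names, the example entries, and the `missing_fields` helper are assumptions, not a published schema.

```python
from dataclasses import dataclass, field, asdict

@dataclass
class ModelCard:
    model_name: str
    intended_use: str
    training_data: str
    metrics: dict = field(default_factory=dict)
    limitations: list = field(default_factory=list)

def missing_fields(card):
    """Return the names of sections that are still empty."""
    return [name for name, value in asdict(card).items() if value in ("", None, {}, [])]

# Hypothetical card for a drop-out risk model; metrics are left empty until validated.
card = ModelCard(
    model_name="dropout-risk-v0",
    intended_use="Flag participants for retention outreach; not for eligibility decisions.",
    training_data="Single-sponsor historical trial data (placeholder description).",
)
print(missing_fields(card))
```

Running a check like this before a tool is used in a trial makes gaps in documentation visible rather than leaving them implicit.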

Regulatory ambiguity stifles investment. The FDA’s case-by-case evaluations of ML tools create uncertainty for sponsors. Adaptive frameworks allowing continuous learning while monitoring for drift could balance innovation and safety. The EU’s GDPR imposes strict requirements for algorithmic transparency, complicating cross-border collaborations. Harmonized international guidelines are urgently needed to streamline ML adoption.

Bias amplification remains a critical risk. Models trained on historically biased data may perpetuate inequities in cohort selection or endpoint adjudication. De-biasing techniques, such as adversarial training, require integration into ML pipelines. Prospective audits comparing outcomes across demographic subgroups are essential. Failure to address bias risks eroding public trust and regulatory goodwill.
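A subgroup audit of the kind described above can start with something as basic as comparing selection rates across groups. This sketch assumes each screened candidate is recorded as a (group, selected) pair; the group labels and counts are invented, and what ratio should trigger review is a policy choice this example does not settle.

```python
from collections import defaultdict

def selection_rates(records):
    """records: iterable of (group, selected) pairs -> selection rate per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [selected, total]
    for group, selected in records:
        counts[group][0] += int(selected)
        counts[group][1] += 1
    return {group: chosen / total for group, (chosen, total) in counts.items()}

def disparity_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical screening log: group A has 8 of 10 selected, group B has 4 of 10.
records = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = selection_rates(records)
print(rates, disparity_ratio(rates))
```

A low ratio does not prove the model is unfair, since eligibility can legitimately differ between groups, but it identifies where a closer look at the selection logic is warranted.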

Ethical review boards lack expertise to evaluate ML protocols. Training programs and standardized audit frameworks are needed to ensure ethical ML deployment. Participant consent forms must explicitly address data usage in ML workflows. Dynamic consent platforms allow participants to adjust permissions as trials evolve. Without ethical oversight, ML risks becoming a tool of exclusion rather than inclusion.

Future Directions and Collaborative Imperatives

Hybrid trial designs merge ML-enhanced exploratory phases with traditional confirmatory methods. ML identifies promising subgroups or endpoints, which are then validated in randomized cohorts. This approach balances innovation with regulatory rigor. The RECOVERY trial’s adaptive design during COVID-19 offers a template for future integration. Scalability depends on interoperable data infrastructure and regulatory buy-in.

Global consortia like MELLODDY pool preclinical data while protecting proprietary interests. Such collaborations accelerate model training but require robust data governance frameworks to ensure equity. Federated learning enables cross-border collaboration without centralizing sensitive data. However, disparities in computational resources between institutions threaten equitable participation. Funding mechanisms must prioritize capacity-building in low-resource settings.

Synthetic data generation could overcome privacy and scarcity challenges. GANs create realistic patient datasets for algorithm training, though validation against real-world data remains essential. Regulatory agencies are piloting synthetic data acceptance for early-phase trials. Ethical concerns about data provenance and consent persist, requiring clear governance. Synthetic data’s role in regulatory submissions remains undefined but promising.

Regulatory agencies must clarify ML validation standards. Model cards detailing training data, performance metrics, and ethical considerations could standardize reporting. The FDA’s Software Precertification (Pre-Cert) pilot program for SaMD offers a potential blueprint for clinical research tools. Real-world performance monitoring via digital twins could provide continuous validation post-deployment. Proactive engagement between regulators and developers is critical to avoid stagnation.

The ethical imperative to streamline trials demands urgent action. ML’s potential to reduce costs and accelerate therapies must be weighed against risks of bias and inequity. Cross-disciplinary collaboration that unites clinicians, data scientists, and ethicists is the only path forward. Public-private partnerships can fund high-risk, high-reward ML applications in neglected diseases. Without collective action, ML’s promise will remain fragmented and unrealized.

Study DOI: https://doi.org/10.1186/s13063-021-05489-x

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

Clinical Operations

Beyond the Intervention: Deconstructing the Science of Healthcare Improvement

Improvement science is not a discipline in search of purity. It is a field forged in the crucible of complexity.

Clinical Operations

Translating Innovation into Practice: The Silent Legal Forces Behind Clinical Quality Reform

As public health increasingly intersects with clinical care, the ability to scale proven interventions becomes a core competency.

Read More Articles

Myosin’s Molecular Toggle: How Dimerization of the Globular Tail Domain Controls the Motor Function of Myo5a

Myo5a exists in either an inhibited, triangulated rest or an extended, motile activation, each conformation dictated by the interplay between the GTD and its surroundings.

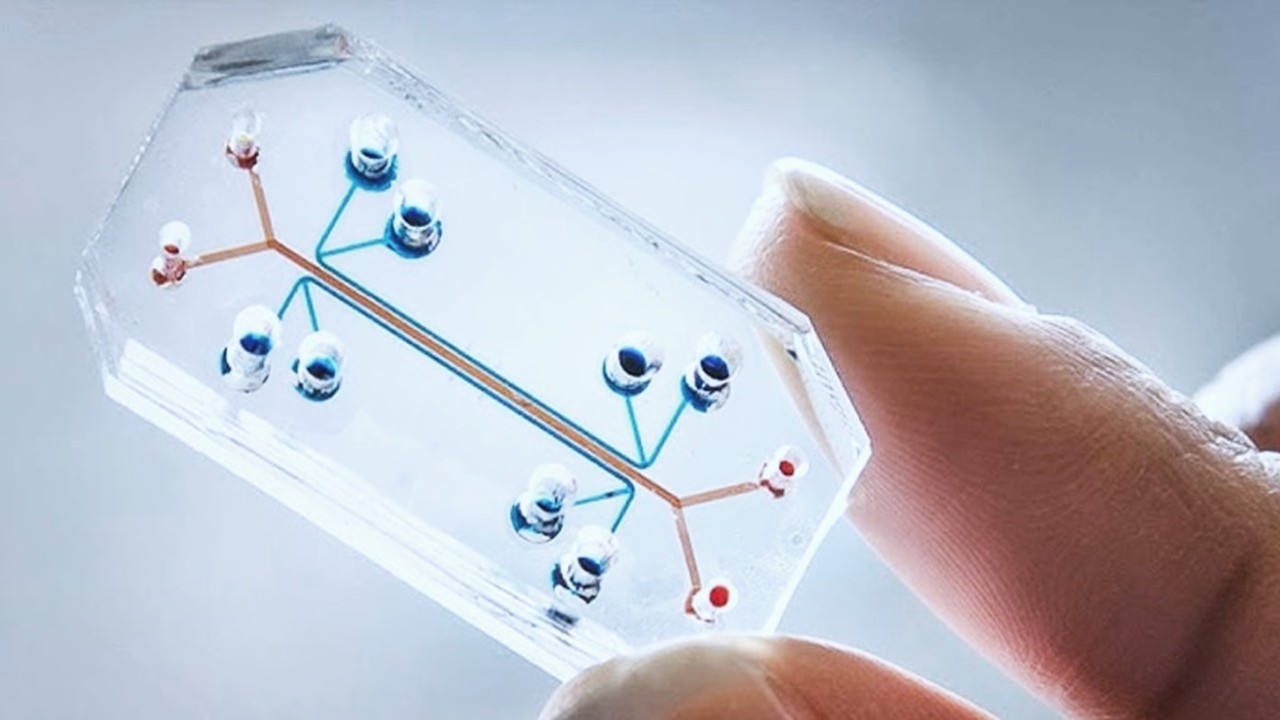

Designing Better Sugar Stoppers: Engineering Selective α-Glucosidase Inhibitors via Fragment-Based Dynamic Chemistry

One of the most pressing challenges in anti-diabetic therapy is reducing the unpleasant and often debilitating gastrointestinal side effects that accompany α-amylase inhibition.