Drug discovery has been one of the most challenging and labour-intensive processes in pharmacology for the entirety of human history. Assessing new chemical compounds for biological activity, and confirming that they act on their intended target as expected, is a substantial hurdle in its own right, before a substance even moves on to safety assessment and clinical trials.

A Brief History of Drug Discovery

Historically, drug discovery relied much more heavily on serendipity and on an early form of what we would now call phenotypic drug discovery. Phenotypic approaches assess the observable effect of a substance on cells, tissues or whole organisms without requiring detailed knowledge of its mechanism of action. A large number of pharmaceuticals originated from the plant kingdom, and their discovery was driven largely by observing their effects; understanding how they worked was not a consideration. Many plant-derived drugs, or compounds descended from them, are still in use today. Examples include salicylic acid, an early painkiller derived from the salicin in willow bark and the precursor of aspirin, and digoxin, a cardiac glycoside derived from foxglove.

Screening plants for therapeutic agents remains a valid line of inquiry, but it is not how most modern drug discovery happens. Salicylic acid gave rise to an entire class of painkillers (the non-steroidal anti-inflammatory drugs, or NSAIDs), and in the same way drug discovery moved beyond the finite pool of plant species on the planet. This shift began with the development of chemical analogues of established drugs. That approach later gave way to mechanistically driven (target-based) drug discovery, in which large libraries of chemical compounds are screened for activity against a specific biological target, such as a receptor, an enzyme or a pathway of interest. All three approaches remain in use today, but mechanistically driven drug discovery is the most capital-intensive and the best known.

Modern Drug Discovery

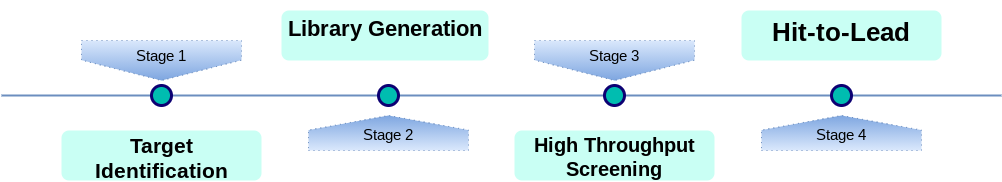

This approach to drug discovery has benefited from advances in automation and computing throughout the 20th and 21st centuries, which helped give rise to the field of combinatorial chemistry. In pharmacological drug discovery, the process begins with identifying the targets on which substances act. The desired mode of action on each target is then classified as either positive (activators, potentiators, agonists) or negative (inhibitors, blockers, antagonists). Large libraries of candidate compounds are then built by systematically combining sets of chemical building blocks, and these libraries are screened for activity against the target.
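The Python sketch below enumerates a toy combinatorial library to show why such libraries grow large so quickly. The scaffold and substituent names are hypothetical placeholders, not real chemistry. A real workflow would build and validate actual structures with a cheminformatics toolkit. The only point here is that library size is the product of the number of building blocks available at each position.

```python
from itertools import product
from math import prod

# Hypothetical building blocks: plain strings stand in for real chemical fragments.
SCAFFOLD = "core"
R1_GROUPS = ["methyl", "ethyl", "phenyl"]
R2_GROUPS = ["hydroxyl", "amino", "chloro", "fluoro"]

def enumerate_library(scaffold, *substituent_sets):
    """Yield every combination of one substituent per position on the scaffold."""
    for combo in product(*substituent_sets):
        yield (scaffold,) + combo

def library_size(*substituent_sets):
    """Number of compounds = product of the building-block counts per position."""
    return prod(len(s) for s in substituent_sets)

library = list(enumerate_library(SCAFFOLD, R1_GROUPS, R2_GROUPS))
print(len(library))   # 12 virtual compounds (3 * 4)
print(library[0])     # ('core', 'methyl', 'hydroxyl')

# Three positions with 100 hypothetical building blocks each already give a million compounds.
print(library_size(range(100), range(100), range(100)))  # 1000000
```

Because the count multiplies with each added position, exhaustive laboratory synthesis quickly becomes impractical. This is one reason much of the screening is done computationally first.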

Much of this work is done in silico using computational chemistry, because computational analyses are far more cost- and time-efficient than laboratory experiments. This comes with obvious trade-offs. Researchers still have to establish whether a compound predicted to interact with a target can actually be synthesised, and any promising candidates still have to be screened experimentally.

A Future View of QC and Drug Discovery

This is why quantum computing (QC) is such a promising development for the field. Classical computers store and process information as bits, each of which is either 0 or 1 (off or on). Quantum computers use qubits, which can exist in a superposition of both states at once. They can also exploit entanglement, in which the states of several qubits are correlated so that they cannot be described independently. As a result, describing n qubits takes 2^n amplitudes. For certain problems, notably simulating molecules, which are themselves quantum systems, this is expected to give quantum computers speed-ups of many orders of magnitude over any classical system.
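The Python sketch below simulates superposition and entanglement classically. It stores an n-qubit register as a list of 2**n amplitudes, applies a Hadamard gate and then a CNOT gate, and produces an entangled Bell state in which the two qubits are always measured with matching values. The helper names are our own. This is a teaching sketch, not a description of how a quantum computer or any particular simulator library works internally.

```python
import math

def apply_hadamard(state, target, n_qubits):
    """Apply a Hadamard gate to qubit `target` of an n-qubit state vector.
    Qubit 0 is the most significant bit of the basis-state index."""
    s = 1 / math.sqrt(2)
    mask = 1 << (n_qubits - 1 - target)
    new = list(state)
    for i in range(len(state)):
        if i & mask == 0:              # visit each (target=0, target=1) pair once
            j = i | mask
            a0, a1 = state[i], state[j]
            new[i] = s * (a0 + a1)
            new[j] = s * (a0 - a1)
    return new

def apply_cnot(state, control, target, n_qubits):
    """Flip `target` wherever `control` is 1 by swapping the paired amplitudes."""
    cmask = 1 << (n_qubits - 1 - control)
    tmask = 1 << (n_qubits - 1 - target)
    new = list(state)
    for i in range(len(state)):
        if i & cmask and not i & tmask:
            j = i | tmask
            new[i], new[j] = state[j], state[i]
    return new

n = 2
state = [0.0] * (2 ** n)   # 2**n amplitudes describe n qubits
state[0] = 1.0             # start in |00>
state = apply_hadamard(state, 0, n)   # superposition: (|00> + |10>) / sqrt(2)
state = apply_cnot(state, 0, 1, n)    # entangled:     (|00> + |11>) / sqrt(2)

# Measurement probabilities: roughly 0.5 for '00' and '11', zero for '01' and '10'.
probs = {format(i, f"0{n}b"): abs(a) ** 2 for i, a in enumerate(state)}
print(probs)
```

The amplitudes are kept real here because both gates have real entries; general quantum states need complex numbers. The list also doubles in length with every added qubit, which is why classical simulation of large quantum systems becomes so expensive.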

Exactly simulating the interactions between a target and a drug candidate requires computing power that scales exponentially with the number of electrons involved. Approximations can trade accuracy for speed. Even for conventional small-molecule drugs, exact simulation is time-consuming, and for larger, more complex compounds it becomes a herculean task.
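The Python sketch below shows that exponential scaling by counting the Slater determinants in a full configuration interaction (FCI) expansion, which gives the exact solution within a chosen orbital basis. For illustration only, it assumes one spatial orbital per electron and equal numbers of spin-up and spin-down electrons. Real calculations depend on the basis set and on which orbitals are included.

```python
from math import comb

def fci_determinants(n_orbitals, n_alpha, n_beta):
    """Number of Slater determinants in an FCI expansion: every way of placing
    the spin-up and spin-down electrons in the available spatial orbitals."""
    return comb(n_orbitals, n_alpha) * comb(n_orbitals, n_beta)

for n_electrons in (10, 20, 30, 40):
    m = n_electrons  # assumption: as many spatial orbitals as electrons
    count = fci_determinants(m, n_electrons // 2, n_electrons // 2)
    print(f"{n_electrons:>3} electrons, {m} orbitals: {count:.3e} determinants")
```

Going from 10 to 20 electrons takes the count from 63,504 to over 3 × 10^10. Approximate methods such as Hartree-Fock or density functional theory avoid this blow-up because their cost grows only polynomially, which is the speed-for-accuracy trade-off described above.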

Quantum computing could also form one half of a two-pronged approach to reducing the computing power required. Artificial intelligence, particularly machine learning, has already grown rapidly in drug discovery, both for chemical simulation and for planning compound synthesis. Machine learning alone can substantially reduce the computational cost of these complex simulations, and combined with quantum computing the time savings could be considerable.

Current Developments in QC and Drug Discovery

Quantum computing is already making waves, even though fault-tolerant quantum processors remain a distant prospect. Such processors would correct errors faster than they arise and would therefore be able to withstand noise. Pharmacology, however, can expect to benefit from the technology long before then, because algorithms designed for Noisy Intermediate-Scale Quantum (NISQ) devices are a much more feasible near-term target. British firm Cambridge Quantum Computing (CQC), for example, partnered with Roche to develop and implement NISQ algorithms to accelerate Roche's research efforts. CQC has also developed its own platform for quantum computational chemistry, known as EUMEN.
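The source does not say which algorithms CQC or Roche use. One widely discussed NISQ algorithm for chemistry is the variational quantum eigensolver (VQE). In VQE, a quantum device prepares a parameterised trial state and a classical optimiser tunes the parameters to minimise the measured energy. The Python sketch below simulates a one-qubit VQE entirely classically. It assumes a toy Hamiltonian H = aZ + bX with made-up coefficients rather than a real molecule, and it obtains gradients with the parameter-shift rule.

```python
import math

# Toy one-qubit Hamiltonian H = A*Z + B*X (illustrative coefficients, not a real molecule).
A, B = -0.8, 0.6

def ansatz_state(theta):
    """Trial state Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1> (real amplitudes)."""
    return math.cos(theta / 2), math.sin(theta / 2)

def energy(theta, a=A, b=B):
    """Expectation value <psi|H|psi>, computed exactly from the state vector.
    On NISQ hardware this would instead be estimated from repeated noisy measurements."""
    c0, c1 = ansatz_state(theta)
    z_exp = c0 * c0 - c1 * c1   # <Z>
    x_exp = 2 * c0 * c1         # <X>
    return a * z_exp + b * x_exp

def parameter_shift_grad(theta):
    """Exact gradient for an Ry rotation from two shifted energy evaluations."""
    return 0.5 * (energy(theta + math.pi / 2) - energy(theta - math.pi / 2))

def vqe(theta=0.1, learning_rate=0.2, steps=200):
    """Classical outer loop: plain gradient descent on the variational parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_grad(theta)
    return theta, energy(theta)

theta_opt, e_opt = vqe()
exact_ground = -math.hypot(A, B)   # lowest eigenvalue of [[A, B], [B, -A]]
print(f"VQE energy {e_opt:.6f}, exact ground-state energy {exact_ground:.6f}")
```

For molecules, the Hamiltonian would be a sum of many multi-qubit terms and the ansatz a deeper circuit. The division of labour stays the same, however: short quantum circuits estimate energies, and a classical optimiser updates the parameters. This split is what makes the approach suited to noisy, near-term hardware.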

Another company active in the area is ProteinQure, which has partnered with AstraZeneca. ProteinQure develops machine learning and quantum computing technologies for the discovery of peptide-based drugs and antibodies, both of which are classes of therapeutics that require intensive chemical simulation. Quantum computing promises to let pharmaceutical companies design novel, complex structures that could reach targets typically out of reach for conventional drugs. The cost of quantum computing is not expected to be low, particularly while the technology remains new. However, delivering quantum computing through the cloud would give a wider range of companies access to its capabilities and help accelerate its adoption.

Meanwhile, discovering and developing a new drug currently costs anywhere from hundreds of millions to billions of dollars and takes around 10 years. For drugs approved by the FDA from 2009 to 2018 with publicly available data, the median development cost was $985 million. The figure is considerably higher for particularly complex treatments, such as those for cancer. Given the size of this market, the time and money that quantum computing could save should not be discounted.

Although quantum computing is not yet a mature technology, the sector is developing rapidly. Pharmaceutical companies would do well to keep abreast of these developments, as the technology is already beginning to make an impact on drug discovery and preclinical drug development.
