As industries rely on an ever-growing number of chemicals, the need to predict toxicity reliably has never been greater. The Tox21 program, initiated in 2008 as a collaboration among U.S. government agencies including the Environmental Protection Agency (EPA) and the U.S. Food and Drug Administration (FDA), offers a glimpse into the future of toxicity prediction. It uses high-throughput screening (HTS) to measure the biochemical activity of diverse substances, which cuts time and cost and reduces the reliance on animal testing that raises ethical concerns. Alongside Tox21, the EPA’s Toxicity Forecaster (ToxCast) program profiles substances that may harm human health and the environment, supporting the regulation of environmental pollutants.

Advancing ADME/Tox Prediction

Understanding how chemicals behave in the human body through absorption, distribution, metabolism, and excretion (ADME) is foundational to pharmaceutical and toxicological research. Predicting how these processes interact, and how they relate to toxicity (together abbreviated ADMET), remains a formidable challenge. Absorption alone depends on a compound’s solubility, membrane permeability, concentration, and partition coefficients. Traditionally, researchers have relied on laborious, resource-intensive experiments to characterize these processes. The Tox21 and ToxCast programs have changed this landscape: their large, diverse datasets are valuable training material for artificial intelligence (AI) algorithms that aim to predict toxic effects efficiently. This could make drug discovery more streamlined and cost-effective by identifying potential safety concerns early in the development pipeline, benefiting both patients and the pharmaceutical industry.

AI algorithms trained on Tox21 and ToxCast data can weigh the many factors that influence a compound’s behavior in the body at once. If their predictions prove fast and accurate enough, problematic compounds can be identified at an earlier stage, reducing costs and speeding the delivery of safer pharmaceuticals to patients. Integrating AI into toxicology therefore promises to combine predictive accuracy with efficiency in both drug discovery and chemical safety evaluation.

Predicting Hepatotoxicity

Drug-induced liver injury (DILI) is one of the foremost safety concerns in drug development. The liver plays a central role in metabolizing drugs, which makes it a crucial organ to monitor for toxicity. Hepatotoxicity, or liver damage caused by drugs, can lead to the discontinuation of promising drug candidates or, in severe cases, the withdrawal of approved medications from the market. Traditionally, hepatotoxicity assessment has relied heavily on costly and time-consuming in vitro and in vivo studies, which slow drug development pipelines. AI-driven computational methods offer an alternative. Trained on large datasets, these tools can rapidly estimate the likelihood of DILI, allowing researchers to flag potential liver-related issues in drug candidates before committing to expensive studies. This shift could significantly reduce the cost and time of drug development while improving patient safety by lowering the risk of hepatotoxicity in new medications.

Tackling Cardiotoxicity Challenges

Cardiotoxicity is a major concern in the pharmaceutical industry because it can derail the development and approval of promising drugs. Regulatory guidance, notably the International Council for Harmonisation (ICH) S7B guideline, calls for rigorous preclinical evaluation of a drug candidate’s propensity to inhibit the hERG (human Ether-à-go-go-Related Gene) channel. hERG channels belong to the Ether-à-go-go (EAG) family of potassium channels and are not confined to the heart; they are also found in other tissues, including the brain, endocrine cells, and muscles. In the heart, they carry a potassium current that helps repolarize cardiac cells after each beat. When small-molecule drugs block these channels, repolarization is delayed, which appears on the electrocardiogram as QT interval prolongation. This lengthening of the QT interval can trigger arrhythmias and, in severe cases, lethal cardiotoxicity. Ensuring that drug candidates show minimal hERG inhibition is therefore essential to drug safety.

The mandatory preclinical examination of drug candidates for hERG channel inhibition is a safeguard to identify and mitigate cardiotoxicity risks early in the drug development process. It underscores the pharmaceutical industry’s commitment to patient safety and ensures that only medications with a favorable cardiac safety profile advance to clinical trials and regulatory evaluations. The knowledge gained from these assessments not only aids in selecting safer drug candidates but also informs strategies for optimizing drug design and formulation to minimize the risk of cardiotoxicity. As such, this rigorous evaluation process is a critical component of the pharmaceutical industry’s efforts to bring innovative and life-saving medications to market while safeguarding patients from potential cardiac side effects.
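One common way to put a number on "minimal inhibition" is a concentration-response curve. The sketch below assumes the standard Hill equation for channel block; the function name, the IC50 value, and the test concentrations are illustrative placeholders, not measurements and not part of the S7B guideline.

```python
def fraction_blocked(concentration_um: float, ic50_um: float, hill: float = 1.0) -> float:
    """Estimate the fraction of hERG current blocked at a drug concentration.

    Uses the Hill equation: block = 1 / (1 + (IC50 / C) ** h).
    Concentrations are in micromolar; all inputs here are hypothetical.
    """
    if concentration_um < 0 or ic50_um <= 0 or hill <= 0:
        raise ValueError("concentration must be >= 0; IC50 and Hill slope must be > 0")
    if concentration_um == 0:
        return 0.0  # no drug, no block
    return 1.0 / (1.0 + (ic50_um / concentration_um) ** hill)


# Hypothetical candidate with an assumed hERG IC50 of 10 uM.
ASSUMED_IC50_UM = 10.0
block_at_1_um = fraction_blocked(1.0, ASSUMED_IC50_UM)    # well below the IC50
block_at_10_um = fraction_blocked(10.0, ASSUMED_IC50_UM)  # exactly at the IC50
```

By construction, the block is 50% when the concentration equals the IC50, so a candidate whose expected exposure sits far below its IC50 blocks only a small fraction of the current.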

The Hunt for Mutagenicity and Carcinogenicity

Mutagenicity and carcinogenicity assessments are central to the risk evaluation of chemicals and pharmaceutical compounds. Mutagenicity is a substance’s capacity to induce genetic mutations, which can lead to a wide range of disorders, including cancer. Carcinogenicity is a compound’s potential to initiate or promote cancer development. Given the well-established link between genetic mutations and cancer, careful evaluation of both properties is essential to safeguarding public health and reducing the global burden of cancer.

Assessing these properties is difficult, however. Results from the Ames test, a common bacterial assay for mutagenic potential, do not always agree with one another or with other evidence. These inconsistencies produce both false positives and false negatives, which complicates the identification of genuinely mutagenic compounds. Findings can also be hard to reproduce across laboratories, adding further uncertainty. Carcinogenicity studies, as outlined in International Council for Harmonisation (ICH) guidelines, are notoriously resource-intensive: they involve two years of experimentation with hundreds of rodents and can cost millions of dollars. The labor and expense of traditional carcinogenicity testing underscore the need for more efficient alternatives.
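The effect of false positives and false negatives can be made concrete with two standard metrics, sensitivity and specificity. The following is a minimal sketch; the function name and the six assay calls are invented for illustration and do not come from any real Ames dataset.

```python
from typing import Dict, Sequence


def assay_performance(predicted: Sequence[bool], reference: Sequence[bool]) -> Dict[str, float]:
    """Compare assay calls against reference labels (True = mutagenic)."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    pairs = list(zip(predicted, reference))
    tp = sum(1 for p, r in pairs if p and r)          # correctly flagged mutagens
    fp = sum(1 for p, r in pairs if p and not r)      # false positives
    fn = sum(1 for p, r in pairs if not p and r)      # false negatives
    tn = sum(1 for p, r in pairs if not p and not r)  # correctly cleared compounds
    return {
        "false_positives": fp,
        "false_negatives": fn,
        # Share of true mutagens the assay catches.
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        # Share of non-mutagens the assay correctly clears.
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }


# Made-up calls for six hypothetical compounds.
assay_calls = [True, True, False, False, True, False]
reference_labels = [True, False, False, True, True, False]
summary = assay_performance(assay_calls, reference_labels)
```

In this toy example the assay produces one false positive and one false negative, so both sensitivity and specificity come out at two thirds.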

In response to these challenges, in silico predictive methods have gained substantial traction in recent years. Leveraging the power of computational modeling and artificial intelligence (AI), these methods offer a promising avenue for assessing mutagenicity and carcinogenicity. Machine learning (ML) approaches, in particular, have emerged as valuable tools to enhance efficiency and precision in predictive toxicology. By analyzing vast datasets and identifying complex patterns, ML models can make informed predictions about the mutagenic and carcinogenic potential of compounds, streamlining the evaluation process and reducing the dependence on resource-intensive animal testing. These developments mark a significant shift toward more efficient and ethically sound approaches to assessing the safety of chemicals and pharmaceuticals, ultimately benefiting public health and advancing the field of toxicology.
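To give a feel for how such a model can work, the sketch below uses one of the simplest similarity-based approaches: a k-nearest-neighbour vote over structural fingerprints compared by Tanimoto similarity. It is a toy under stated assumptions, not the method used by any particular program. The fingerprints (sets of integer feature IDs), the labels, and the function names are all invented placeholders; practical models use real chemical descriptors and far larger training sets.

```python
from typing import FrozenSet, List, Tuple

Fingerprint = FrozenSet[int]


def tanimoto(a: Fingerprint, b: Fingerprint) -> float:
    """Tanimoto similarity: shared features divided by total distinct features."""
    if not a and not b:
        return 0.0
    shared = len(a & b)
    return shared / (len(a) + len(b) - shared)


def predict_mutagenic(query: Fingerprint,
                      training: List[Tuple[Fingerprint, int]],
                      k: int = 3) -> float:
    """Return the fraction of the k most similar training compounds labelled mutagenic (1)."""
    if not training or k < 1:
        raise ValueError("training set must be non-empty and k must be >= 1")
    ranked = sorted(training, key=lambda item: tanimoto(query, item[0]), reverse=True)
    neighbours = ranked[:k]
    return sum(label for _, label in neighbours) / len(neighbours)


# Synthetic placeholder data: (fingerprint, label) with 1 = mutagenic, 0 = non-mutagenic.
TRAINING = [
    (frozenset({1, 2, 3, 4}), 1),
    (frozenset({1, 2, 3, 5}), 1),
    (frozenset({2, 3, 4, 6}), 1),
    (frozenset({7, 8, 9}), 0),
    (frozenset({7, 8, 10}), 0),
    (frozenset({8, 9, 11}), 0),
]

score_similar_to_mutagens = predict_mutagenic(frozenset({1, 2, 3, 6}), TRAINING)
score_similar_to_inactives = predict_mutagenic(frozenset({7, 8, 9, 10}), TRAINING)
```

The returned score is a vote share between 0 and 1 rather than a calibrated probability, which keeps the example honest about how little a handful of neighbours can say.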

In conclusion, the Tox21 and ToxCast programs have ushered in a new era of toxicity prediction, underpinned by AI and computational methodologies. These initiatives have the potential to reshape drug development, making it more efficient, cost-effective, and, most importantly, safer for patients and the environment. As we continue to unlock the power of AI in predicting toxicity, the future of chemical safety looks increasingly promising.

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

Medicinal Chemistry & Pharmacology

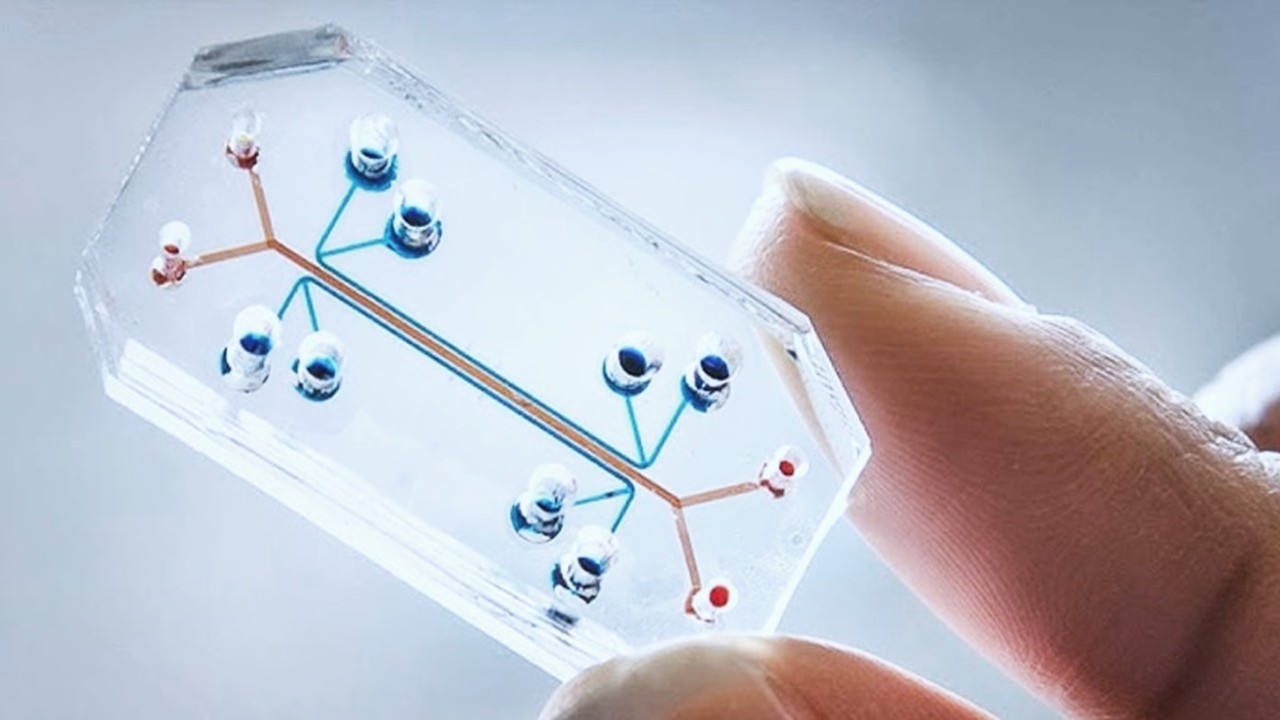

Invisible Couriers: How Lab-on-Chip Technologies Are Rewriting the Future of Disease Diagnosis

The shift from benchtop Western blots to on-chip, real-time protein detection represents more than just technical progress—it is a shift in epistemology.

Medicinal Chemistry & Pharmacology

Designing Better Sugar Stoppers: Engineering Selective α-Glucosidase Inhibitors via Fragment-Based Dynamic Chemistry

One of the most pressing challenges in anti-diabetic therapy is reducing the unpleasant and often debilitating gastrointestinal side effects that accompany α-amylase inhibition.

Medicinal Chemistry & Pharmacology

Into the Genomic Unknown: The Hunt for Drug Targets in the Human Proteome’s Blind Spots

The proteomic darkness is not empty. It is rich with uncharacterized function, latent therapeutic potential, and untapped biological narratives.

Medicinal Chemistry & Pharmacology

Aerogel Pharmaceutics Reimagined: How Chitosan-Based Aerogels and Hybrid Computational Models Are Reshaping Nasal Drug Delivery Systems

Simulating with precision and formulating with insight, the future of pharmacology becomes not just predictive but programmable, one cell at a time.

Read More Articles

Myosin’s Molecular Toggle: How Dimerization of the Globular Tail Domain Controls the Motor Function of Myo5a

Myo5a exists in either an inhibited, triangulated rest or an extended, motile activation, each conformation dictated by the interplay between the GTD and its surroundings.