Redefining Clinical Research Through Generative AI

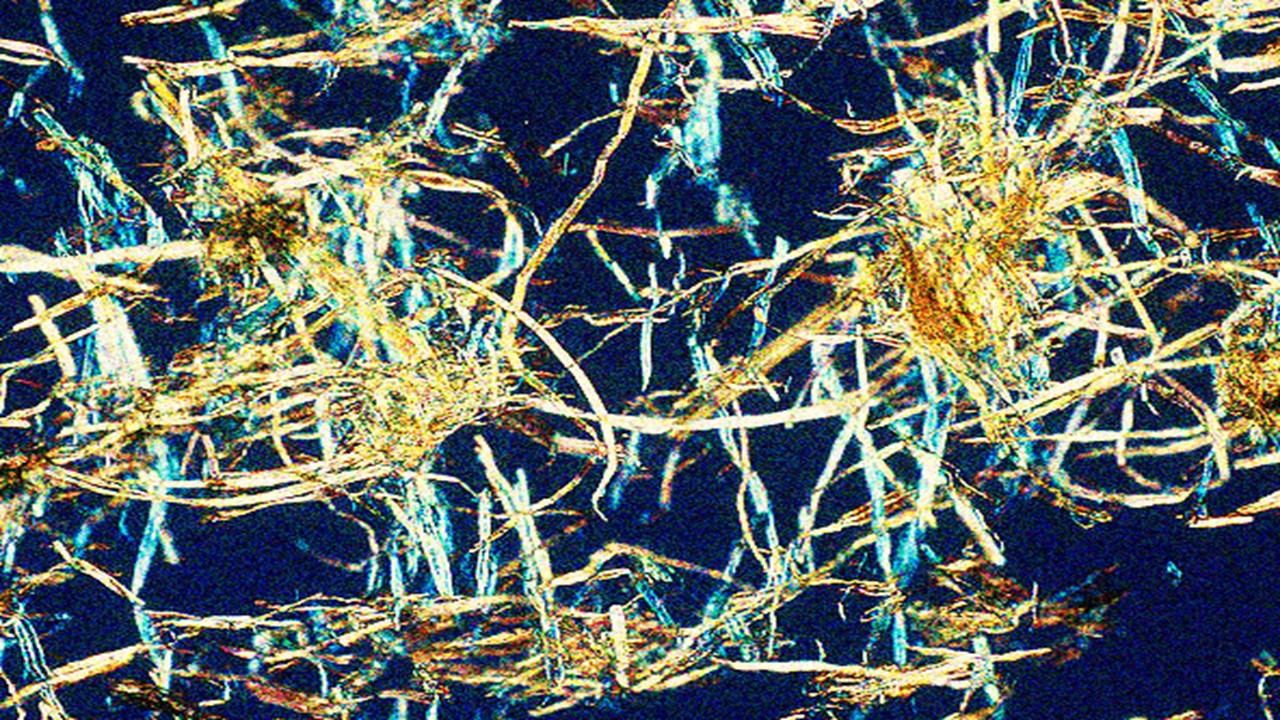

The integration of generative artificial intelligence into clinical research marks a substantial shift in how studies are designed and run. Unlike traditional AI, which analyzes existing data to predict outcomes, generative AI synthesizes novel data, from molecular structures to synthetic patient cohorts. This capability enables researchers to simulate clinical trial scenarios, optimize protocols, and accelerate the design of studies that might otherwise take years to conceptualize. By generating synthetic datasets, these models can ease bottlenecks related to data scarcity, supporting hypothesis testing while reducing direct exposure of patient records.

Administrative efficiencies represent one of the most immediate applications. Drafting trial protocols, informed consent forms, and regulatory documents, tasks that have historically been labor-intensive, can now be streamlined through AI-generated templates. These tools can improve consistency and reduce clerical error, freeing researchers to focus on higher-order analytical challenges. Participant engagement, another critical hurdle, is similarly transformed. AI-driven chatbots and avatars personalize communication, tailoring recruitment strategies and consent processes to individual comprehension levels. This fosters inclusivity, particularly in underrepresented communities where traditional methods often fail to resonate.

Beyond incremental improvements, generative AI reimagines trial design itself. By modeling potential clinical trajectories, researchers can identify optimal endpoints, predict adverse events, and adjust protocols in real time. For example, AI-simulated patient responses to therapeutics enable adaptive trial designs that dynamically evolve based on emerging data. Such agility minimizes resource waste and enhances the likelihood of trial success. Furthermore, generative models integrate diverse data streams—wearables, imaging, electronic health records—to uncover novel biomarkers or disease progression signals, enabling earlier intervention and faster therapeutic development.

Ethical oversight and bias mitigation can also benefit from proactive AI auditing. Generative systems can audit datasets for demographic imbalances, flag algorithmic biases as they emerge, and support equitable representation across trial populations. This capacity is critical for maintaining the integrity of research outcomes, particularly as regulatory bodies increasingly prioritize inclusivity. However, the reliance on AI to identify biases introduces a meta-layer of complexity: ensuring the auditors themselves are free from embedded prejudices requires rigorous validation frameworks.
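As a rough illustration of what auditing a dataset for demographic imbalance can mean in practice, the sketch below compares each group's share of an enrolled cohort against a reference share and reports deviations beyond a tolerance. The function name, the group labels, the reference proportions, and the 5-point tolerance are all hypothetical choices made for this example; they do not come from any specific auditing tool or guideline.

```python
from collections import Counter

def representation_gaps(cohort, reference, tolerance=0.05):
    """Return groups whose cohort share differs from the reference share
    by more than `tolerance` (positive = over-represented)."""
    counts = Counter(cohort)
    total = len(cohort)
    gaps = {}
    for group, expected in reference.items():
        observed = counts.get(group, 0) / total if total else 0.0
        diff = observed - expected
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)
    return gaps

# Hypothetical enrolment labels and reference population shares (illustrative only).
cohort = ["A"] * 70 + ["B"] * 20 + ["C"] * 10
reference = {"A": 0.50, "B": 0.30, "C": 0.20}
gaps = representation_gaps(cohort, reference)
print(gaps)
```

A check like this only surfaces imbalance relative to whatever reference is supplied; choosing that reference, and deciding what to do about a gap, remains a human judgment.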

The transformative potential of generative AI extends to data synthesis and security. By anonymizing datasets and generating privacy-preserving synthetic analogs, these tools mitigate risks associated with sensitive health information. Enhanced data discoverability and interoperability further streamline collaboration across institutions, fostering a global ecosystem of shared knowledge. Yet, the very ability to generate realistic synthetic data demands vigilance against misuse, underscoring the need for robust governance protocols to prevent unintended breaches or ethical lapses.

Balancing Innovation with Ethical Imperatives

Generative AI’s rapid adoption in clinical settings introduces ethical quandaries that mirror its technical complexity. The phenomenon of “hallucinations”—in which models produce plausible but factually incorrect outputs—poses significant risks in trial documentation or diagnostic support. A single erroneous AI-generated conclusion could derail years of research or compromise patient safety, necessitating human oversight at every stage. Similarly, the technology’s capacity to recreate training data raises concerns about inadvertent leaks of protected health information, even from anonymized datasets.

Participant autonomy emerges as a central ethical challenge. While AI-driven engagement tools improve retention and communication, their persuasive capabilities risk crossing into coercion. Chatbots tailored to exploit psychological cues might unduly influence participation decisions, undermining the voluntariness foundational to informed consent. This tension between engagement efficacy and ethical responsibility requires clear boundaries, potentially enforced through algorithmic transparency and third-party audits.

Bias propagation remains a persistent threat. Generative models trained on historically skewed datasets risk perpetuating disparities in diagnosis, treatment allocation, or trial eligibility. For instance, an AI prioritizing certain demographics in recruitment based on outdated clinical guidelines could exacerbate existing inequities. Mitigating this demands diverse training data and continuous monitoring, yet the opacity of many AI systems complicates accountability. Researchers must balance the urgency of innovation with deliberate efforts to audit and rectify biases at both developmental and operational stages.

Technical limitations further complicate ethical deployment. Generative AI’s performance fluctuates based on data quality, often degrading when applied to underrepresented populations or rare diseases. This “generalizability gap” threatens the external validity of trials, particularly in global health contexts where local data may be sparse. Ensuring models are validated across diverse cohorts is essential, but resource-intensive. Collaborative frameworks for data sharing and model testing—particularly with low-income regions—could democratize access while enhancing robustness.

The dual-use nature of generative AI amplifies these challenges. The same tools that synthesize data for drug discovery could, in theory, be used to engineer harmful compounds or manipulate clinical findings. Regulatory bodies must preemptively address misuse through stringent licensing, real-time monitoring, and international cooperation. Establishing ethical guardrails without stifling innovation will require adaptive policies that evolve alongside the technology itself.

Regulatory Frameworks in the Age of AI-Driven Discovery

Current regulatory landscapes are ill-equipped to manage the fluidity of generative AI. Agencies like the FDA and NIH have begun drafting guidelines, but existing frameworks often fail to distinguish AI used as a therapeutic intervention from AI used as a research tool. Clarifying this distinction is critical: oversight for AI-driven diagnostics demands different rigor than oversight for AI-optimized trial logistics. The FDA’s recent establishment of the CDER AI Council marks progress, emphasizing harmonized standards for AI-enabled medical products. Yet global consistency remains elusive, risking fragmented adoption.

International consensus guidelines, such as SPIRIT-AI and CONSORT-AI, provide foundational standards for protocol development and reporting. These frameworks prioritize transparency in training data, model architecture, and performance metrics—elements essential for replicability. However, their voluntary nature limits enforcement, and adoption lags behind technological advances. Mandating adherence through funding requirements or publication standards could accelerate compliance, though resistance from proprietary-focused entities poses a hurdle.

Risk stratification must guide regulatory priorities. Low-stakes applications, like automated document generation, warrant streamlined approval processes to avoid stifling efficiency gains. Conversely, AI tools influencing clinical endpoints or patient eligibility require rigorous validation, akin to pharmaceutical trials. Dynamic regulatory approaches, where oversight escalates with potential harm, could balance safety with agility. For example, real-world performance monitoring post-deployment—algorithmovigilance—complements premarket evaluations, ensuring models adapt to evolving clinical contexts.
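The post-deployment monitoring described above can be pictured as a rolling comparison between a model's recent performance and its premarket baseline. The following is a minimal sketch under stated assumptions: the class name, the accuracy metric, the 5-point margin, and the window size are hypothetical, and a real algorithmovigilance program would track richer metrics and subgroup performance.

```python
from collections import deque

class PerformanceMonitor:
    """Tracks rolling accuracy over the most recent `window` predictions
    and flags the model for review when it drops below baseline - margin."""

    def __init__(self, baseline_accuracy, margin=0.05, window=100):
        self.baseline = baseline_accuracy
        self.margin = margin
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        # Only judge once the window is full, to avoid alerts on tiny samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.margin

# Hypothetical stream: 7 correct and 3 incorrect predictions against a 0.90 baseline.
monitor = PerformanceMonitor(baseline_accuracy=0.90, margin=0.05, window=10)
for predicted, observed in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(predicted, observed)
flagged = monitor.needs_review()
```

In a tiered regime, the margin and window could plausibly be tightened for higher-risk tools, so that oversight escalates with potential harm.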

Intellectual property conflicts further complicate regulation. Open-source AI platforms democratize access but risk inconsistent quality control, while proprietary systems may hoard data critical for independent validation. Hybrid models, where core algorithms are transparent but proprietary enhancements remain protected, could satisfy both innovation and accountability. Regulatory bodies must also address data sovereignty issues, particularly when multinational trials utilize AI trained on globally sourced datasets.

The iterative nature of AI development demands equally adaptive governance. Static regulations risk obsolescence as models evolve. Collaborative forums, uniting regulators, ethicists, and developers, can foster anticipatory guidance—preemptively addressing emergent risks like deepfakes in patient consent videos or synthetic data contamination. International treaties, similar to climate accords, may be necessary to enforce ethical standards across borders, ensuring generative AI serves as a unifying force in global health rather than a divisive tool.

Building Trust in Algorithmic Decision-Making

Trust in generative AI hinges on transparency, reliability, and inclusivity. The National Institute of Standards and Technology outlines seven characteristics of trustworthy AI: validity and reliability, safety, security and resilience, accountability and transparency, explainability and interpretability, privacy, and fairness. Achieving these in clinical research requires dismantling the “black box” perception of AI through explainable models that delineate decision pathways. For instance, AI-generated trial recommendations should be accompanied by confidence intervals and data lineage reports, enabling researchers to assess algorithmic reasoning.
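One way to picture a recommendation that carries both a confidence interval and a lineage record is sketched below, using a percentile bootstrap over a set of model-estimated response rates. The values, the dataset name, and the field names are invented for illustration, and the percentile bootstrap is one common choice of interval method, not one prescribed by the source.

```python
import random
import statistics

def bootstrap_ci(values, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile-bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)  # fixed seed so the report is reproducible
    n = len(values)
    means = sorted(
        statistics.fmean(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper

# Hypothetical per-site response-rate estimates produced by a model.
values = [0.62, 0.71, 0.58, 0.66, 0.74, 0.69, 0.61, 0.65]
estimate = statistics.fmean(values)
lower, upper = bootstrap_ci(values)

# Hypothetical report structure pairing the estimate with its provenance.
recommendation = {
    "estimate": estimate,
    "ci_95": (lower, upper),
    "lineage": {"dataset": "hypothetical_cohort_v1", "n_records": len(values)},
}
```

Pairing the interval with a lineage record lets a reviewer see both how uncertain the estimate is and which data it was derived from.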

Participant trust is equally paramount. Communities historically marginalized in clinical research—particularly racial minorities and low-income populations—may view AI with skepticism, fearing perpetuation of systemic biases. Engaging these groups through community advisory boards ensures AI tools are co-designed with end-users, embedding cultural competence into algorithms. Demonstrating tangible benefits, such as AI-driven recruitment strategies that prioritize inclusivity, can rebuild trust while advancing equity.

Transparency extends beyond technical audits to encompass communication. Participants interacting with AI interfaces should be informed of the technology’s role, limitations, and data usage in accessible language. Informed consent processes must clarify whether AI influences trial design or personalizes interventions, avoiding deceptive anthropomorphization of chatbots. Institutional review boards, traditionally focused on human-subject risks, now require expertise to evaluate AI’s ethical implications, necessitating interdisciplinary training.

Independent auditing frameworks provide another trust cornerstone. Third-party evaluators can assess models for bias, security vulnerabilities, and adherence to ethical guidelines pre- and post-deployment. The proposed three-layered audit system—evaluating governance, model performance, and application-specific outputs—offers a holistic approach. Publicly accessible audit results, anonymized to protect proprietary details, foster accountability while educating the broader research community.

Ultimately, trust is cultivated through consistency. AI systems must demonstrate reliability across diverse populations and evolving medical knowledge. Continuous monitoring pipelines, paired with rapid error-correction mechanisms, sustain confidence as models adapt. This dynamic trust-building mirrors the scientific method itself—iterative, evidence-based, and open to scrutiny—aligning AI’s trajectory with the foundational ethos of clinical research.

Strategic Roadmaps for AI Integration

Operationalizing generative AI in clinical research demands systematic process mapping. The Dilts and Sandler framework, which dissects clinical trials into 700+ steps, identifies AI’s role in automating administrative tasks (e.g., document translation) and redefining core processes (e.g., dynamic consent). Prioritizing low-risk, high-impact applications—such as AI-generated patient newsletters—builds institutional familiarity while delivering immediate efficiencies.

Cross-sector collaboration accelerates responsible adoption. Pharma companies, academic institutions, and regulatory bodies must co-develop benchmarks for AI validation, sharing datasets and tools through open-access platforms. Initiatives like the AllianceChicago study, where AI chatbots improved engagement in underserved communities, exemplify how pilot projects can demonstrate feasibility while addressing ethical concerns. Such collaborations also distribute costs, mitigating financial barriers for smaller entities.

Education forms another strategic pillar. Clinicians and researchers need training in AI literacy—understanding model limitations, interpreting outputs, and recognizing ethical red flags. Certification programs, integrated into medical curricula and continuing education, can standardize competencies. Simultaneously, AI developers must immerse themselves in clinical workflows to avoid solutionism, ensuring tools address genuine needs rather than hypothetical efficiencies.

Regulatory agility is critical. “Sandbox” environments, where AI tools are tested under provisional approvals, allow real-world validation without compromising safety. These controlled settings enable regulators to observe performance, iterate on guidelines, and identify unforeseen risks. Parallel advancements in AI-specific insurance models could mitigate liability concerns, encouraging innovation while protecting participants.

Lastly, global equity must anchor integration strategies. Low-resource settings, often excluded from AI innovation, stand to benefit enormously from generative tools that compensate for infrastructural gaps. International partnerships, funded by high-income countries and NGOs, can deploy AI-driven trial platforms in regions lacking traditional research infrastructure. This democratizes participation, enriches dataset diversity, and aligns with ethical imperatives for inclusive progress.

Toward an Equitable Future in Medical Innovation

Generative AI’s promise in clinical research is tempered by the imperative to prioritize equity. Current trials frequently underrepresent women, ethnic minorities, and socioeconomically disadvantaged groups—a disparity AI could exacerbate or alleviate. Proactive measures, such as bias-aware recruitment algorithms and synthetic data augmentation for rare demographics, can counteract historical exclusion. However, these require deliberate design choices, underscoring the need for diverse teams in AI development.

Community-centric AI design ensures technologies reflect the values and needs of end-users. Participatory frameworks, where patients co-create engagement tools or consent processes, embed equity into the research lifecycle. This approach also educates communities about AI’s role, demystifying its operations and fostering grassroots advocacy for ethical practices.

Global governance must counteract the concentration of AI expertise in high-income countries. International funding pools, supporting AI infrastructure in low-resource regions, prevent a “digital divide” in clinical research. Open-source repositories for AI models, paired with capacity-building initiatives, empower local researchers to adapt tools to regional health priorities—from malaria to maternal mortality.

The path forward demands vigilance. As generative AI permeates clinical research, continuous evaluation of its societal impact is non-negotiable. Longitudinal studies tracking AI’s influence on trial diversity, patient outcomes, and healthcare disparities will inform iterative refinements. Ethicists and technologists must collaborate to anticipate emergent dilemmas, ensuring today’s innovations don’t become tomorrow’s inequities.

In conclusion, generative AI stands at the nexus of scientific ambition and ethical responsibility. Its capacity to redefine clinical research is unparalleled, yet realizing this potential requires a commitment to transparency, inclusivity, and adaptive governance. By embedding these principles into every algorithm and collaboration, the scientific community can harness AI not merely as a tool, but as a catalyst for a more equitable and transformative era in medicine.

Study DOI: https://doi.org/10.1016/j.jacadv.2025.101593

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE
