Search for Synthetic Accessibility in Drug Discovery

The synthesis of drug-like molecules remains a pivotal challenge in pharmaceutical research, where the balance between molecular innovation and practical feasibility dictates the trajectory of drug development. Synthetic accessibility (SA) serves as a critical metric, influencing decisions from hit selection to lead optimization. Yet, quantifying this parameter has historically relied on the subjective judgment of seasoned chemists, introducing variability rooted in individual expertise and experience. This variability becomes particularly problematic when prioritizing thousands of molecules in high-throughput screening or de novo design, necessitating a standardized, computational approach.

Traditional methods for assessing SA fall into two categories: complexity-based algorithms and retrosynthetic analysis. Complexity-based models penalize intricate structural features such as spiro rings, stereocenters, or macrocycles, while retrosynthetic tools search for multi-step routes back to available starting materials. Retrosynthetic tools, though theoretically robust, demand extensive reaction databases and considerable computation, which makes them impractical for scoring very large compound sets. This gap underscores the need for a hybrid model that combines historical synthetic data with molecular complexity metrics, offering both speed and accuracy.

Enter the synthetic accessibility score (SAscore), a framework designed to reconcile these competing priorities. By combining structural patterns from roughly a million already synthesized compounds with complexity-driven penalties, SAscore provides a scalable way to rank molecules. It encodes the synthetic knowledge accumulated in compound databases in an algorithmic form that does not depend on any individual chemist's bias, thereby streamlining early-stage drug discovery.

Bridging Historical Knowledge and Molecular Complexity

At the core of SAscore lies a dual-component architecture: fragment contributions and molecular complexity. The fragment score derives from a statistical analysis of roughly one million representative PubChem structures, using the frequency of each substructure as a proxy for synthetic tractability. Common fragments (methyl groups, aromatic rings, or ester linkages) receive favorable scores, reflecting their prevalence in existing compounds. Rare or exotic motifs, by contrast, signal synthetic challenges and penalize molecules that deviate from established chemical “building blocks.”

Molecular complexity, the second pillar, quantifies structural features that hinder synthesis. Ring systems, stereochemistry, macrocycles, and overall size each contribute a penalty. Spiro atoms and bridged ring fusions raise the ring-complexity term, a stereocomplexity term grows with the number of stereocenters, macrocycles (rings with more than eight members) incur an additional penalty, and a size term grows with the atom count. In the published formulation the ring, stereo, and macrocycle terms are logarithmic in their counts, so each additional feature adds less than the one before. Together these terms mimic a chemist’s intuitive assessment, translating qualitative judgments into quantifiable terms.
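The complexity terms described above can be sketched in a few lines. This is a minimal illustration, assuming the structural counts have already been obtained from a cheminformatics toolkit; the `ComplexityCounts` container and the function name are hypothetical, and the use of base-10 logarithms is an assumption, since the published paper writes only "log".

```python
import math
from dataclasses import dataclass


@dataclass
class ComplexityCounts:
    """Structural counts for one molecule (hypothetical container)."""
    n_atoms: int
    n_stereocenters: int
    n_spiro_atoms: int
    n_bridgehead_atoms: int
    n_macrocycles: int  # rings with more than eight members


def complexity_penalty(c: ComplexityCounts) -> float:
    """Sum of size, stereo, ring, and macrocycle penalties (larger = harder)."""
    # Size term: grows slightly faster than linearly with atom count.
    size = c.n_atoms ** 1.005 - c.n_atoms
    # Logarithmic terms: each extra feature adds less than the previous one.
    stereo = math.log10(c.n_stereocenters + 1)
    ring = math.log10(c.n_spiro_atoms + 1) + math.log10(c.n_bridgehead_atoms + 1)
    macro = math.log10(c.n_macrocycles + 1)
    return size + stereo + ring + macro
```

A molecule with several stereocenters and a spiro junction receives a larger penalty than a flat aromatic compound of the same size, which is the behavior the text describes.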

Combining these two components, fragment frequency and complexity, yields a score between 1 (easy to synthesize) and 10 (very difficult to synthesize). Because it draws on both historical data and structural analysis, SAscore captures what has been made before as well as what looks hard to make, giving a more complete view of molecular feasibility than either component alone.

Algorithmic Foundations of SAscore

The SAscore algorithm begins by fragmenting molecules into extended connectivity fragments (ECFC_4): atom-centered substructures that extend up to two bonds from the central atom, for a diameter of four bonds. For example, aspirin yields fragments highlighting its acetyloxy and benzoic acid groups. Each fragment’s contribution is derived from its frequency in PubChem, with common motifs receiving positive contributions and rare ones negative contributions. The contribution is the logarithm of the fragment’s frequency relative to a reference frequency, and a molecule’s fragment score is the sum of its fragment contributions divided by the number of fragments, so that large molecules are not favored simply for containing more fragments.
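The frequency-to-contribution step can be illustrated with a toy fragment table. The sketch below is not the published implementation: fragment names stand in for fingerprint identifiers, the counts are invented for illustration, the reference frequency is taken as the count of the fragment at which the most common fragments cover 80% of all occurrences, and the default value for unseen fragments is an assumption.

```python
import math
from typing import Dict, Iterable


def fragment_contributions(counts: Dict[str, int],
                           coverage: float = 0.8) -> Dict[str, float]:
    """Log-ratio contribution of each fragment relative to a reference count."""
    total = sum(counts.values())
    running = 0
    reference = 1
    # Walk fragments from most to least common until `coverage` is reached.
    for count in sorted(counts.values(), reverse=True):
        running += count
        reference = count
        if running >= coverage * total:
            break
    return {frag: math.log10(count / reference) for frag, count in counts.items()}


def fragment_score(fragments: Iterable[str],
                   contributions: Dict[str, float],
                   unseen: float = -4.0) -> float:
    """Mean contribution over a molecule's fragments (assumed unseen default)."""
    frags = list(fragments)
    if not frags:
        raise ValueError("molecule has no fragments")
    return sum(contributions.get(f, unseen) for f in frags) / len(frags)


# Invented counts, for illustration only.
toy_counts = {"methyl": 700, "phenyl": 200, "ester": 90, "spiroketal": 10}
toy_contributions = fragment_contributions(toy_counts)
```

With these toy counts, fragments more common than the reference get positive contributions and rarer ones negative, and dividing by the fragment count keeps molecules of different sizes comparable.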

Complexity penalties are computed from a small set of structural counts. Ring systems are analyzed for spiro atoms and bridged fusions, with penalties that grow logarithmically with the number of such atoms. Stereocenters and macrocycles contribute further logarithmic penalties, while the size penalty increases slightly faster than linearly with atom count. The combined penalty is subtracted from the fragment score, and the result is scaled to the 1–10 range.
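The final combination and rescaling might look like the sketch below. The linear mapping and the bounds `raw_min` and `raw_max` are assumptions chosen for illustration; the source states only that the penalty is subtracted from the fragment score and the result is scaled to 1–10.

```python
def scale_to_sascore(fragment_score: float,
                     complexity_penalty: float,
                     raw_min: float = -4.0,
                     raw_max: float = 2.5) -> float:
    """Map (fragment score - complexity penalty) onto 1 (easy) .. 10 (hard)."""
    if raw_max <= raw_min:
        raise ValueError("raw_max must be greater than raw_min")
    raw = fragment_score - complexity_penalty
    # High raw values (common fragments, low complexity) map to low scores.
    fraction = (raw_max - raw) / (raw_max - raw_min)
    # Clamp so that out-of-range molecules still land inside 1..10.
    return min(10.0, max(1.0, 1.0 + 9.0 * fraction))
```

Clamping is a design choice here: it keeps extreme molecules on the scale at the cost of compressing differences among the very hardest structures.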

Implementation leverages cheminformatics tools like Pipeline Pilot, enabling rapid processing of large datasets. The algorithm’s modular design allows adaptation across platforms, ensuring compatibility with diverse drug discovery workflows. By avoiding reliance on proprietary reaction databases, SAscore remains both agile and accessible, democratizing synthetic feasibility analysis for academic and industrial researchers alike.

Validation Through Expert Consensus

To validate SAscore, 40 molecules spanning a range of sizes and complexities were evaluated by nine medicinal chemists. Participants rated each compound on a scale of 1–10, and the averaged ratings were compared with SAscore predictions. The agreement was strong: SAscore explained nearly 90% of the variance in the averaged human assessments (r² of about 0.89), demonstrating its alignment with expert intuition.
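The comparison described here, averaging the chemists' ratings per molecule and computing the squared Pearson correlation with the computed scores, can be sketched as follows. The function names are hypothetical and no data from the study is reproduced.

```python
from typing import List, Sequence


def mean_ratings(ratings: Sequence[Sequence[float]]) -> List[float]:
    """Average per molecule; `ratings[i][j]` is chemist i's rating of molecule j."""
    n_chemists = len(ratings)
    return [sum(column) / n_chemists for column in zip(*ratings)]


def r_squared(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Squared Pearson correlation between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy * sxy / (sxx * syy)
```

Calling `r_squared(mean_ratings(ratings), sa_scores)` on the nine-by-forty rating matrix would give the fraction of variance in the averaged ratings that the score explains.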

Notably, chemists showed high consensus at the extremes (very simple or very complex molecules) but diverged on intermediate cases. This variability mirrors earlier studies, where individual backgrounds and project experiences colored judgments. Because SAscore applies the same criteria to every molecule, it is particularly useful in these mid-range cases, where human judgments disagree most.

The validation also illuminated SAscore’s components: complexity penalties correlated more strongly with human rankings than fragment scores alone. This suggests that while historical data informs synthetic feasibility, structural complexity remains the primary driver of perceived difficulty—a finding with profound implications for molecular design.

Case Studies and Divergent Insights

Discrepancies between SAscore and human evaluators offer valuable insights. One symmetrical molecule was scored as highly complex by the algorithm but deemed accessible by chemists, who recognized symmetry’s simplifying role. Conversely, a tetracyclic scaffold with 39,000 PubChem analogs received high complexity ratings from chemists, even though its prevalence suggests it is synthetically tractable via Diels-Alder reactions.

These cases underscore SAscore’s strengths and limitations. While the algorithm excels at identifying rare or topologically complex motifs, it overlooks heuristic factors like symmetry or modular synthesis pathways. Future iterations may incorporate symmetry detection or reaction-aware fragment analysis to bridge this gap.

The most frequent fragments—methyl, methoxy, and aromatic rings—reflect their ubiquity in commercial building blocks and linkage reactions. Their prevalence aligns with RECAP cleavage patterns, validating SAscore’s ability to encode synthetic “common sense” without explicit reaction data.

Navigating the Limits and Future Horizons of SAscore

While SAscore represents a significant advance in computational synthetic accessibility assessment, it has clear limitations. One blind spot is molecular symmetry. Symmetrical structures often simplify synthesis by reducing the number of unique steps or intermediates required, yet the algorithm does not account for this. In the validation set, a symmetrical compound received a high SAscore because of its size and ring systems, but chemists rated it as synthetically accessible, recognizing that symmetry could streamline its assembly. A symmetry detection step could balance topological complexity against the synthetic shortcuts symmetry provides.

A second limitation is SAscore’s reliance on a static fragment library, which risks overlooking emerging synthetic methodologies. As new reactions (e.g., photoredox catalysis or enzymatic cascades) redefine what is “synthetically feasible,” periodic updates to the PubChem-derived fragment database will be needed to keep the score relevant.

Another challenge is that the algorithm cannot take modular synthesis routes into account. While SAscore penalizes rare fragments, it does not distinguish between fragments that are inherently difficult to synthesize and those that are readily available as commercial building blocks. For instance, a complex spirocyclic core might receive a high complexity penalty, but if it can be purchased as a ready-made reagent, its actual synthetic burden is much lower. Closing this gap would require data on reagent availability, for example from chemical supplier catalogs. Furthermore, the current model treats all stereocenters equally, regardless of how they would be introduced. In practice, some stereocenters are inherited from inexpensive chiral starting materials or set by stereochemistry already present in the molecule, while others demand intricate enantioselective steps. A weighted stereocomplexity metric, informed by reaction mechanisms, could refine this aspect.

Looking ahead, the integration of machine learning (ML) offers a promising avenue for enhancing SAscore’s predictive power. Training ML models on historical synthesis data—such as reaction yields, step counts, or even failed attempts—could uncover hidden relationships between molecular features and synthetic feasibility. For example, neural networks might identify that certain ring fusions, though topologically complex, are routinely accessed via high-yield cycloadditions. Collaborative initiatives like the Open Reaction Database could provide the training data needed for such advancements. Meanwhile, hybrid approaches that combine SAscore with retrosynthetic planning tools (e.g., IBM RXN or ASKCOS) may offer a “best of both worlds” solution: rapid prioritization via SAscore followed by detailed pathway analysis for top candidates. Ultimately, the goal is not to replace chemists’ expertise but to augment it, creating a dynamic feedback loop where computational predictions inform human decisions, and human insights refine algorithmic models.

Implications for Modern Drug Development

SAscore’s utility extends across the drug discovery pipeline. In compound acquisition, it prioritizes synthetically feasible candidates, reducing attrition downstream. For hit triage, it identifies screening hits amenable to rapid analog synthesis, accelerating structure-activity relationship (SAR) exploration. In de novo design, it steers generative algorithms toward plausible structures, avoiding molecular “fantasies” divorced from synthetic reality.

Yet SAscore is no panacea. It cannot replace retrosynthetic tools for critical candidate selection, nor replicate the nuanced judgment of veteran chemists. Its true value lies in scalability—processing millions of molecules to spotlight promising candidates for further scrutiny.
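The two-stage use described here, a fast score over everything followed by closer scrutiny of a short list, can be sketched as a simple filter-and-rank step. The function name, threshold, and molecule identifiers are hypothetical; the scores are assumed to have been computed beforehand.

```python
import heapq
from typing import Dict, List, Tuple


def triage(scores: Dict[str, float],
           max_score: float,
           top_n: int) -> List[Tuple[str, float]]:
    """Return up to `top_n` molecules with the lowest scores at or below `max_score`."""
    feasible = [(name, score) for name, score in scores.items() if score <= max_score]
    # nsmallest avoids sorting the full list when only a short list is needed.
    return heapq.nsmallest(top_n, feasible, key=lambda item: item[1])
```

The short list returned by `triage` is what would then be handed to a retrosynthetic planner or to a chemist for review, keeping the expensive step confined to a small number of candidates.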

As synthetic methodologies evolve, so too must SAscore. The rise of biocatalysis and flow chemistry demands periodic updates to fragment libraries and complexity metrics. By embedding adaptability into its framework, SAscore promises to remain a cornerstone of computational drug discovery, bridging the gap between innovation and practicality in the quest for new medicines.

Study DOI: https://doi.org/10.1186/1758-2946-1-8

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

Medicinal Chemistry & Pharmacology

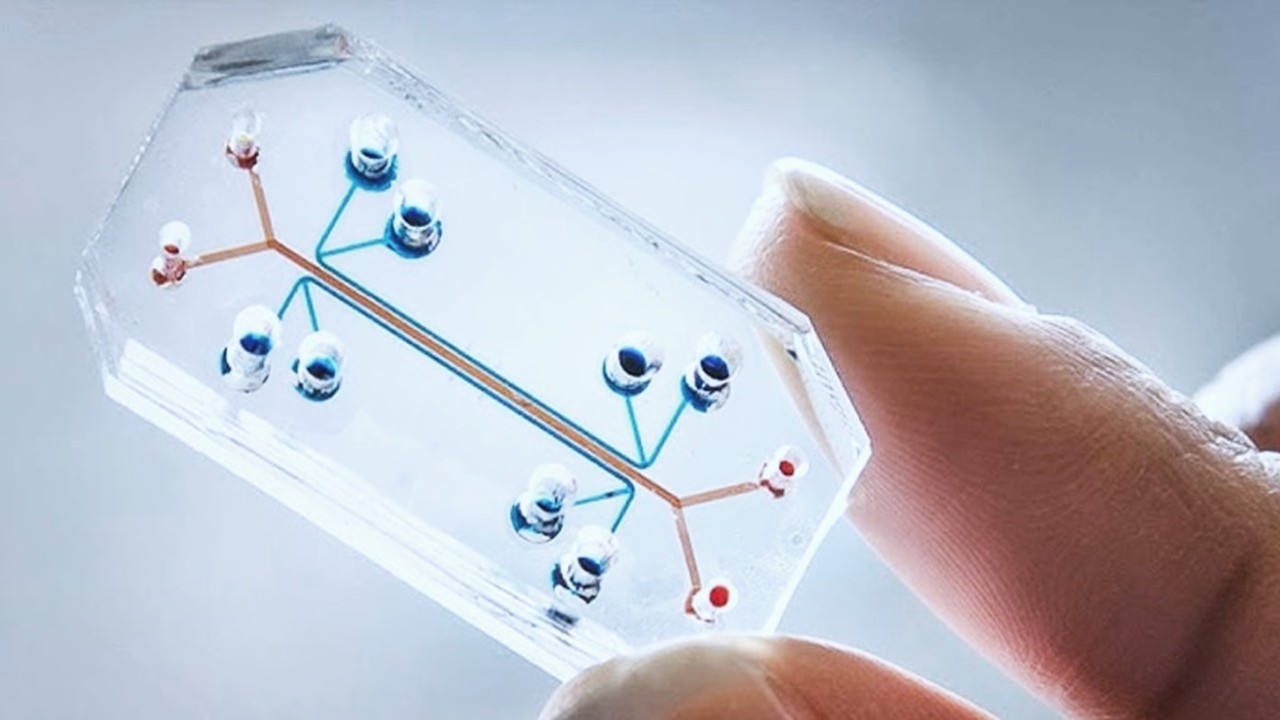

Invisible Couriers: How Lab-on-Chip Technologies Are Rewriting the Future of Disease Diagnosis

The shift from benchtop Western blots to on-chip, real-time protein detection represents more than just technical progress—it is a shift in epistemology.

Medicinal Chemistry & Pharmacology

Designing Better Sugar Stoppers: Engineering Selective α-Glucosidase Inhibitors via Fragment-Based Dynamic Chemistry

One of the most pressing challenges in anti-diabetic therapy is reducing the unpleasant and often debilitating gastrointestinal side effects that accompany α-amylase inhibition.

Medicinal Chemistry & Pharmacology

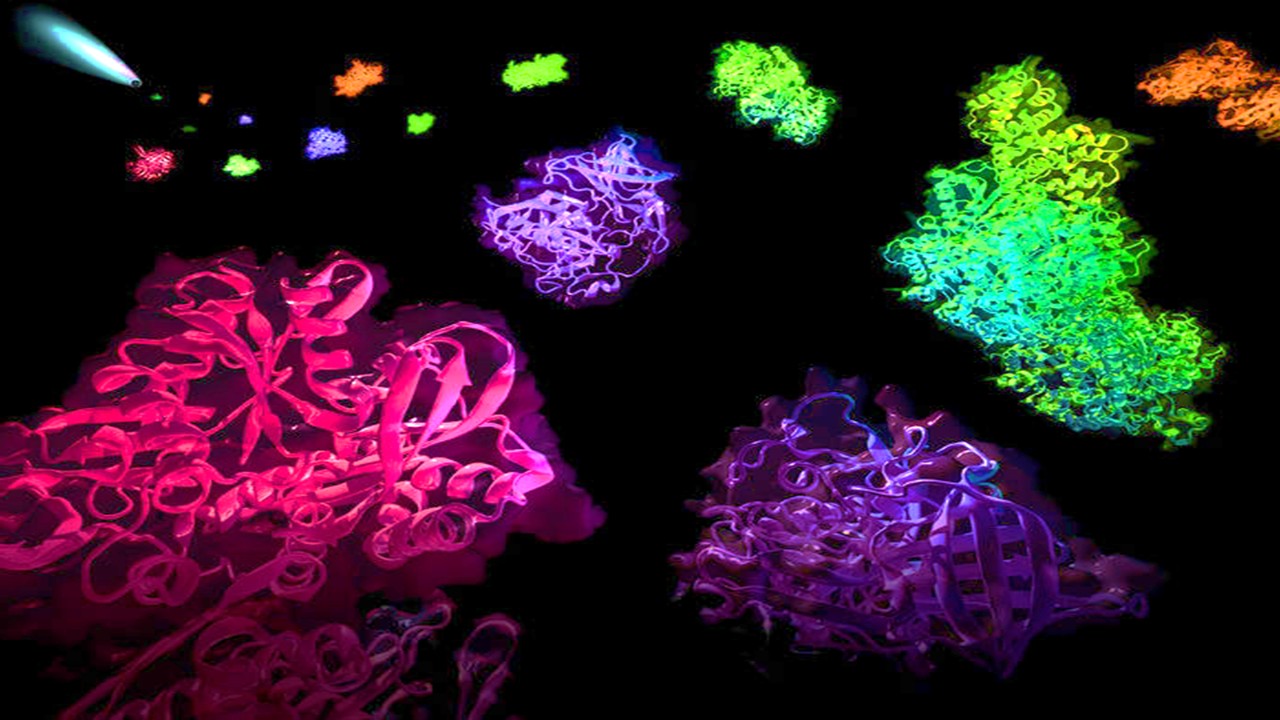

Into the Genomic Unknown: The Hunt for Drug Targets in the Human Proteome’s Blind Spots

The proteomic darkness is not empty. It is rich with uncharacterized function, latent therapeutic potential, and untapped biological narratives.

Medicinal Chemistry & Pharmacology

Aerogel Pharmaceutics Reimagined: How Chitosan-Based Aerogels and Hybrid Computational Models Are Reshaping Nasal Drug Delivery Systems

Simulating with precision and formulating with insight, the future of pharmacology becomes not just predictive but programmable, one cell at a time.

Read More Articles

Myosin’s Molecular Toggle: How Dimerization of the Globular Tail Domain Controls the Motor Function of Myo5a

Myo5a exists in either an inhibited, triangulated rest or an extended, motile activation, each conformation dictated by the interplay between the GTD and its surroundings.