Emergence of AI in Clinical Research: From Theory to Practice

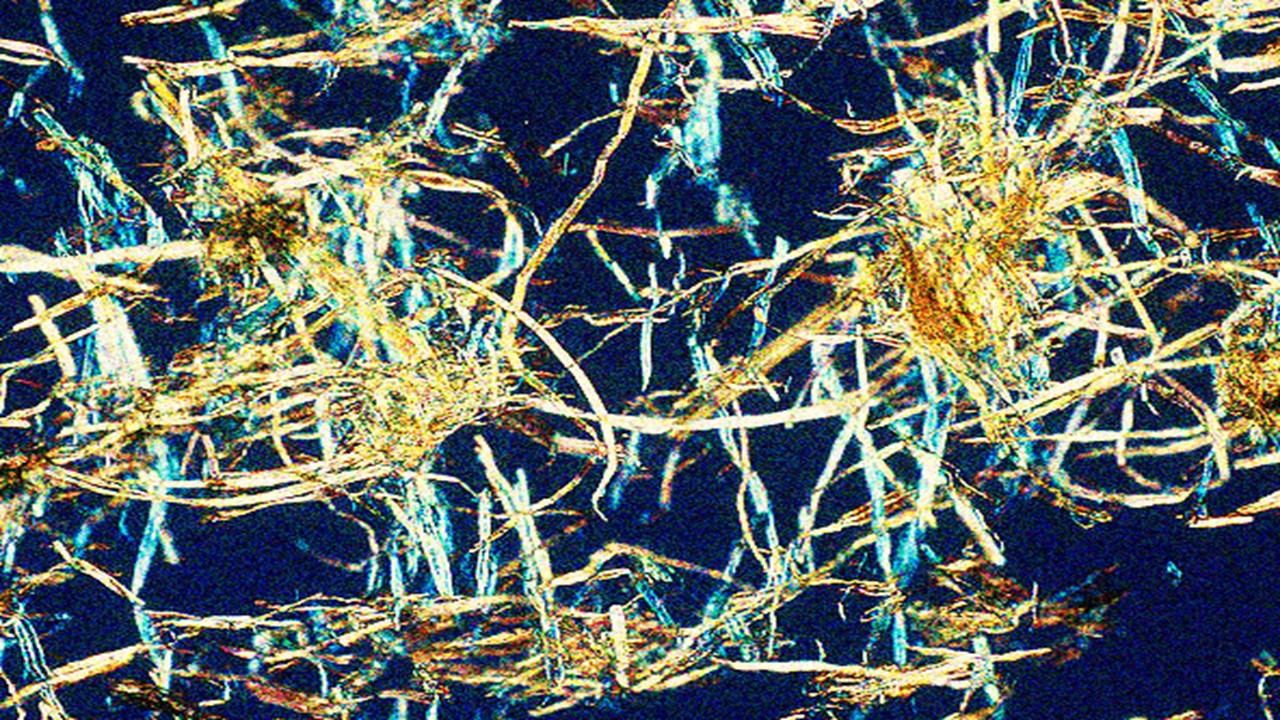

The integration of artificial intelligence (AI) into clinical trials marks a paradigm shift in how researchers approach participant recruitment and trial design. Defined by its capacity to emulate human cognitive functions—problem-solving, pattern recognition, and adaptive learning—AI spans technologies like natural language processing (NLP), machine learning (ML), and deep neural networks. These tools are increasingly deployed to parse complex datasets, from electronic health records (EHRs) to clinical trial registries, enabling rapid identification of eligible participants and real-time risk management.

Historically, clinical trials have been hampered by inefficiencies: manual screening processes, prolonged timelines, and high dropout rates. AI promises to address these challenges by automating eligibility assessments, predicting patient enrollment trajectories, and optimizing trial protocols. For instance, NLP algorithms can extract eligibility criteria from unstructured trial documents, transforming free-text entries into standardized formats. This capability was exemplified by the Clinical Trial Knowledge Base, which codified over 350,000 trials from ClinicalTrials.gov, streamlining cross-trial comparisons.
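
As a rough illustration of turning free-text eligibility criteria into a standardized form, the sketch below uses regular expressions to pull an age range and a single-sex restriction out of a criteria string, then checks a patient record against the result. The function names, field names, and patterns are hypothetical and chosen for this example only; this is not how the Clinical Trial Knowledge Base is implemented, and real trial documents need much more robust NLP than two patterns.

```python
import re

# Hypothetical pattern for phrases such as "aged 18 to 65 years" or "18-65 years".
AGE_RANGE = re.compile(r"(\d{1,3})\s*(?:-|to)\s*(\d{1,3})\s*years", re.IGNORECASE)
# Hypothetical pattern for a single-sex restriction such as "female patients only".
SEX_ONLY = re.compile(r"\b(male|female)\b[^.;]*\bonly\b", re.IGNORECASE)


def parse_criteria(text):
    """Return a dict with the structured fields found in free-text criteria."""
    structured = {"min_age": None, "max_age": None, "sex": "all"}
    age = AGE_RANGE.search(text)
    if age:
        structured["min_age"] = int(age.group(1))
        structured["max_age"] = int(age.group(2))
    sex = SEX_ONLY.search(text)
    if sex:
        structured["sex"] = sex.group(1).lower()
    return structured


def is_eligible(patient, criteria):
    """Check a patient record (assumed keys: age, sex) against parsed criteria."""
    if criteria["min_age"] is not None and patient["age"] < criteria["min_age"]:
        return False
    if criteria["max_age"] is not None and patient["age"] > criteria["max_age"]:
        return False
    return criteria["sex"] in ("all", patient["sex"])


criteria = parse_criteria("Female patients only, aged 18 to 65 years.")
print(criteria)
print(is_eligible({"age": 40, "sex": "female"}, criteria))
```

Criteria the patterns do not recognize are left at their permissive defaults, which is a deliberate choice for a prescreening aid: unparsed text should send a record to human review rather than silently exclude a patient.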

The momentum behind AI-driven recruitment is bolstered by regulatory openness. Agencies recognize AI’s potential to disrupt traditional methodologies while emphasizing the need for strategic implementation frameworks. However, the transition from experimental algorithms to real-world applications remains nascent. Early successes, such as AI tools reducing prescreening timelines from months to minutes, highlight transformative potential but also underscore the necessity of rigorous validation.

Ethical considerations loom large. As algorithms process sensitive health data, concerns about privacy breaches, algorithmic bias, and informed consent mechanisms demand proactive solutions. The absence of universal guidelines for AI deployment in trials further complicates adoption, necessitating interdisciplinary collaboration among technologists, ethicists, and clinicians.

This scoping review, encompassing 51 studies published between 2004 and 2023, synthesizes the current landscape of AI in trial recruitment. It reveals a field in flux, marked by technological promise, methodological heterogeneity, and unresolved ethical dilemmas, and charts a path toward responsible innovation.

Oncology Dominance and Algorithmic Diversity: Study Characteristics

Oncology emerges as the foremost domain for AI applications in clinical trials, accounting for over a quarter of reviewed studies. Cancer trials, with their complex eligibility criteria and urgent need for precision, benefit uniquely from AI’s ability to mine multimodal data—genomic profiles, imaging studies, and treatment histories. Tools like Watson for Clinical Trial Matching (WCTM) exemplify this trend, leveraging NLP to align patient data with trial protocols, thereby accelerating enrollment in precision oncology initiatives.

Geographically, the United States leads AI-driven trial innovation, contributing 72% of studies. This concentration reflects robust infrastructure for digital health research, including interoperable EHR systems and federal funding initiatives. Other regions, such as China and the European Union, show growing engagement, though scalability remains constrained by fragmented data ecosystems and regulatory variability.

Methodologically, cohort studies and non-randomized controlled trials dominate the evidence base. These designs prioritize observational data from EHRs, reflecting AI’s reliance on real-world evidence for training predictive models. However, the predominance of retrospective analyses raises questions about generalizability, particularly when algorithms are applied to populations underrepresented in training datasets.

A taxonomy of AI tools reveals four categories: commercial medical platforms (e.g., Mendel.ai), traditional statistical models, deep learning architectures, and hybrid systems. Traditional models, such as logistic regression, remain prevalent due to their interpretability, while deep learning approaches gain traction for handling unstructured data. NLP features prominently across categories, enabling extraction of clinical concepts from narrative notes and trial documents.

Despite this diversity, a critical gap persists: no reviewed study addressed participant retention. While AI excels at recruitment, its potential to mitigate attrition—through predictive analytics or remote monitoring—remains unexplored. This omission highlights a systemic focus on enrollment efficiency over long-term engagement, a blind spot in AI’s clinical trial portfolio.

Balancing Efficiency and Ethical Risk: Benefits vs. Drawbacks

AI’s value proposition in recruitment hinges on efficiency gains. By automating data extraction and eligibility screening, tools like WCTM reduce manual chart reviews by 85%, slashing prescreening timelines from weeks to hours. Such efficiency not only accelerates trial initiation but also lowers operational costs, a boon for underfunded research areas. Predictive analytics further enhance precision, identifying the patients most likely to benefit from experimental therapies while minimizing enrollment of unsuitable candidates.

Patient-centric benefits include improved access to trial opportunities. AI-powered platforms can match individuals to trials based on real-time health data, democratizing access for geographically dispersed populations. Remote consent and monitoring tools, though nascent, promise to reduce logistical burdens on participants, fostering higher satisfaction and engagement. User-friendly interfaces, such as chatbots and patient portals, bridge health literacy gaps, empowering individuals to navigate trial options autonomously.

Yet these advantages are tempered by significant drawbacks. AI’s reliance on historical data entrenches biases: algorithms trained on homogeneous populations may exclude minority groups, exacerbating health disparities. For example, models optimized for breast cancer trials often underperform in cohorts with rare subtypes or demographic variability. Data quality issues—missing entries, coding errors—compound inaccuracies, risking inappropriate exclusions or inclusions.

Algorithmic opacity poses another challenge. Deep learning models, while powerful, operate as “black boxes,” obscuring the rationale behind patient-trial matches. This lack of transparency complicates clinician buy-in and regulatory scrutiny. Furthermore, the absence of standardized validation protocols means performance metrics vary widely across studies, hindering cross-tool comparisons.

Cost presents a paradoxical barrier. While AI reduces long-term expenses, initial investments in infrastructure and training deter resource-limited institutions. Ethical quandaries, such as data ownership and informed consent in AI-augmented trials, further stall adoption. These issues underscore the need for balanced frameworks that harness AI’s efficiency without compromising equity or accountability.

The Black Box Conundrum: Technical Facilitators and Barriers

Technically, AI’s strengths lie in its scalability and adaptability. Machine learning models, particularly deep neural networks, thrive on large datasets, with performance typically improving as data volume grows, though with diminishing returns. Tools like CogStack demonstrate this by filtering ICU patient data in real time, flagging eligibility for sepsis trials via continuous EHR analysis. Such systems not only enhance recruitment precision but also enable dynamic protocol adjustments based on emerging data trends.

Interoperability with existing clinical systems is a key facilitator. Platforms integrating directly with EHRs minimize workflow disruptions, allowing clinicians to access AI recommendations within familiar interfaces. For example, algorithms embedded in oncology EHRs automatically alert providers to matching trials during patient visits, seamlessly blending AI into clinical decision-making. NLP further enhances interoperability by parsing unstructured notes, radiology reports, and genomic data into structured formats amenable to algorithmic processing.

However, technical barriers persist. The “black box” nature of deep learning complicates error detection and correction. When an AI system erroneously excludes eligible patients, tracing the fault requires labor-intensive audits of neural network layers—a process untenable in time-sensitive trials. Data scarcity compounds this issue; rare diseases or novel therapies lack sufficient training data, forcing reliance on synthetic datasets or transfer learning, which may not capture real-world complexity.

Integration challenges extend to legacy systems. Many hospitals still rely on fax-based communication and non-digital records, creating data silos that hinder AI’s data aggregation capabilities. Even when data are digitized, variability in coding practices—such as inconsistent use of SNOMED-CT or ICD-10 codes—limits algorithmic generalizability across institutions.

Security concerns add another layer of complexity. AI systems processing PHI (protected health information) must comply with HIPAA and GDPR, necessitating robust encryption and access controls. Yet, as shown in studies using federated learning, decentralized data architectures can mitigate risk by training models on local servers without transferring sensitive data. Such innovations exemplify the technical ingenuity required to align AI with clinical and ethical standards.
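
To make the federated idea concrete, here is a minimal sketch assuming federated averaging, one common scheme; the article does not say which method the reviewed studies used. Each site fits a tiny linear model on data that never leaves it, and only the fitted weights are sent back and averaged. All function names, the learning rate, and the toy data are hypothetical.

```python
def local_update(weights, data, lr=0.1, epochs=50):
    """Run gradient descent for y ~ w*x + b on one site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in data) / n
        grad_b = sum((w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(site_weights, site_sizes):
    """Average the site models, weighting each by its number of records."""
    total = sum(site_sizes)
    w = sum(wt[0] * n for wt, n in zip(site_weights, site_sizes)) / total
    b = sum(wt[1] * n for wt, n in zip(site_weights, site_sizes)) / total
    return (w, b)


def train(sites, rounds=20):
    """Coordinate training; the server only ever sees weights, not records."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Toy data: two hypothetical hospitals whose records follow y = 2x + 1.
hospital_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
hospital_b = [(1.0, 3.0), (3.0, 7.0)]
print(train([hospital_a, hospital_b]))
```

Keeping raw records local does not by itself guarantee privacy, since shared weights can still leak information; a real deployment would typically add safeguards such as secure aggregation on top of this pattern.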

Ethical Crossroads: Privacy, Bias, and Informed Consent

Ethical risks in AI-driven recruitment cluster around privacy, transparency, and justice. Privacy breaches are a serious threat, because algorithms often require access to granular health data (genetic markers, imaging studies, social determinants) to function optimally. Anonymization techniques, while standard, are increasingly vulnerable to re-identification attacks, prompting calls for differential privacy measures, which add calibrated statistical noise to query results or datasets and so mask individual contributions at a controlled cost to analytic utility.
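
The noise-adding idea can be sketched with the Laplace mechanism, a standard differential privacy technique, applied here to a simple counting query. This is an illustrative sketch only: the function names, the cohort, and the choice of epsilon are assumptions, and the source does not specify which mechanism any reviewed study used.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Noisy count of matching records; a counting query has sensitivity 1."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    # Noise scale = sensitivity / epsilon; smaller epsilon means more noise.
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical cohort: how many patients are 65 or older?
cohort = [{"age": a} for a in (34, 71, 66, 52, 80, 45)]
print(private_count(cohort, lambda r: r["age"] >= 65, epsilon=0.5))
```

The `epsilon` parameter makes the trade-off explicit: lowering it strengthens the privacy guarantee but widens the noise, so a query over a small cohort can become too noisy to use.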

Bias and discrimination emerge as pervasive concerns. AI models trained on skewed datasets—overrepresenting white, male, or urban populations—risk perpetuating these biases in trial enrollment. For instance, a cardiovascular trial algorithm prioritizing historical data from middle-aged men may exclude women presenting atypical symptom profiles, exacerbating gender disparities in cardiac research. Mitigating such bias requires proactive curation of diverse training data and algorithmic audits for fairness.
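
One simple form such an audit could take is comparing how often an algorithm matches patients from each demographic group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest; the function names and the example decisions are hypothetical, and equal selection rates are only one of several fairness criteria an audit might apply.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, matched) pairs; returns {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, matched in decisions:
        totals[group] += 1
        selected[group] += int(bool(matched))
    return {group: selected[group] / totals[group] for group in totals}


def parity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was matched in any group, so rates are equal
    return min(rates.values()) / highest


# Made-up audit log of (group, was the patient matched to the trial?).
log = (
    [("men", True)] * 8 + [("men", False)] * 2
    + [("women", True)] * 4 + [("women", False)] * 6
)
rates = selection_rates(log)
print(rates, parity_ratio(rates))
```

A low ratio does not prove the algorithm is unfair, because eligibility can legitimately differ between groups, but it marks where a closer review of the training data and matching logic is warranted.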

Informed consent processes must evolve to address AI’s role. Traditional consent forms rarely disclose algorithmic involvement, leaving participants unaware of how their data train or refine models. Dynamic consent frameworks, enabling real-time opt-outs and granular data permissions, offer a solution but demand robust digital infrastructure. Transparency extends to clinicians; tools like WCTM must elucidate match rationale to prevent blind reliance on AI recommendations.

Regulatory gaps further complicate ethical deployment. While agencies like the FDA endorse AI as a “breakthrough” technology, few guidelines address its trial-specific applications. Intellectual property disputes—over who owns AI-generated insights—add legal uncertainty. Collaborative efforts, such as the EU’s Ethics Guidelines for Trustworthy AI, provide blueprints but lack enforcement mechanisms, leaving institutions to navigate ethical gray areas autonomously.

The path forward demands multidisciplinary coalitions. Ethicists, data scientists, and patient advocates must co-design AI systems that prioritize equity, transparency, and participant agency. Only through such collaboration can AI fulfill its potential as an ethical accelerator of clinical research.

The Retention Gap: AI’s Uncharted Frontier

While AI’s impact on recruitment is well documented, its potential to improve retention remains virtually untapped. Participant dropout, which plagues up to 40% of trials, undermines statistical power and inflates costs. AI offers novel solutions: predictive analytics could flag at-risk participants via digital biomarkers, such as changes in medication adherence, mood patterns, or wearable device data, enabling timely interventions.
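
A minimal sketch of such flagging, assuming a logistic risk score over a few engagement signals, might look like the following. The feature names, coefficients, and threshold are invented for illustration and are not drawn from any study or product; a real model would be fitted to trial data and validated before use.

```python
import math

# Hypothetical, hand-picked coefficients for illustration only.
WEIGHTS = {"missed_doses": 0.6, "missed_visits": 0.9, "low_activity_days": 0.2}
INTERCEPT = -3.0


def dropout_risk(features):
    """Map engagement signals to a 0-1 risk score with a logistic function."""
    z = INTERCEPT + sum(WEIGHTS[name] * features.get(name, 0) for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def flag_at_risk(participants, threshold=0.5):
    """Return IDs of participants whose risk score meets the threshold."""
    return [
        pid for pid, features in participants.items()
        if dropout_risk(features) >= threshold
    ]


# Made-up participants keyed by study ID.
participants = {
    "P01": {"missed_doses": 0, "missed_visits": 0, "low_activity_days": 0},
    "P02": {"missed_doses": 3, "missed_visits": 2, "low_activity_days": 4},
}
print(flag_at_risk(participants))
```

A transparent linear score is used here so that study staff can see which signal drove a flag, which matters when the flag triggers outreach to a participant.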

Startups like Brite Health and AiCure pioneer such applications. Brite’s platform analyzes EHR and patient-reported data to predict dropout likelihood, triggering personalized reminders or support resources. AiCure’s computer vision technology verifies medication ingestion via smartphone cameras, ensuring protocol compliance. These tools exemplify AI’s capacity to transform retention from reactive to proactive.

Technical challenges abound. Retention algorithms require continuous data streams—a feat complicated by fragmented digital health ecosystems. Integrating wearable data with EHRs, for instance, demands interoperability standards absent in most healthcare settings. Privacy concerns escalate with continuous monitoring, necessitating novel consent frameworks for longitudinal data use.

Cultural barriers also impede adoption. Clinicians accustomed to traditional retention strategies—phone calls, in-person visits—may resist AI-driven approaches. Demonstrating efficacy through randomized trials is critical but resource-intensive. Early pilots, such as remote monitoring in HIV trials, show promise but lack scalability.

The absence of retention-focused AI research underscores a systemic blind spot. Funding agencies and journals must incentivize studies addressing this gap, ensuring AI’s benefits extend beyond enrollment to encompass trial completion. As retention becomes the next frontier, AI’s role in sustaining participant engagement will define its legacy in clinical research.

Standardization and Global Equity: Future Imperatives

The future of AI in clinical trials hinges on standardization. Heterogeneous outcome measures—ranging from enrollment rates to algorithmic accuracy—complicate cross-study comparisons. Initiatives like SPIRIT-AI and CONSORT-AI aim to harmonize reporting standards, ensuring transparency in AI development and validation processes. Adoption of these guidelines, while voluntary, is critical for building stakeholder trust.

Global equity demands urgent attention. Most AI tools are developed in high-income countries, reflecting disparities in data infrastructure and technical expertise. Transferring these tools to low-resource settings risks algorithmic colonialism—imposing biased models on underrepresented populations. Localized AI development, leveraging region-specific data and priorities, is essential. Partnerships, such as Africa’s SANTHE AI initiative, demonstrate the feasibility of context-sensitive solutions.

Methodological rigor must improve. Current studies often lack external validation, overestimating AI performance in controlled environments. Prospective trials comparing AI-driven recruitment to traditional methods are rare but necessary. Longitudinal studies tracking participant outcomes post-enrollment will clarify AI’s impact on trial success rates and patient safety.

Regulatory evolution is equally vital. Agencies must establish pathways for AI approval, balancing innovation with risk mitigation. Adaptive licensing frameworks, allowing incremental algorithm updates without full re-evaluation, could accelerate iteration while ensuring safety. International coalitions, akin to the International Council for Harmonisation, should harmonize AI regulations to prevent jurisdictional fragmentation.

Ultimately, AI’s success in clinical trials depends on its integration into a human-centric ecosystem. Technologies must augment, not replace, clinician judgment and patient autonomy. By addressing ethical, technical, and equity challenges, AI can transcend its current limitations, ushering in an era of faster, fairer, and more impactful clinical research.

Study DOI: https://doi.org/10.1093/jamia/ocae243

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE
