The Bottlenecks of Traditional Clinical Trials

Clinical trials are among the most resource-intensive processes in modern medicine. Every phase—from patient recruitment and randomization to treatment administration and endpoint assessment—presents logistical and scientific hurdles. Protocol deviations, unexpected adverse events, and inefficient patient monitoring contribute to delays, often stretching trial durations far beyond initial projections. These inefficiencies inflate costs, slow down drug approvals, and create barriers to timely patient access to life-saving treatments.

Recruitment and retention represent some of the most persistent challenges. Many trials struggle to enroll a sufficiently diverse patient population, and high dropout rates exacerbate the problem. Failure to meet recruitment targets often leads to underpowered studies, forcing costly extensions or, in some cases, outright termination of the trial. Conventional approaches, such as site-based advertising and physician referrals, have shown diminishing returns as patient expectations shift towards more personalized and digital-first experiences.

Another critical bottleneck is suboptimal trial design. The standard one-size-fits-all approach often lacks adaptability, failing to adjust to real-time patient responses or evolving clinical evidence. Inefficient dosage adjustments, rigid inclusion criteria, and predetermined treatment pathways lead to unnecessary patient risk and wasted resources. Traditional clinical trial workflows demand a paradigm shift—one that embraces data-driven decision-making and continuous optimization.

Reinforcement Learning: The AI Paradigm Reshaping Clinical Research

Reinforcement learning (RL), a branch of machine learning, is well suited to the complexities of clinical trial optimization. Unlike supervised learning, which fits a model to a fixed, labeled dataset, RL trains an agent that learns by interacting with its environment. Through trial and error, the agent refines its decision-making policy to maximize a predefined reward signal. In the context of clinical trials, this means adjusting protocols in response to accumulating patient data, within the bounds the protocol allows.

One of RL’s greatest strengths is its ability to balance exploration and exploitation. In clinical trials, exploration refers to testing new therapeutic strategies, while exploitation involves leveraging existing knowledge to maximize patient benefit. RL algorithms navigate this balance by continuously refining treatment regimens, allocating resources more efficiently, and prioritizing patient safety without compromising scientific rigor. This adaptability is particularly valuable in dose-finding studies, where RL models can dynamically adjust dosing strategies to minimize toxicity while maintaining efficacy.
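The exploration-exploitation trade-off described above can be illustrated with an epsilon-greedy rule, one of the simplest bandit strategies. This is only a sketch on synthetic data: the function name `simulate_epsilon_greedy`, the response rates, and the value of `epsilon` are all assumptions chosen for illustration, not parameters from any real trial.

```python
import random


def simulate_epsilon_greedy(true_response_rates, n_patients=2000, epsilon=0.1, seed=0):
    """Allocate simulated patients to arms with an epsilon-greedy rule.

    true_response_rates are made-up probabilities used only to generate
    synthetic outcomes; a real trial never knows them in advance.
    """
    rng = random.Random(seed)
    n_arms = len(true_response_rates)
    counts = [0] * n_arms      # patients assigned to each arm so far
    successes = [0] * n_arms   # responders observed in each arm so far

    for _ in range(n_patients):
        if 0 in counts or rng.random() < epsilon:
            # Explore: pick a random arm (always done until every arm has data).
            arm = rng.randrange(n_arms)
        else:
            # Exploit: pick the arm with the best observed response rate.
            arm = max(range(n_arms), key=lambda a: successes[a] / counts[a])
        counts[arm] += 1
        if rng.random() < true_response_rates[arm]:
            successes[arm] += 1
    return counts, successes


# Three hypothetical arms with assumed response rates of 20%, 35%, and 60%.
counts, successes = simulate_epsilon_greedy([0.20, 0.35, 0.60])
print("patients per arm:", counts)
print("responders per arm:", successes)
```

A larger `epsilon` spends more patients on exploration, while a smaller one commits earlier to whichever arm looks best. A real design would also need safety constraints and pre-specified statistical controls that this toy loop leaves out.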

The application of RL extends beyond treatment decisions. It can optimize trial site selection by analyzing historical data on site performance, patient demographics, and logistical constraints. By predicting which locations will yield the highest recruitment success and data quality, RL-driven site selection reduces trial delays and improves operational efficiency. These capabilities position reinforcement learning as a powerful tool for modernizing clinical research methodologies.

Optimizing Patient Recruitment and Retention with AI

Patient recruitment remains a significant barrier to trial success, and a large share of clinical trials reportedly fail to meet their enrollment targets. RL-driven recruitment strategies use predictive modeling to identify promising candidates based on genetic, demographic, and behavioral data. By analyzing electronic health records (EHRs), social media interactions, and past participation patterns, RL algorithms can personalize outreach so that recruitment campaigns are better targeted.

Beyond identifying eligible participants, RL can improve patient engagement through adaptive retention strategies. Traditional retention methods, such as periodic reminders and standardized follow-ups, often fail to address the individual needs of participants. RL models continuously adjust engagement tactics based on patient responses, tuning communication frequency, preferred channels, and incentive structures. This personalized approach has the potential to reduce dropout rates and improve long-term adherence.

Additionally, RL enables real-time intervention when patients exhibit signs of disengagement. If a participant shows declining compliance—such as missing scheduled medication doses or skipping virtual check-ins—the algorithm can trigger proactive interventions. These may include customized support messages, automated appointment rescheduling, or personalized motivational strategies. By addressing retention challenges dynamically, RL minimizes attrition, ensuring trial integrity and statistical power.
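As a rough illustration of how declining compliance might trigger an intervention, the sketch below tracks an exponentially weighted adherence score and maps it to an action. The function names, the decay factor, the thresholds, and the intervention labels are all hypothetical. In an RL setting the mapping from score to action would be learned rather than hard-coded as it is here.

```python
def adherence_score(events, decay=0.7):
    """Exponentially weighted adherence, where recent events count most.

    events is a chronological list of booleans: True if the participant
    completed the scheduled dose or check-in, False if it was missed.
    """
    score = 1.0  # assume full adherence before any events are observed
    for completed in events:
        score = decay * score + (1 - decay) * (1.0 if completed else 0.0)
    return score


def choose_intervention(score):
    """Map an adherence score to a hypothetical escalation tier."""
    if score >= 0.8:
        return "none"
    if score >= 0.5:
        return "support_message"
    return "coordinator_call"


# A participant who was compliant at first, then missed three events in a row.
history = [True, True, False, False, False]
score = adherence_score(history)
print(round(score, 3), choose_intervention(score))
```

The decay factor controls how quickly old behavior is forgotten, so a lower value makes the score react faster to a recent run of missed events.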

Reinforcement Learning in Adaptive Trial Designs

Traditional clinical trials operate on rigid protocols, often failing to incorporate real-time data insights. Adaptive trial designs seek to address this issue by allowing pre-specified modifications based on interim findings, improving efficiency and patient safety. RL is a natural optimization framework for these designs, enabling protocol adjustments as evidence accumulates.

One of the most promising applications of RL is in dynamic treatment allocation. Instead of assigning patients to fixed treatment arms, RL models continuously update allocation probabilities based on patient responses. This ensures that more participants receive the most effective therapies while minimizing exposure to suboptimal treatments. The approach is particularly relevant in oncology trials, where response heterogeneity makes fixed treatment assignments inefficient.
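One common way to update allocation probabilities from observed responses is Thompson sampling with a Beta-Bernoulli model. It is used here as an illustrative stand-in, since the article does not name a specific algorithm. The function name `allocation_probabilities`, the uniform Beta(1, 1) prior, and the interim counts are assumptions made for this sketch.

```python
import random


def allocation_probabilities(successes, failures, n_draws=2000, seed=0):
    """Estimate the probability that each arm is the best one.

    Each arm's response rate gets a Beta(successes + 1, failures + 1)
    posterior (uniform prior). Allocation probabilities are approximated
    by Monte Carlo: the fraction of posterior draws each arm wins.
    """
    rng = random.Random(seed)
    n_arms = len(successes)
    wins = [0] * n_arms
    for _ in range(n_draws):
        draws = [
            rng.betavariate(successes[a] + 1, failures[a] + 1)
            for a in range(n_arms)
        ]
        wins[draws.index(max(draws))] += 1
    return [w / n_draws for w in wins]


# Hypothetical interim data: arm 0 had 2/20 responders, arm 1 had 18/20.
probs = allocation_probabilities(successes=[2, 18], failures=[18, 2])
print(probs)
```

With evidence this lopsided, nearly all of the allocation probability shifts to the second arm. Practical response-adaptive designs usually temper this, for example by keeping a minimum allocation to every arm, so that comparisons remain statistically valid.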

RL-driven adaptive designs can also improve dose optimization. In traditional dose-escalation studies, researchers rely on pre-specified dose levels and escalation rules, which can lead to unnecessary toxicity or under-dosing. RL algorithms adjust dosing schedules by learning from pharmacokinetic and pharmacodynamic data as it arrives, aiming to maximize therapeutic benefit while minimizing adverse effects. This approach could accelerate the identification of optimal dosing strategies, reducing trial duration and patient risk.
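The trade-off between efficacy and toxicity can be made concrete as a reward function. The sketch below is not a full RL dosing algorithm. It only shows one assumed way to score candidate doses, with a hard toxicity cap and a penalty weight. The names `dose_reward` and `best_dose`, the weight, the cap, and the dose estimates are all invented for illustration.

```python
def dose_reward(efficacy, toxicity, toxicity_weight=2.0):
    """Reward = estimated efficacy minus a weighted toxicity penalty."""
    return efficacy - toxicity_weight * toxicity


def best_dose(estimates, max_toxicity=0.3, toxicity_weight=2.0):
    """Pick the admissible dose with the highest reward.

    estimates maps dose (mg) -> (estimated efficacy, estimated toxicity),
    both expressed as probabilities. Doses whose estimated toxicity
    exceeds max_toxicity are excluded outright.
    """
    admissible = {
        dose: (eff, tox)
        for dose, (eff, tox) in estimates.items()
        if tox <= max_toxicity
    }
    if not admissible:
        return None  # no dose satisfies the safety constraint
    return max(
        admissible,
        key=lambda d: dose_reward(*admissible[d], toxicity_weight=toxicity_weight),
    )


# Hypothetical current estimates for four dose levels.
estimates = {
    10: (0.20, 0.05),
    20: (0.45, 0.10),
    40: (0.60, 0.25),
    80: (0.70, 0.50),
}
print(best_dose(estimates))
```

In an adaptive design these estimates would be re-fitted as each cohort's data arrives, and the selected dose would change accordingly. How heavily toxicity is weighted is a clinical judgment, not something the algorithm can decide on its own.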

Ethical and Regulatory Considerations of AI-Optimized Trials

As RL reshapes clinical trial workflows, regulatory agencies must adapt their frameworks to accommodate AI-driven methodologies. Traditional approval processes rely on predefined statistical models and manual oversight, whereas RL introduces an element of real-time decision-making that challenges existing norms. Regulators are now faced with the task of ensuring that AI-optimized trials maintain transparency, reproducibility, and ethical integrity.

One primary concern is algorithmic interpretability. RL models often function as “black boxes,” making it difficult to trace the rationale behind certain decisions. To address this, researchers are developing explainable AI (XAI) techniques that provide human-readable justifications for RL-generated recommendations. Transparent decision-making is essential for building trust among clinicians, regulatory bodies, and trial participants.

Another ethical consideration is equity in trial participation. AI models trained on biased datasets may inadvertently reinforce existing disparities in patient access. Ensuring diversity in training data and incorporating fairness-aware algorithms is critical to preventing RL-driven trials from disproportionately favoring certain demographic groups. Regulators must implement guidelines that mandate fairness audits and bias detection in AI-driven clinical research.
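A fairness audit can start with something as simple as comparing selection rates across demographic groups. The sketch below computes per-group rates and the ratio of the lowest to the highest rate. The function names, group labels, and screening records are hypothetical, and this single metric is only one of many that a real audit would examine.

```python
from collections import Counter


def selection_rates(records):
    """Compute the fraction of screened candidates selected, per group.

    records is an iterable of (group_label, was_selected) pairs.
    """
    screened = Counter()
    selected = Counter()
    for group, was_selected in records:
        screened[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / screened[group] for group in screened}


def disparity_ratio(rates):
    """Lowest group rate divided by highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Hypothetical screening log: group A selected 8 of 10, group B 4 of 10.
records = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = selection_rates(records)
print(rates, disparity_ratio(rates))
```

A ratio well below 1.0 does not prove bias, since eligibility criteria can differ legitimately across groups, but it flags where a closer review of the recruitment model is warranted.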

Sustainability and Environmental Impact of RL-Optimized Trials

Clinical trials are not only resource-intensive but also environmentally burdensome. The traditional trial model involves frequent patient travel, high energy consumption at trial sites, and extensive paperwork—all of which contribute to a substantial carbon footprint. RL-driven trial optimization offers a pathway to sustainability by reducing waste and streamlining operations.

One of the most significant environmental benefits is reduced patient travel. RL-optimized recruitment strategies enable geographically distributed trial participation, minimizing the need for long-distance travel. Additionally, AI-driven monitoring allows for increased use of remote patient assessments, reducing the reliance on physical trial sites. This shift towards decentralized trials lowers transportation emissions and enhances patient convenience.

RL also enhances resource allocation efficiency. By dynamically adjusting trial protocols to eliminate redundancies, AI reduces unnecessary data collection, laboratory tests, and on-site personnel requirements. This leads to lower energy consumption and reduced material waste. Furthermore, by expediting trial completion timelines, RL minimizes the prolonged utilization of resources, making clinical research more environmentally sustainable.

Future Challenges and the Path Forward

Despite its immense potential, RL-based clinical trial optimization is still in its early stages, and several challenges must be addressed before widespread adoption. One major hurdle is data quality and integration. Clinical trials generate vast amounts of heterogeneous data from various sources—EHRs, wearable devices, genomic sequencing, and imaging studies. Ensuring seamless data integration while maintaining accuracy remains a pressing challenge.

Another issue is scalability and generalizability. While RL algorithms can optimize specific aspects of a given trial, generalizing these models across different therapeutic areas and trial designs requires further refinement. Developing standardized RL frameworks that can be applied universally will be essential for broader adoption in the pharmaceutical industry.

Regulatory alignment will also shape the future of RL in clinical trials. The industry must work closely with agencies like the FDA and EMA to establish guidelines that balance AI-driven adaptability with rigorous oversight. Collaboration between data scientists, clinicians, and policymakers will be crucial to ensuring that RL-driven innovations enhance—not compromise—clinical trial integrity.

The AI-Powered Future of Clinical Trials

Reinforcement learning is poised to revolutionize clinical trial workflows, optimizing every stage from patient recruitment to adaptive treatment protocols. By leveraging AI-driven decision-making, RL enhances efficiency, reduces costs, and improves patient outcomes. However, successful implementation will require careful navigation of ethical, regulatory, and technical challenges.

As AI continues to reshape clinical research, interdisciplinary collaboration will be key. The fusion of computational science, biomedical expertise, and regulatory insight will drive the next generation of clinical trials—ones that are faster, more adaptive, and more patient-centric. The future of drug development is not just data-driven; it is reinforcement-learning-powered.

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

Clinical Operations

Beyond the Intervention: Deconstructing the Science of Healthcare Improvement

Improvement science is not a discipline in search of purity. It is a field forged in the crucible of complexity.

Clinical Operations

Translating Innovation into Practice: The Silent Legal Forces Behind Clinical Quality Reform

As public health increasingly intersects with clinical care, the ability to scale proven interventions becomes a core competency.

Read More Articles

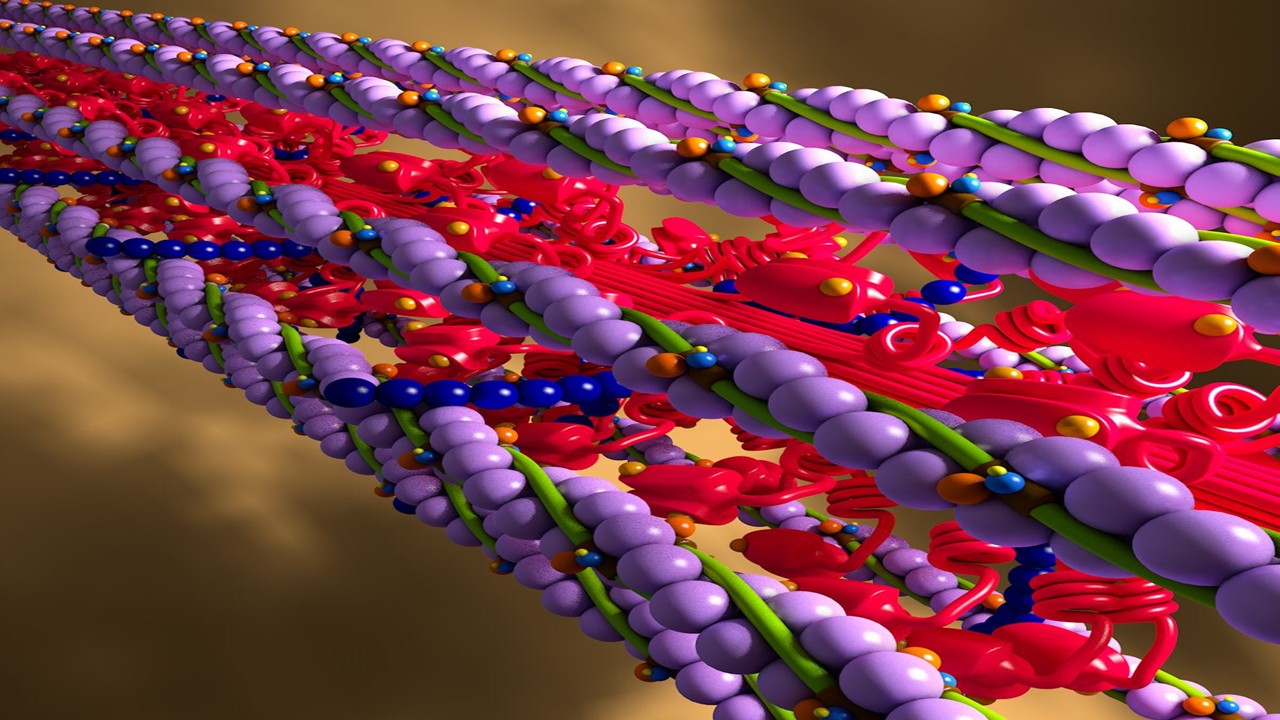

Myosin’s Molecular Toggle: How Dimerization of the Globular Tail Domain Controls the Motor Function of Myo5a

Myo5a exists in either an inhibited, triangulated rest or an extended, motile activation, each conformation dictated by the interplay between the GTD and its surroundings.