The Surge of AI in Clinical Trials

The integration of artificial intelligence into clinical practice has sparked a wave of randomized controlled trials (RCTs), reflecting both optimism and caution. Over 80% of these trials report positive primary endpoints, with deep learning systems dominating applications in medical imaging, particularly gastroenterology and radiology. These systems often focus on diagnostic yield, such as polyp detection in endoscopy or tumor characterization in radiology, yet their real-world clinical utility remains under scrutiny. Single-center designs, limited demographic reporting, and a lack of multicenter collaboration raise questions about the generalizability of findings. Despite promising results, the specter of publication bias looms, as negative outcomes or inconclusive trials may languish in obscurity, skewing the perceived success of AI interventions.

Gastroenterology emerges as a focal point, with video-based deep learning algorithms assisting clinicians in real-time lesion detection during endoscopic procedures. These trials, largely concentrated in China and the U.S., highlight the technical prowess of AI but also reveal homogeneity in study design and investigator groups. Radiology trials, though fewer, emphasize image-based diagnostics, where AI augments radiologists’ interpretations of mammograms or CT scans. Outside imaging, AI applications in structured data—such as electronic health records—leverage decision trees and reinforcement learning for tasks like insulin dosing or sepsis prediction. However, the clinical relevance of these tools hinges on seamless integration into workflows, a challenge underscored by inconsistent operational efficiency outcomes.

The predominance of industry-sponsored trials underscores the commercial race to deploy AI, yet academic contributions remain vital for foundational research. Regulatory approvals for AI-enabled devices often precede rigorous RCT validation, creating a gap between innovation and evidence. For instance, AI systems for diabetic retinopathy screening demonstrate high diagnostic accuracy in trials but face hurdles in scalability across diverse healthcare settings. This disconnect between controlled trials and real-world implementation underscores the need for pragmatic study designs that mirror clinical complexity.

Ethical considerations, such as algorithmic bias and patient privacy, are often sidelined in favor of technical performance metrics. Trials rarely report demographic diversity, with race and ethnicity data omitted in over 75% of studies, potentially masking disparities in AI efficacy. Inconsistent adoption of standardized reporting guidelines, such as CONSORT-AI, further complicates cross-trial comparisons. These gaps threaten to perpetuate inequities, particularly if AI tools are trained on non-representative datasets.

The rapid doubling of AI RCTs since 2021 signals a maturing field but also a pressing need for methodological rigor. Future trials must balance innovation with accountability, ensuring AI’s benefits are both measurable and equitable.

Geographical Imbalances and Specialty Dominance

The U.S. and China lead AI clinical trials, accounting for over 60% of studies, yet their focuses diverge sharply. American trials span diverse specialties—oncology, cardiology, and primary care—while Chinese research clusters around gastroenterology, driven by a handful of academic and corporate groups. This geographical asymmetry reflects differing healthcare priorities and regulatory landscapes, with China prioritizing high-volume procedural specialties and the U.S. exploring broader applications. European trials, though fewer, emphasize multinational collaborations, aiming to validate AI tools across heterogeneous populations.

Gastroenterology’s dominance is emblematic of AI’s affinity for procedural specialties, where video data from endoscopies provides rich training material for deep learning models. These trials often measure success through lesion detection rates, yet few address long-term patient outcomes like cancer survival. Radiology, another AI hotspot, focuses on diagnostic accuracy but struggles with workflow integration, as radiologists weigh AI’s speed against the cognitive load of interpreting alerts.

Surgical and cardiology trials, though fewer, explore AI’s role in predictive analytics, such as forecasting postoperative complications or arrhythmias. These applications face unique challenges, including the dynamic nature of intraoperative decision-making and the need for real-time model adaptability. In contrast, primary care remains underrepresented, highlighting a gap in AI’s potential to address chronic disease management or preventive care at scale.

The concentration of trials in high-income countries neglects low-resource settings where AI could mitigate workforce shortages. Initiatives in sub-Saharan Africa or South Asia, though nascent, demonstrate AI’s promise in tuberculosis screening or maternal health, yet these regions contribute minimally to the RCT landscape. This imbalance risks entrenching an “AI divide,” where cutting-edge tools cater to well-resourced systems while neglecting global health priorities.

Multicenter trials remain rare, with 63% of studies conducted at single sites, limiting insights into AI’s performance across institutions. Efforts like the STANDING Together consortium aim to standardize demographic reporting and validation frameworks, yet adoption is slow. Without harmonized protocols, AI’s promise as a global health tool remains unfulfilled.

Diagnostic Performance vs. Patient Outcomes

AI’s prowess in diagnostic accuracy dominates trial endpoints, yet this narrow focus obscures its impact on patient-centered outcomes. Over 70% of trials prioritize metrics like polyp detection rates or tumor segmentation accuracy, while fewer than 20% assess symptoms, quality of life, or survival. This discrepancy reveals a critical blind spot: superior diagnostic performance does not inherently translate to better patient care. For example, AI-assisted colonoscopies increase adenoma detection, but a corresponding reduction in interval cancers has yet to be demonstrated, underscoring the need for longitudinal studies.

Trials evaluating AI’s effect on care management—such as glycemic control in diabetes or hypotension monitoring in critical care—demonstrate mixed results. While some systems reduce time-in-target-range deviations, others introduce workflow inefficiencies, lengthening clinician interaction times. Similarly, AI tools predicting diabetic retinopathy risk improve referral adherence but show negligible effects on vision preservation, highlighting the gap between process and outcome measures.

Patient behavior and symptom management trials reveal AI’s potential to personalize interventions, such as tailoring physiotherapy regimens via motion sensors or optimizing analgesia using intraoperative nociception monitoring. These applications, however, struggle with patient engagement and data privacy concerns, particularly when AI recommendations conflict with clinician judgment. The ethical implications of AI-driven nudges—such as encouraging end-of-life conversations—remain underexplored, despite their profound impact on care trajectories.

Clinical decision-making trials illustrate AI’s dual role as both collaborator and disruptor. Systems providing mortality predictions in oncology increase serious illness conversations but fail to alter treatment plans, suggesting AI’s influence is nuanced. Conversely, AI tools for stroke risk stratification in atrial fibrillation show no effect on anticoagulant prescribing, exposing the limits of predictive analytics without contextual integration.

The emphasis on technical endpoints risks reducing AI to a diagnostic crutch rather than a therapeutic partner. Future trials must prioritize patient-relevant outcomes, such as morbidity, mortality, and functional status, to align AI’s capabilities with clinical priorities.

Operational Efficiency: A Double-Edged Sword

AI’s impact on clinical workflows is paradoxical, with 35% of trials reporting reduced operational times and 25% noting increases. In radiology and ophthalmology, AI streamlines tasks like image analysis, slashing interpretation times by automating routine measurements. Conversely, gastroenterology trials often report prolonged procedure durations, as clinicians pause to verify AI-generated lesion alerts, balancing caution against efficiency.

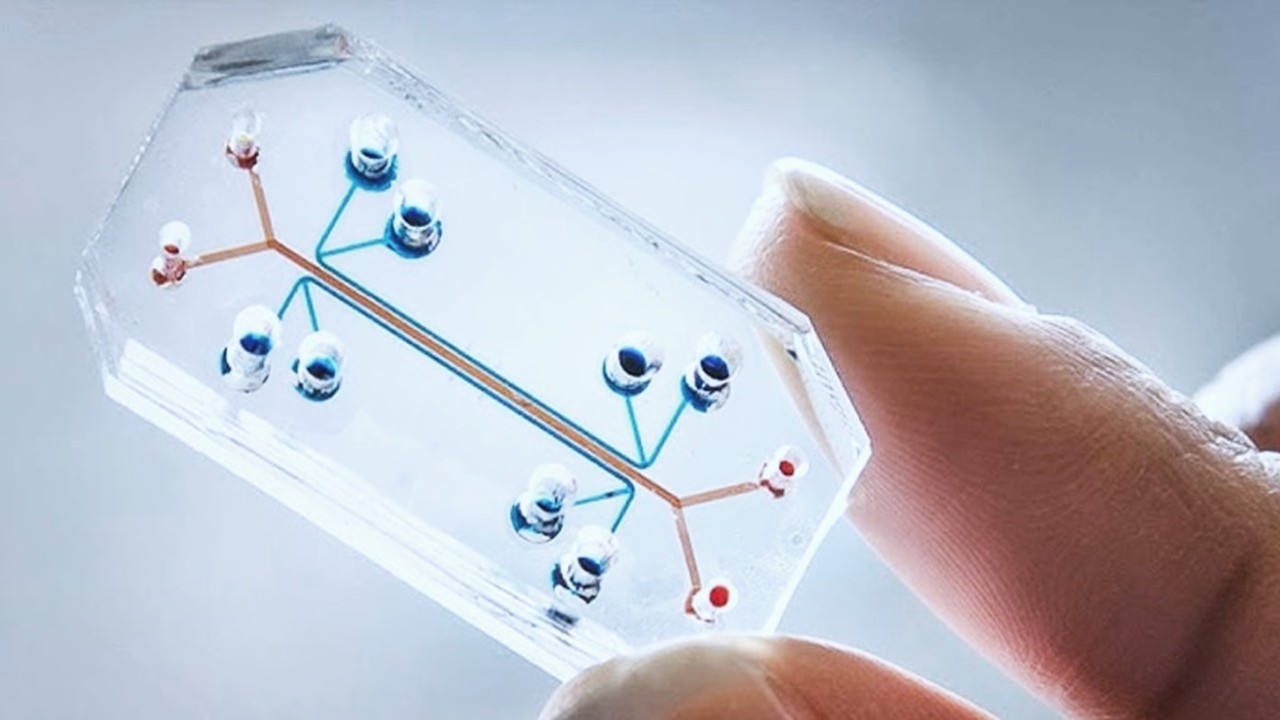

The variability stems from task complexity and integration design. AI systems operating in the background—such as automated insulin dosing—seamlessly enhance efficiency, while those requiring active clinician interaction—like endoscopy assistants—introduce decision-making overhead. Tools leveraging structured data, such as EHR alerts, face usability challenges, with clinicians dismissing alerts due to poor interface design or mistrust.

Operational efficiency metrics also neglect hidden costs, such as the time required for AI model training or data preprocessing. Trials rarely address the resource burden of maintaining AI infrastructure, from cloud storage to computational hardware, which may offset perceived efficiency gains. Furthermore, the learning curve associated with AI tools—often unmeasured in trials—can temporarily degrade workflow efficiency during implementation.

The lack of standardized efficiency metrics complicates cross-trial comparisons. While some studies measure time-to-diagnosis or documentation speed, others focus on resource utilization, such as reduced imaging repeats. Harmonizing these endpoints is critical for evaluating AI’s true operational value.

Efficiency gains must be weighed against clinician burnout risks. Alert fatigue, exemplified by a sepsis prediction model generating excessive false alarms, erodes trust and compounds workload. Future AI systems must prioritize specificity and contextual relevance to avoid undermining their own utility.
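The link between specificity and alert fatigue can be made concrete with a little arithmetic. The sketch below is illustrative only: the sensitivity, specificity, and prevalence values are hypothetical and are not taken from any trial in the review. It applies Bayes' rule to estimate what share of alerts are true positives, and how many false alarms a clinician would see for each true one.

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """Share of alerts that are true positives, via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    if true_pos + false_pos == 0:
        raise ValueError("model never fires an alert with these inputs")
    return true_pos / (true_pos + false_pos)


def false_alarms_per_true_alert(sensitivity: float, specificity: float,
                                prevalence: float) -> float:
    """Expected number of false alarms for every true alert."""
    ppv = positive_predictive_value(sensitivity, specificity, prevalence)
    if ppv == 0:
        raise ValueError("no true alerts with these inputs")
    return (1.0 - ppv) / ppv


if __name__ == "__main__":
    # Hypothetical values chosen for illustration, not trial results.
    sensitivity, prevalence = 0.85, 0.05
    for specificity in (0.80, 0.90, 0.99):
        ppv = positive_predictive_value(sensitivity, specificity, prevalence)
        ratio = false_alarms_per_true_alert(sensitivity, specificity, prevalence)
        print(f"specificity={specificity:.2f}  PPV={ppv:.2f}  "
              f"false alarms per true alert={ratio:.1f}")
```

Under these assumed inputs, most alerts are false alarms until specificity becomes very high, which is one way to see why a low-prevalence condition such as sepsis is prone to alert fatigue.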

The Imperative for Diversity and Generalizability

Current AI trials suffer from homogeneity, with over 80% conducted in single countries and fewer than 5% reporting multiethnic cohorts. In the U.S., trials predominantly enroll White participants, while Chinese trials focus on Han populations, neglecting global demographic diversity. This insularity risks deploying AI tools that falter in underrepresented groups, perpetuating healthcare disparities.

The underrepresentation of pediatrics, geriatrics, and marginalized communities further limits AI’s applicability. Trials in oncology and cardiology skew toward middle-aged adults, neglecting age-specific disease presentations. Similarly, AI models trained on urban hospital data may fail in rural or low-resource settings, where comorbidities and resource constraints differ.

Efforts to diversify training datasets are nascent but critical. Federated learning, which trains a shared model across institutions by exchanging model updates rather than patient records, offers a path to broader representation. However, technical and regulatory barriers, such as data privacy laws and incompatible EHR systems, hinder adoption. Demographic transparency is equally vital. Only 22 trials in this review reported race or ethnicity, and even fewer detailed socioeconomic factors. Initiatives like the STANDING Together framework advocate for standardized reporting, urging trials to disclose dataset demographics and validation across subgroups.
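To show what federated learning involves in practice, here is a minimal sketch of the aggregation step used in federated averaging (FedAvg). It is a simplification under stated assumptions: each site is assumed to have already trained locally and to send only a flat list of model parameters plus its sample count. The function name and the example numbers are hypothetical, and real deployments add secure aggregation, multiple training rounds, and privacy safeguards that are not shown here.

```python
from typing import List, Sequence


def federated_average(site_params: Sequence[Sequence[float]],
                      site_sizes: Sequence[int]) -> List[float]:
    """Combine per-site model parameters into one global model.

    Each site's parameters are weighted by its number of training samples,
    so no patient-level records ever leave the contributing institution.
    """
    if not site_params or len(site_params) != len(site_sizes):
        raise ValueError("need one sample count per site")
    n_params = len(site_params[0])
    if any(len(p) != n_params for p in site_params):
        raise ValueError("all sites must share the same model shape")
    total = sum(site_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged = [0.0] * n_params
    for params, size in zip(site_params, site_sizes):
        for i, value in enumerate(params):
            averaged[i] += value * size / total
    return averaged


if __name__ == "__main__":
    # Hypothetical parameters from two hospitals of different sizes.
    hospital_a = [1.0, 2.0]   # trained on 100 patients
    hospital_b = [3.0, 4.0]   # trained on 300 patients
    print(federated_average([hospital_a, hospital_b], [100, 300]))
```

Weighting by sample count keeps a small site from dominating the global model, although it also means large, demographically narrow sites can still outweigh smaller, more diverse ones.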

Global collaboration is essential to democratize AI research. Multinational consortia could align priorities with regional health needs, ensuring AI addresses conditions like malaria or maternal mortality alongside high-income foci. Without inclusivity, AI risks becoming a tool of inequity rather than empowerment.

Ethical Frontiers: Bias, Autonomy, and Accountability

Algorithmic bias remains a persistent threat, yet fewer than 10% of trials address mitigation strategies. Models trained on homogeneous datasets may misdiagnose conditions like skin cancer in darker skin tones or underestimate cardiovascular risk in women. Retrospective audits of deployed systems, such as a sepsis prediction tool exhibiting racial bias, reveal the consequences of inadequate diversity in training data.
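A subgroup audit of the kind described above can be as simple as computing sensitivity separately for each demographic group. The sketch below is an illustration, not a method drawn from the reviewed trials: the record layout, the group labels, and the toy data are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record layout: (group label, true label, predicted label),
# where 1 means disease present / model flagged and 0 means the opposite.
Record = Tuple[str, int, int]


def sensitivity_by_group(records: Iterable[Record]) -> Dict[str, float]:
    """Return the true-positive rate for each group with at least one case."""
    true_pos: Dict[str, int] = defaultdict(int)
    false_neg: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true != 1:
            continue  # sensitivity only concerns patients who have the disease
        if y_pred == 1:
            true_pos[group] += 1
        else:
            false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


if __name__ == "__main__":
    # Toy data for illustration only.
    toy_records = [
        ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
        ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 0, 1),
    ]
    for group, value in sorted(sensitivity_by_group(toy_records).items()):
        print(f"{group}: sensitivity={value:.2f}")
```

A large gap between groups is a signal to investigate further. It does not by itself establish the cause, and small subgroups will produce noisy estimates.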

Patient autonomy emerges as an ethical flashpoint in AI-driven decision-making. Trials exploring AI’s role in end-of-life planning or mental health interventions report increased clinician-patient discussions but raise concerns about algorithmic determinism. When AI predictions influence care plans, patients and providers must grapple with the opacity of “black-box” models, challenging informed consent.

Accountability frameworks for AI errors are absent from most trials. Unlike human clinicians, AI systems lack malpractice insurance or licensure, complicating liability in adverse outcomes. The regulatory vacuum surrounding AI’s clinical role exacerbates these uncertainties, with few trials proposing governance models.

Data privacy risks escalate as AI tools process sensitive health information. While trials anonymize data, real-world deployments must guard against re-identification, particularly with wearable devices transmitting continuous biometric data. Encryption and federated learning offer partial solutions, but ethical trials must prioritize patient data sovereignty.

The ethical imperative for AI extends beyond efficacy to equity, transparency, and respect for human agency. Trials must embed ethicists in design teams, ensuring AI serves as a tool for enhancement rather than replacement of clinical judgment.

Future Directions: From Bench to Bedside

The next generation of AI trials must prioritize multicenter designs to validate generalizability. Projects like the EU’s MELLODDY consortium, which applied federated learning to preclinical data held by multiple pharmaceutical companies, exemplify collaborative potential. Similarly, adaptive trial designs could evaluate AI’s evolving performance as models learn from real-world data.

Patient-relevant endpoints—mortality, functional recovery, quality of life—must supplant surrogate markers. Longitudinal studies tracking AI’s impact on chronic disease progression or survivorship will bridge the gap between diagnosis and outcome. Interoperability standards, such as FHIR for EHRs, are critical for scaling AI tools. Seamless integration reduces implementation friction, allowing clinicians to focus on care rather than software navigation.

Regulatory reforms should incentivize negative-result publications and mandate demographic reporting. Public-private partnerships could fund trials in underrepresented specialties or regions, aligning innovation with global health needs.

Ultimately, AI’s success in healthcare hinges on its ability to complement—not replace—the human touch. By anchoring trials in clinical relevance and ethical rigor, AI can fulfill its promise as a transformative ally in medicine.

Study DOI: https://doi.org/10.1016/S2589-7500(24)00047-5

Engr. Dex Marco Tiu Guibelondo, B.Sc. Pharm, R.Ph., B.Sc. CpE

Subscribe

to get our

LATEST NEWS

Related Posts

Clinical Operations

Beyond the Intervention: Deconstructing the Science of Healthcare Improvement

Improvement science is not a discipline in search of purity. It is a field forged in the crucible of complexity.

Clinical Operations

Translating Innovation into Practice: The Silent Legal Forces Behind Clinical Quality Reform

As public health increasingly intersects with clinical care, the ability to scale proven interventions becomes a core competency.

Read More Articles

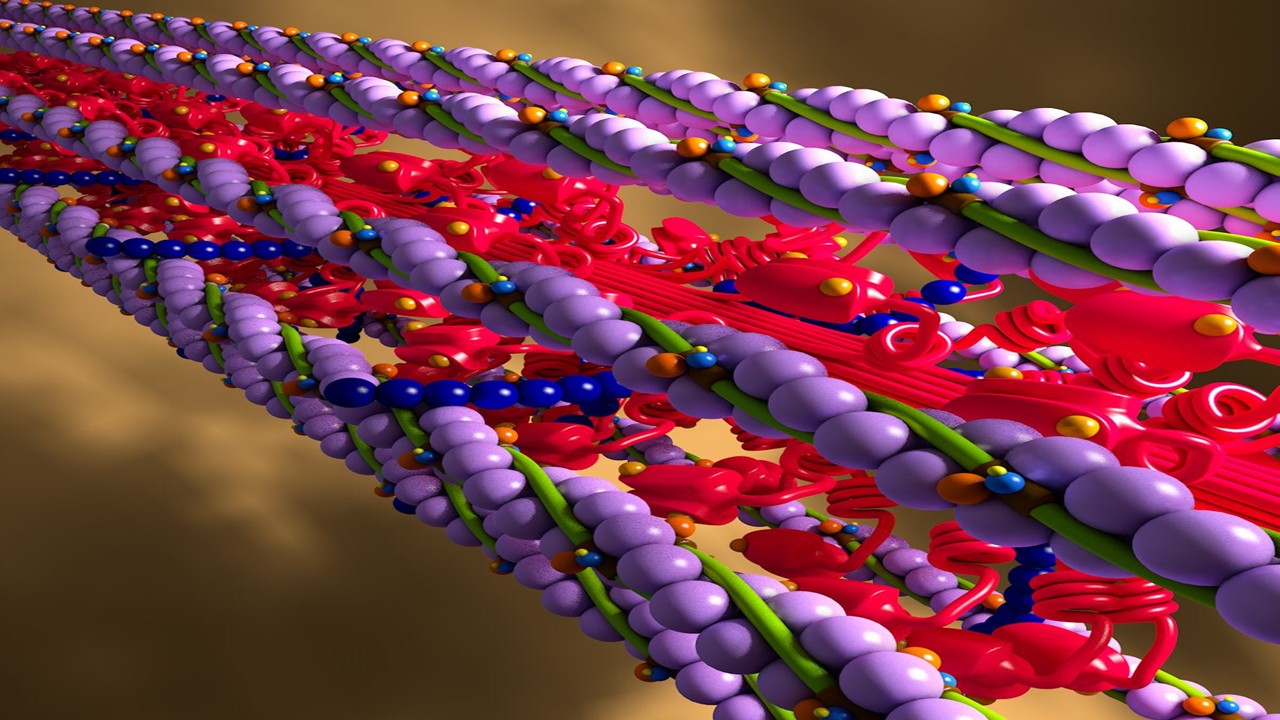

Myosin’s Molecular Toggle: How Dimerization of the Globular Tail Domain Controls the Motor Function of Myo5a

Myo5a exists in either an inhibited, triangulated rest or an extended, motile activation, each conformation dictated by the interplay between the GTD and its surroundings.