Failure to reach the desired safety profile is one of the main reasons for delayed drug development. Advancements in preclinical studies have seen the optimisation of in vitro assays to better predict toxicity in drug discovery. Computational methods like in silico modelling show potential to introduce more rapid and cost-efficient toxicity screening, and address some of the limitations of current in vitro techniques.

Toxicity is a measure of any undesirable or adverse effect of chemicals. The chemical toxicity of a drug is a critical part of FDA approval, which determines whether the therapeutic effect outweighs potential adverse effects. Toxicity failures of drug candidates are a concern in the pharmaceutical industry because they pose a threat to patient health and increase the overall cost of the drug development process. Toxicity endpoints of concern include:

• Irritation and corrosion

• Respiratory toxicity

• Endocrine disruption

• Acute oral toxicity

• Cardiotoxicity

• Hepatotoxicity

Hepatotoxicity is damage to the liver caused by exposure to chemical substances. It is the leading cause of drug failure in drug discovery. The mechanism by which the liver breaks down substances is complicated and varies between patients, so hepatotoxicity is considered difficult to detect in preclinical and clinical trials.

Endocrine (hormonal system) disruption is also a concern, especially for drugs that interact with nuclear receptors, including estrogen and androgen receptors. Nuclear receptors are a class of proteins responsible for sensing steroid and thyroid hormones, among other specific molecules. Chemicals that disrupt this system could interfere with the normal functioning of these hormones, which could lead to health problems such as reproductive disorders.

Current methods for predicting drug toxicity

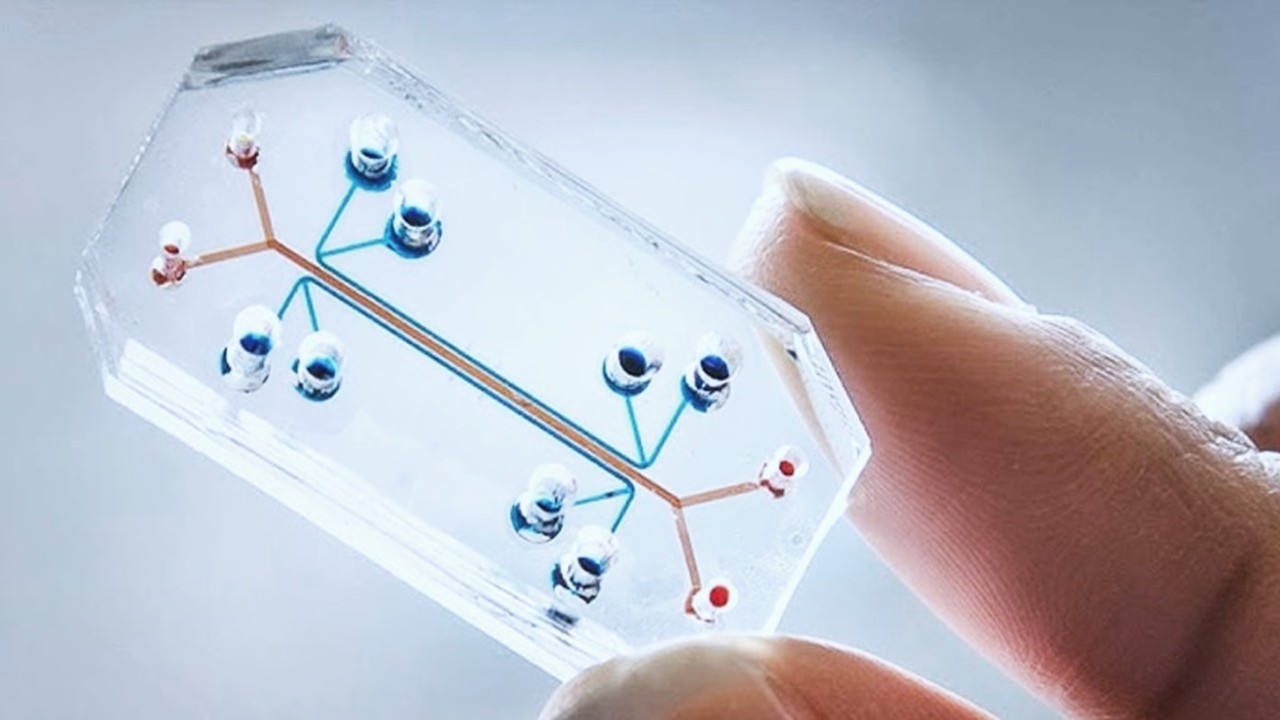

In vitro bioassays are the primary method by which drug toxicity is measured. In vitro toxicology examines the toxic properties of drugs in cells or tissues maintained in controlled lab conditions. These assays need to capture metabolic conversion, as toxicity often arises through metabolic activity. In a 2018 review, traditional toxicity bioassays were said to be “often inaccurate when accounting for reactive metabolites”. High-throughput screening (HTS) and high content analysis (HCA) are now the most popular methods for drug toxicity assessment.

HTS platforms use cell-based assays which measure biological processes such as cell death/growth, protein expression and receptor binding. The output from HTS provides data to inform decisions about drug design and to generate lead compounds with the desired physicochemical properties for the target therapeutic effect.

These assays are important in preclinical studies because they stop a drug from progressing further through development if its safety profile does not meet the appropriate parameters. Stopping early avoids unnecessary further preclinical studies, which reduces the cost of drug discovery and the need for animal models.

Other HTS platforms use cell-free assays, which can still study biochemical activity. A 2020 Nature article highlights that “both formats use a variety of detection technologies including fluorescence and luminescence readouts.” A 2018 study combined organotypic culture models with HTS assays to evaluate chemical toxicity thoroughly, but in a more rapid and cost-effective manner.

An organotypic culture is tissue resected from an organ that preserves the architecture and cellular interactions for further experimentation. This study in particular investigated the toxicant response of multicellular human or rodent liver cell models. The rationale was to address the challenge of identifying hepatotoxicity in a model which more closely resembles in vivo interactions.

Despite the success of HTS and HCA, the data sets produced by toxicology studies using these methods are so large and complex that they can be difficult to process with available data management tools. A 2014 review emphasised this point, stressing the importance of developing “a big data approach” for processing these studies.

More advanced data management tools will help to collate the extracted data into a response profile. This is an important part of toxicology studies, giving detailed information about the drug’s interaction with relevant biological proteins. Automatic data processing was highlighted as a recent method “developed to identify hits (i.e., actives or toxic compounds) from quality-assured HTS data and to increase the data dissemination and reproducibility”.
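As a rough illustration of what automated hit identification can look like, the sketch below flags wells whose viability readout falls far below the plate median using a robust z-score (median and median absolute deviation). This is one common convention in screening analysis, not necessarily the method the quoted review describes. The compound IDs, readout values, the function names and the threshold of -3 are all assumptions made for the example.

```python
from statistics import median


def robust_z_scores(values):
    """Robust z-score per well: (x - median) / (1.4826 * MAD)."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        raise ValueError("MAD is zero; readouts cannot be scaled")
    scale = 1.4826 * mad  # makes MAD comparable to a standard deviation
    return [(v - med) / scale for v in values]


def call_hits(readouts, threshold=-3.0):
    """Return IDs of compounds whose readout lies far below the plate median.

    `threshold` is an assumed cut-off, not a recommended value.
    """
    ids = list(readouts)
    scores = robust_z_scores([readouts[i] for i in ids])
    return [i for i, z in zip(ids, scores) if z <= threshold]


# Hypothetical plate: relative cell viability (%) per compound.
plate = {
    "cmpd_A": 98.0, "cmpd_B": 101.0, "cmpd_C": 97.0, "cmpd_D": 35.0,
    "cmpd_E": 102.0, "cmpd_F": 99.0, "cmpd_G": 100.0, "cmpd_H": 96.0,
}

hits = call_hits(plate)
print(hits)  # compounds flagged as potentially toxic
```

The median and MAD are used here rather than the mean and standard deviation because a handful of strongly toxic compounds would otherwise distort the baseline they are being compared against.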

The development of novel data mining methods was also suggested, in order to extract useful data from various resources. Data mining refers to the process of identifying specific trends or patterns in large data sets, usually with the help of dedicated software.

In silico prediction of chemical toxicity

In silico bioassays are important tools needed to “predict and limit interferent compounds being misinterpreted in fluorescent assay technologies”. Compared with in vitro assays, computational methods are more rapid, cost-efficient and environmentally sustainable, and can be performed before a compound is synthesised. Structural alerts (SAs) are one example of in silico modelling. According to a review, these are chemical structures which indicate or are associated with toxicity. SAs are useful in drug design because they show how compounds can be altered to reduce their toxicity.

Using SAs to predict toxicity allows pharmacologists to identify the structure of potential metabolites implicated in mechanisms that lead to toxicity. Unfortunately, there are a number of limitations highlighted in the aforementioned review. SAs do not provide “insights into the biological pathways of toxicity and may not be sufficient for predicting toxicity”. This is of particular concern as it limits the ability to identify other potential targets a drug may interact with that could lead to adverse effects. Furthermore, “the list of SAs and rules may be incomplete, which may cause a large number of false negatives (i.e., toxic chemicals predicted as non‐toxic) in predictions”.
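The false-negative problem described above can be made concrete with a small sketch. It assumes each compound has already been annotated with the fragments it contains; in practice those fragments would come from a substructure search in a cheminformatics toolkit. The alert list, compound names, fragment labels and toxicity labels are all made up for illustration and are not a validated rule set.

```python
# Hypothetical alert list: fragment names only, not a validated rule set.
STRUCTURAL_ALERTS = {"aromatic_nitro", "epoxide", "aromatic_amine"}


def flag_compound(fragments, alerts=STRUCTURAL_ALERTS):
    """Return the alerting fragments found in a compound (empty set = no alert)."""
    return set(fragments) & alerts


def false_negatives(compounds, alerts=STRUCTURAL_ALERTS):
    """Names of compounds labelled toxic that trigger no alert."""
    return [
        name
        for name, (fragments, is_toxic) in compounds.items()
        if is_toxic and not flag_compound(fragments, alerts)
    ]


# Made-up compounds: (pre-annotated fragments, known toxicity label).
compounds = {
    "cmpd_1": ({"aromatic_nitro", "ester"}, True),
    "cmpd_2": ({"carboxylic_acid"}, False),
    "cmpd_3": ({"quinone"}, True),  # toxic, but "quinone" is not in the alert list
    "cmpd_4": ({"epoxide"}, True),
}

missed = false_negatives(compounds)
print(missed)  # toxic compounds the incomplete alert list predicts as non-toxic
```

Because a compound is only flagged when one of its fragments appears in the list, any toxic chemotype missing from the list passes silently, which is the incompleteness the review warns about.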

Another main challenge with computational modelling is that the majority of mathematical models are constructed to simulate the physiology of an averaged cell population. This presents an issue because the behaviour of individual cells can differ greatly from the population average.
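A toy calculation shows why averaging can mislead. The sketch assumes a deliberately simple threshold model in which a cell is affected once the dose reaches its own tolerance threshold; the thresholds, dose units and function names are hypothetical and chosen only to contrast an "average cell" prediction with the per-cell picture.

```python
from statistics import mean


def fraction_affected(thresholds, dose):
    """Fraction of individual cells whose threshold is at or below the dose."""
    return sum(t <= dose for t in thresholds) / len(thresholds)


def average_cell_affected(thresholds, dose):
    """Prediction from a single 'average cell' that uses the mean threshold."""
    return dose >= mean(thresholds)


# Hypothetical thresholds (arbitrary dose units):
# three sensitive cells and five resistant cells.
thresholds = [2.0, 2.0, 3.0, 9.0, 10.0, 10.0, 11.0, 9.0]
dose = 5.0

print(average_cell_affected(thresholds, dose))  # average-cell prediction
print(fraction_affected(thresholds, dose))      # per-cell result
```

With these numbers the mean threshold is 7.0, so the averaged model predicts no effect at a dose of 5.0, while three of the eight individual cells (37.5%) are in fact affected.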

Computational methods like in silico modelling have the potential to revolutionise drug discovery, and toxicity studies in particular. By reducing the need for animal models, which are expensive and time-consuming, computational biology is hoped to accelerate the drug development process.

Charlotte Di Salvo, Lead Medical Writer

PharmaFeatures
