The life sciences industry may have felt the rush of the Big Data phenomenon later than other fields – but its impact has been no less significant. Advances in next-generation sequencing have made the data ambitions of yesteryear seem paltry compared to our current aspirations – we have moved on from the Human Genome Project to mapping out entire microbiomes and thousands of species. These advances, coupled with innovations in the other omics fields, smart technology that can accelerate the gathering of Real World Evidence, and the growing complexity of our own investigational products, have driven the generation of data to unprecedented levels. How we handle this data and maximize the value obtained from it remains a unique challenge going forward.

Multi-omics Approaches – A Growing Data Footprint

A good example of modern multi-omics data generation and management is the field of oncology. Cancers affect the body at all levels – from the genomic and epigenetic to the transcriptomic, proteomic and even metabolomic. Combined with novel high-throughput technologies that generate this omics data, the trend towards understanding tumours through integrated multi-omics tools is on the rise. This can be illustrated through studies using proteomic analyses to identify critical genes in the development of colorectal cancers, or combining metabolomics and transcriptomics to identify oncogenic pathways for prostate cancer.

Oncology’s need for more composite and reliable biomarkers, as outlined above, is only set to increase. Our aspirations of predicting treatment responses in immunotherapy require data-rich environments, as does the trial design of clinical studies in the field. Read more on these topics in our interviews with Jessicca Rege and Amit Agarwhal. But multi-omics approaches raise many questions – the different omics fields generate data sets that are not only large but also heterogeneous, and that require specialized tools for their integration. Choosing the right methods for integrative approaches remains a challenge.

Most current approaches involve dataset manipulation prior to integration – known as mixed integration – to reduce heterogeneity and accommodate downstream integration. Machine learning models are typically used at this stage, due to the complexity of designing models that can perform integrative analyses at earlier stages. Earlier integration tends to be more effective when datasets are smaller and more homogeneous to begin with – which often raises the question of whether larger data sets from more omics fields are truly necessary. This is because the more omics layers an integrative approach uses, the higher the likelihood of noisy data. Integration of omics with non-omics data, such as clinical data, remains yet more challenging. With the limits imposed by our current technologies, judiciousness and prudence in designing these approaches will be paramount to success. Looking ahead, deep learning models show great promise for revolutionizing integrative strategies.
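
To make the idea of transforming each dataset before combining it more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a method taken from the studies mentioned above: the function names (`top_variance_features`, `zscore_columns`, `mixed_integration`), the toy values and the choice of variance filtering plus z-scoring are all hypothetical stand-ins. Real pipelines would typically use kernels, graphs or learned embeddings as the per-dataset transformation.

```python
from statistics import mean, pstdev

def top_variance_features(block, k):
    """Return the column indices of the k highest-variance features."""
    n_features = len(block[0])
    variances = [pstdev([row[j] for row in block]) ** 2 for j in range(n_features)]
    ranked = sorted(range(n_features), key=lambda j: variances[j], reverse=True)
    return sorted(ranked[:k])

def zscore_columns(block):
    """Standardize each column to zero mean and unit variance."""
    columns = []
    for j in range(len(block[0])):
        col = [row[j] for row in block]
        mu, sigma = mean(col), pstdev(col)
        # Constant columns carry no signal; map them to zero.
        columns.append([(x - mu) / sigma if sigma > 0 else 0.0 for x in col])
    return [list(row) for row in zip(*columns)]

def mixed_integration(blocks, k):
    """Transform each omics block independently, then concatenate per sample."""
    transformed = []
    for block in blocks:
        keep = top_variance_features(block, k)
        reduced = [[row[j] for j in keep] for row in block]
        transformed.append(zscore_columns(reduced))
    # Join the per-block representations sample by sample.
    return [sum(rows, []) for rows in zip(*transformed)]

# Toy data: 4 samples per block; the values are invented for illustration.
transcriptomics = [[5.0, 100.0, 1.0], [6.0, 300.0, 1.0],
                   [5.5, 200.0, 1.0], [5.2, 400.0, 1.0]]
metabolomics = [[0.1, 0.9], [0.4, 0.8], [0.2, 0.7], [0.3, 0.6]]

integrated = mixed_integration([transcriptomics, metabolomics], k=2)
```

Each block is filtered and rescaled separately, so layers measured on very different scales end up comparable before they are joined. Note that filtering on raw variance is itself scale-dependent, which is one reason this should be read as a sketch rather than a recommendation.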

Real World Evidence (RWE) Ramps up Data Volumes

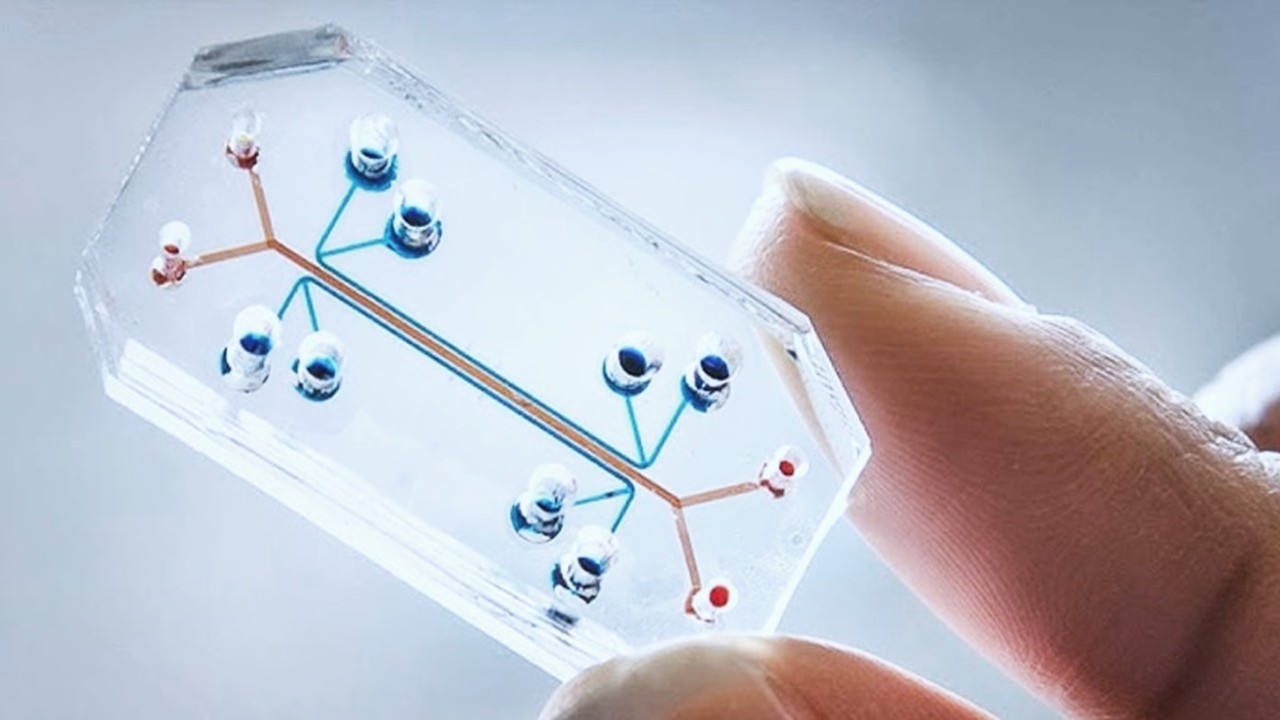

As the pandemic accelerated the appetite for innovation in clinical operations, we saw a phenomenal rise in the adoption of Decentralized Clinical Trials (DCTs) and nontraditional investigative methods and tools. This sparked further interest in the wider virtual trials space – particularly the concomitant use of smart technologies for real-time data gathering that they bring. DCTs, and their associated technologies, have proven that they offer advantages that make them solid contenders for the future. Real world evidence is here to stay, with both the FDA and the EMA indicating an interest in seeing greater adoption even before COVID-19.

RWE poses unique challenges, however – even now there are no globally accepted standard definitions of Real World Data and Real World Evidence, or of the difference between the two. Yet signals from regulatory agencies seem hopeful, and greater cooperation can be expected in the future. The data footprint of RWE can also be massive – which used to be, and sometimes still is, one of the deterrents to wider implementation. Sifting through everyday routine data can be daunting without sophisticated study and data handling designs.

The greater challenge in making proper use of RWE is the fragmented nature of our infrastructure – which has been noted as a problem in nations such as Japan and the United States. Effective database linkage and management will be crucial in extracting benefit from novel technologies. Defragmentation of data will require concerted efforts from across the industry and regulatory landscapes to produce deep data integration while remaining conscious of concerns such as privacy, security and trade interests.

Common Challenges

While the fields of multi-omics and RWE may present some of the biggest and most unique challenges in big data handling across the life sciences, they share many of the same obstacles to future improvement – as does the entirety of the industry. Unlike other fields of science, such as physics, where much of the big data enterprise is centered around a few colliders, biology's rapid transformation into a big data science has been accompanied by a decentralization of technologies such as sequencing and clinical data management. While the democratization of innovation in these ways is a noble affair, it makes the need for improved standards, definitions and procedures for data handling and interpretation correspondingly greater.

Concomitant with the more distributed nature of the big data enterprise in the life sciences is the need for infrastructure that can support it. This includes more than just the technologies that can generate the needed data. Infrastructure such as improved software, cloud-based solutions and data storage facilities will need to be developed further. Innovators across the industry, such as IQVIA and Quantum, are already paving the way towards supporting these infrastructural needs.

The rise of Artificial Intelligence and quantum computing also promises not only to generate more data, but also to drastically expand our capabilities for data interpretation and handling. As the world approaches a point where more than one zettabase (a billion terabases) of sequence data may be generated per year, technological and computational advancements will be pivotal in ensuring we make the most of it.

Yet sometimes it is also worthwhile to take an introspective approach and contemplate the quality of the data we use. Study design optimization has been the best approach to reducing the amount of data needed to the most valuable parts of datasets. As biology navigates its own transformation into a big data enterprise, we must remember that data quality retains importance over data volume. Enriching the quality of the data we gather will remain contingent on improving industry-wide and regulatory collaboration, as well as on technological advancements.

Nick Zoukas, Former Editor, PharmaFEATURES

Strategic partnerships and cooperation are the best approach to paving a way forward in an industry flooded with data. Join Proventa International’s Bioinformatics Strategy Meeting in Boston, 2022, to meet key stakeholders and participate in closed roundtable discussions on cutting-edge topics in the field, facilitated by world-renowned experts.
